Agentic AI promises systems that don’t just generate responses, but take real action for the business. The problem is that most autonomous agents are built on unstructured data, such as emails, PDFs, and scanned documents, that they can’t reliably act on. This article explores why structured data is the missing layer in agentic AI and how a dedicated parsing layer turns promising agents into dependable systems.

Key Takeaways:

- Agentic AI shifts AI from content generation to executing real business actions, dramatically increasing the cost of data errors.

- Autonomous agents require clean, structured, and validated inputs to operate safely at scale. LLMs alone aren’t enough.

- Parseur provides the critical parsing layer that converts real-world documents into reliable, structured data, enabling agents to act with confidence rather than relying on guesswork.

The Shift From “Chatting” To “Doing”

Over the past few years, AI has moved fast. In 2023 and 2024, the focus was largely on generative AI: systems that could write emails, summarize documents, and answer questions with impressive fluency. These tools changed how people interact with software, but for the most part, they stopped at conversation.

By 2026, the conversation has shifted, with Gartner forecasting that 40% of enterprise applications will integrate task-specific AI agents. The next wave of innovation is agentic AI: systems designed not just to respond, but to act. Instead of drafting an email, an AI agent can send it on your behalf. Instead of suggesting next steps, it can execute an end-to-end workflow.

The promise is compelling. According to Kong Inc., 90% of enterprises are actively adopting AI agents, with 79% expecting full-scale deployment within three years. Imagine an AI that manages parts of your supply chain, processes and pays invoices, or updates your CRM automatically as new information arrives. No dashboards. No manual handoffs. Just outcomes.

However, there’s a reality check beneath the hype. While the “brains” of these systems, large language models like GPT-5 or Claude, are increasingly capable, the “fuel” they run on is often unreliable. According to Rubrik, 80% of enterprise data remains unstructured, including emails, PDFs, scanned documents, and loosely formatted attachments. When agents are forced to act on this kind of messy data, errors scale quickly.

This is why many agentic AI initiatives stall or remain experimental. The issue isn’t reasoning or planning. It’s trust in the inputs.

For agentic AI to evolve from an impressive demo into a dependable enterprise infrastructure, it needs a dedicated layer focused on data reliability, one that transforms human-readable information into clean, structured facts before any autonomous action takes place.

What Is Agentic AI?

Agentic AI refers to systems that operate autonomously to achieve goals. Unlike traditional chatbots that wait for a prompt and respond with text, agentic AI systems are designed to perceive information, reason about it, and take action without continuous human input.

In practical terms, an agent doesn’t just answer a question like “What should I do next?” It decides what to do and executes the task itself.

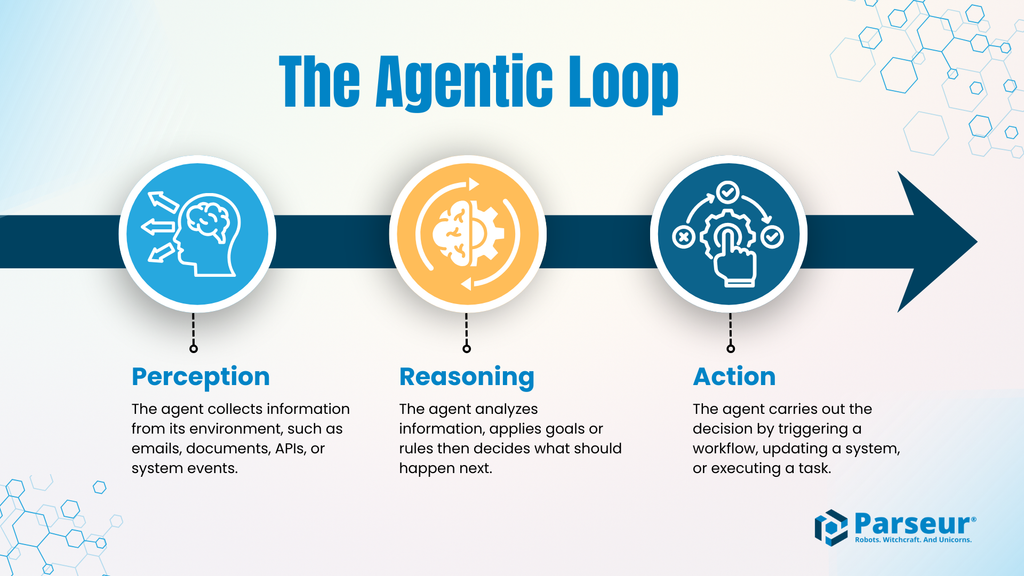

The Agentic Loop: Perception → Reasoning → Action

Most agentic AI systems follow a simple but powerful loop:

- Perception: The agent receives information from its environment. This might include emails, documents, API responses, or system events.

- Reasoning: The agent interprets that information, applies rules or goals, and decides what to do next.

- Action: The agent executes a task, such as updating a record, triggering a workflow, sending a payment, or notifying another system.

This loop repeats, enabling the agent to operate with minimal supervision. As long as it can perceive new information, it can keep acting.
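The loop above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the `Event` shape, the rules inside `reason`, and the action names are all hypothetical, and a real agent would typically delegate the reasoning step to a model or a planner.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Optional


@dataclass
class Event:
    """A piece of incoming information (hypothetical shape)."""
    kind: str
    payload: dict


def reason(event: Event) -> Optional[str]:
    """Reasoning: map an event to an action name, or None to ignore it."""
    if event.kind == "invoice" and event.payload.get("total", 0) > 0:
        return "schedule_payment"
    if event.kind == "shipping_delay":
        return "update_erp"
    return None


def run_agent(events: Iterable[Event],
              actions: Dict[str, Callable[[dict], str]]) -> List[str]:
    """Run perception -> reasoning -> action once per incoming event."""
    log = []
    for event in events:                       # Perception
        action_name = reason(event)            # Reasoning
        if action_name is None:
            log.append(f"ignored {event.kind}")
            continue
        log.append(actions[action_name](event.payload))  # Action
    return log


# Hypothetical actions; real ones would call an ERP, a bank API, and so on.
actions = {
    "schedule_payment": lambda p: f"payment scheduled for {p['total']}",
    "update_erp": lambda p: f"ERP updated for {p['container_id']}",
}
events = [Event("invoice", {"total": 120.5}), Event("newsletter", {})]
print(run_agent(events, actions))
```

Note that the action step trusts whatever the perception step hands it. Nothing in the loop itself checks whether `total` was read correctly.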

Why are the stakes higher?

When AI systems move from generating content to executing actions, the cost of error changes dramatically. A chatbot making a typo is harmless. An autonomous agent misreading a number, misidentifying a customer, or acting on incomplete data can cause real operational issues.

Examples include:

- Paying the wrong invoice amount

- Ordering incorrect inventory quantities

- Updating the wrong customer record

- Triggering workflows based on faulty assumptions

In agentic systems, mistakes don’t just look bad. They break processes.

Generative AI vs. Agentic AI

| Aspect | Generative AI | Agentic AI |
|---|---|---|
| Primary purpose | Generates content such as text, images, or summaries | Executes tasks and workflows to achieve a goal |
| Interaction model | Responds to user prompts on demand | Operates autonomously with minimal human input |
| Typical outputs | Drafts, explanations, suggestions | System updates, transactions, triggered actions |
| Decision-making | Focused on producing coherent responses | Focused on choosing and executing next actions |
| Tolerance for error | Relatively high; mistakes are visible and easy to correct | Very low; errors can impact operations or finances |
| Data requirements | Can work with ambiguous or incomplete information | Requires precise, structured, and validated data |
| Risk profile | Errors are usually cosmetic or informational | Errors can cause operational or financial failures |

The “Ground Truth” Problem

Agentic AI systems operate in a world of logic, APIs, and schemas. They expect inputs structured as JSON or XML, with clearly defined fields, consistent formats, and predictable structures. The business world, however, runs on something very different. Critical information arrives via email, PDFs, spreadsheets, scanned documents, and human-created attachments.

This mismatch is the core bottleneck for autonomous agents.

Before an agent can reason or act, it needs “ground truth”: reliable, machine-readable facts it can trust. Without that foundation, even well-designed agents are forced to improvise.

Why LLMs Alone Aren’t Enough

It’s tempting to assume large language models (LLMs) can simply read raw documents and figure things out. In practice, this approach introduces several risks that compound as automation scales.

Hallucinations

LLMs are probabilistic by design. When information is missing, unclear, or poorly formatted, they don’t stop; they guess. In a conversational setting, that guess may be harmless. In an agentic workflow, a guess becomes an action. Research on autonomous agent behavior consistently shows that hallucination rates increase when models are asked to extract structured data from noisy documents, especially across long or complex inputs.

Cost and latency

Processing full PDFs, long email threads, or scanned documents through LLMs is computationally expensive. Each document consumes tokens, increases latency, and introduces variability in response time. For agents operating in real-time or high-volume environments, this becomes a practical barrier, not just a technical one.

Inconsistency

Autonomous workflows depend on consistency. An agent requires the same field names, data types, and structure each time to reliably trigger downstream actions. Raw LLM outputs can vary subtly from one run to the next, even when the source document looks similar. That variability is enough to break automated workflows.
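To make the consistency requirement concrete, here is a small sketch of a schema check that a workflow could run before triggering anything downstream. The field names and types in `EXPECTED_SCHEMA` are hypothetical, chosen only to illustrate how two extractions of the same invoice can drift apart.

```python
# Hypothetical schema for an extracted invoice record.
EXPECTED_SCHEMA = {
    "vendor_name": str,
    "invoice_number": str,
    "total": float,
    "currency": str,
}


def schema_errors(record: dict) -> list:
    """Return a list of mismatches between a record and EXPECTED_SCHEMA."""
    errors = []
    for field, expected_type in EXPECTED_SCHEMA.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Fields the downstream workflow does not know about are also suspicious.
    for field in sorted(record.keys() - EXPECTED_SCHEMA.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


# Two runs over the same document: the second renames a field and
# returns the total as text instead of a number.
run_a = {"vendor_name": "Acme", "invoice_number": "INV-1",
         "total": 100.0, "currency": "USD"}
run_b = {"vendor": "Acme", "invoice_number": "INV-1",
         "total": "100.00", "currency": "USD"}

print(schema_errors(run_a))  # prints []
print(schema_errors(run_b))
```

The second record looks fine to a human reader, yet it fails three checks. That is the kind of subtle variation that breaks an automated workflow.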

What failure looks like in practice

When agents act on unreliable document data, failures show up quickly and visibly.

An invoice-processing agent misreads a vendor name from a PDF and routes payment to the wrong account. A purchasing agent enters an incorrect quantity on a purchase order and triggers a reorder too late, resulting in stock shortages. A contract-management agent misinterprets a renewal clause buried in a document and applies the wrong pricing terms across multiple accounts.

These are not edge cases. In agentic systems, errors don’t stay isolated. One incorrect extraction can cascade through connected systems, triggering additional actions based on false assumptions. The result is a chain reaction of operational errors that requires manual cleanup, undermining trust in the entire automation effort.

This is the ground-truth problem: agents are ready to act, but without reliable, structured data, they’re operating with blurred vision.

The Missing Layer: Intelligent Document Processing (IDP)

If agentic AI struggles in the real world, it’s not because agents can’t reason or act. It’s because they lack a reliable way to interpret the inputs businesses actually use. This is where Intelligent Document Processing (IDP) becomes essential.

IDP introduces a dedicated parsing layer that sits between messy, human-generated documents and autonomous AI agents. Instead of forcing agents or large language models to interpret raw inputs directly, this layer converts the documents into structured, trustworthy data before any action is taken.

In an agentic architecture, this layer acts as a stabilizer. It ensures that agents operate on facts, not guesses.

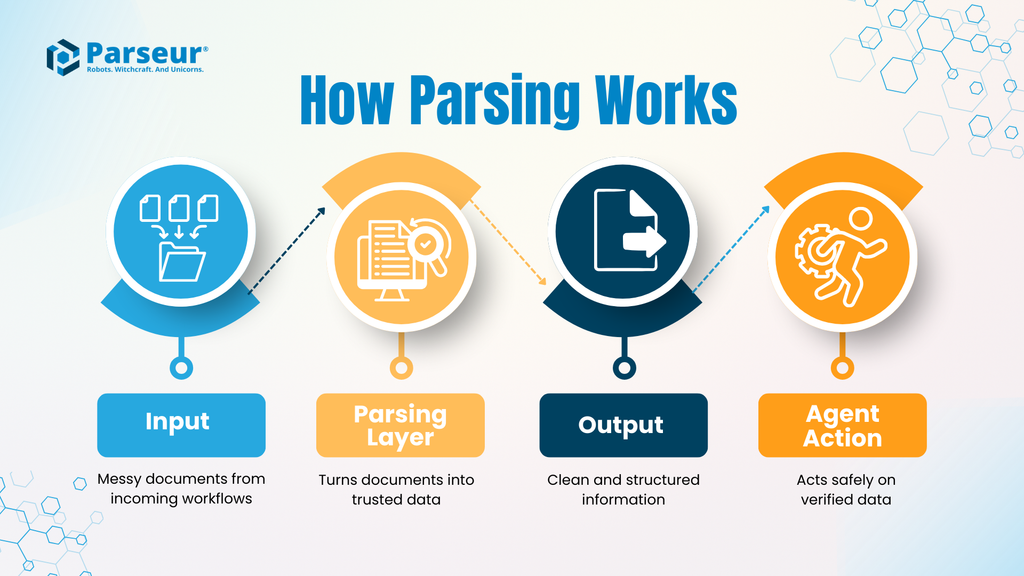

How document parsing works

A typical IDP workflow looks like this:

- Input: A real-world document such as an invoice, bill of lading, contract, or email. These inputs vary widely in format, layout, and structure.

- The Parsing Layer: An IDP tool extracts predefined fields, standardizes formats (e.g., dates, currencies, and identifiers), and validates data against known rules. The goal is not interpretation, but accuracy and consistency.

- Output: Clean, structured data, often in JSON, that conforms to a predictable schema.

- Agent Action: The autonomous agent consumes this structured output and executes its task, confident that the inputs are complete and reliable.

By separating document understanding from decision-making, organizations reduce risk and increase transparency. The agent doesn’t need to “read” documents. It simply acts on verified inputs.
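The standardize-and-validate part of that workflow might look like the sketch below. It is not Parseur’s implementation: the function names, the accepted date formats, and the validation rules are assumptions made for illustration, and the extraction step itself is taken as given.

```python
import json
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Assumed input formats. "05/03/2026" is read day-first here; a real
# pipeline would need to know each sender's convention.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def normalize_date(raw: str) -> str:
    """Convert a date in any assumed format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def normalize_amount(raw: str) -> Decimal:
    """Strip a dollar sign and thousands separators, return an exact Decimal."""
    cleaned = raw.replace("$", "").replace(",", "").strip()
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"unrecognized amount: {raw!r}") from None


def parse_invoice(raw: dict) -> dict:
    """Standardize extracted fields and reject records that fail basic rules."""
    record = {
        "invoice_number": raw["invoice_number"].strip(),
        "issue_date": normalize_date(raw["issue_date"]),
        "total": normalize_amount(raw["total"]),
    }
    if not record["invoice_number"]:
        raise ValueError("invoice_number is empty")
    if record["total"] < 0:
        raise ValueError("total must not be negative")
    return record


raw_fields = {"invoice_number": " INV-1042 ",
              "issue_date": "05/03/2026",
              "total": "$1,250.00"}
# Decimal is not JSON-serializable by default, so it is emitted as a string.
print(json.dumps(parse_invoice(raw_fields), default=str))
```

The important design choice is that bad input raises an error instead of producing a best guess. The agent only ever sees records that passed every rule.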

Why does this layer change the risk profile?

Without a parsing layer, agents are exposed directly to the ambiguity of real-world documents. Every inconsistency becomes a potential failure point. With IDP in place, that uncertainty is absorbed upstream, before automation kicks in.

This distinction matters at scale.

According to Parseur’s 2026 survey, 88% of businesses report errors in document data. An autonomous agent that acts on that data without validation inherits those errors, and at that point it isn’t an asset; it’s a liability.

IDP doesn’t make agents smarter. It makes them safer. It reduces the risk of hallucinations, improves workflow reliability, and enables organizations to automate with confidence without constant human oversight.

In agentic systems, intelligence gets the attention. But reliability is what makes autonomy viable. The parsing layer is the missing link that turns experimental agents into dependable operational tools.

Real-World Use Cases: Where Agents Need Parseur

Agentic AI becomes valuable when it operates inside real business workflows. In these environments, success depends less on abstract intelligence and more on whether the agent receives accurate, structured data when it needs to act. Two common scenarios illustrate where a parsing layer makes the difference between automation that works and automation that fails.

Use Case A: The autonomous supply chain

Scenario:

A shipping delay notification arrives via email from a logistics provider. The message includes a revised arrival date, a container ID, and an updated port schedule, all embedded in free-form text or a PDF attachment.

Without a parsing layer:

The agent scans the email directly and attempts to infer the new arrival date. The formatting varies from previous messages, and the container ID appears in an unexpected location. The agent either misses the update or misreads a critical field. As a result, the ERP system is not updated correctly, downstream planning remains unchanged, and the factory prepares for inventory that won’t arrive on time. Production slows or stops, and the issue is only found after the damage is done.

With Parseur:

The email and attachment are processed by the parsing layer first. Parseur extracts the container ID, revised arrival date, and location using its AI engine and outputs a clean, structured update. The agent consumes this data in real time, reroutes logistics, updates the ERP, and automatically adjusts production schedules. What could have caused a shutdown becomes a controlled, automated response.
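As a rough sketch of the agent’s side of this exchange, the snippet below takes a structured update and decides which actions to trigger. The JSON field names (`container_id`, `revised_arrival`), the action strings, and the `planned_arrivals` lookup are hypothetical; they do not describe Parseur’s actual output format or any specific ERP API.

```python
import json
from datetime import date
from typing import Dict, List


def plan_actions(update_json: str,
                 planned_arrivals: Dict[str, date]) -> List[str]:
    """Turn a structured delay notice into a list of actions to trigger."""
    update = json.loads(update_json)
    container_id = update["container_id"]
    revised = date.fromisoformat(update["revised_arrival"])

    planned = planned_arrivals.get(container_id)
    if planned is None:
        # Unknown container: hand off instead of guessing.
        return [f"escalate: unknown container {container_id}"]

    actions = [f"update_erp: {container_id} arrives {revised.isoformat()}"]
    delay_days = (revised - planned).days
    if delay_days > 0:
        actions.append(f"reschedule_production: shift by {delay_days} days")
    return actions


# Hypothetical structured output from the parsing layer.
update = json.dumps({
    "container_id": "MSKU1234567",
    "revised_arrival": "2026-03-14",
    "port": "Rotterdam",
})
planned = {"MSKU1234567": date(2026, 3, 10)}
for action in plan_actions(update, planned):
    print(action)
```

Because the container ID and date arrive as named fields in a known format, the agent’s logic reduces to a lookup and a date subtraction. It never has to search free-form text for either value.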

Use Case B: The “self-driving” accounts payable function

Scenario:

An organization aims to automate invoice reconciliation. Incoming invoices must be matched against purchase orders, validated, and approved without manual review.

The challenge:

Autonomous AP agents can handle this workflow, but only if every line item, quantity, unit price, tax, and total amount is extracted correctly. Even small inaccuracies can break matching logic or trigger incorrect payments.

Without reliable extraction:

The agent misreads a line-item total or confuses similar product descriptions. The PO match fails, invoices are flagged incorrectly, or worse, approved with incorrect amounts. Human intervention increases, eroding trust in automation.

With Parseur:

Invoices are parsed with pixel-level accuracy. Line items are captured consistently, formats are standardized, and totals are validated before the agent ever sees the data. The agent can confidently match invoices to POs, approve payments, and handle exceptions only when real discrepancies occur.
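The checks described here can be sketched as follows. This is an illustrative two-way match under simplifying assumptions: the record layout, the `sku` key, and the one-cent tolerance are hypothetical, and production AP systems usually add goods-receipt matching and approval rules on top.

```python
from decimal import Decimal


def line_items_total(items: list) -> Decimal:
    """Sum quantity * unit price over all line items, using exact decimals."""
    return sum(
        (Decimal(i["quantity"]) * Decimal(i["unit_price"]) for i in items),
        Decimal("0"),
    )


def reconcile(invoice: dict, purchase_order: dict,
              tolerance: Decimal = Decimal("0.01")) -> list:
    """Return a list of discrepancies; an empty list means the invoice matches."""
    issues = []

    # 1. Internal consistency: line items plus tax must equal the stated total.
    computed = line_items_total(invoice["line_items"]) + Decimal(invoice["tax"])
    if abs(computed - Decimal(invoice["total"])) > tolerance:
        issues.append("invoice total does not equal line items plus tax")

    # 2. Match each invoice line against the purchase order by SKU.
    po_lines = {line["sku"]: line for line in purchase_order["line_items"]}
    for item in invoice["line_items"]:
        po_line = po_lines.get(item["sku"])
        if po_line is None:
            issues.append(f"{item['sku']}: not on purchase order")
        elif Decimal(item["quantity"]) > Decimal(po_line["quantity"]):
            issues.append(f"{item['sku']}: quantity exceeds purchase order")
        elif Decimal(item["unit_price"]) != Decimal(po_line["unit_price"]):
            issues.append(f"{item['sku']}: unit price differs from purchase order")
    return issues


lines = [
    {"sku": "A-1", "quantity": 2, "unit_price": "19.99"},
    {"sku": "B-2", "quantity": 1, "unit_price": "5.00"},
]
invoice = {"line_items": lines, "tax": "3.60", "total": "48.58"}
purchase_order = {"line_items": lines}

print(reconcile(invoice, purchase_order))  # prints []
```

Amounts are kept as strings and converted to `Decimal` so that the comparison is exact. Binary floating point would introduce rounding noise into precisely the checks that are supposed to catch small discrepancies.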

Why Human-in-the-Loop (HITL) Is the Safety Net

One of the biggest barriers to agentic AI adoption isn’t technical capability; it’s trust. Businesses are understandably cautious about autonomous systems making decisions that affect money, operations, or customers. The fear of a “rogue agent” acting on flawed data is real, especially when errors can propagate quickly across connected systems.

This is where human-in-the-loop (HITL) design becomes essential.

HITL doesn’t slow automation down. It makes automation usable in the real world.

How HITL fits into an agentic architecture

In a well-designed system, humans aren’t reviewing every action. They are involved only when uncertainty appears. Parseur plays a key role by acting as the checkpoint before data reaches the agent.

When a document is clear and matches predefined extraction rules, structured data flows directly into the agent’s workflow. When a document is ambiguous, is missing fields, has an inconsistent layout, or contains unexpected values, Parseur can flag it for human review. A person validates or corrects the extracted data, and only then does it move downstream.

This approach creates a controlled decision boundary:

- Agents handle high-confidence, repeatable work

- Humans intervene only when context or judgment is required

The result is a system that scales without sacrificing accuracy or accountability.
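One way to picture that decision boundary is a small routing function placed in front of the agent. The sketch below assumes the parsing layer exposes a per-field confidence score; that assumption, along with the required fields and the 0.9 threshold, is hypothetical and not a description of how Parseur flags documents.

```python
from dataclasses import dataclass

REQUIRED_FIELDS = ("invoice_number", "total")  # hypothetical
CONFIDENCE_THRESHOLD = 0.9                     # hypothetical cut-off


@dataclass
class Extraction:
    fields: dict      # extracted values by field name
    confidence: dict  # assumed per-field score between 0 and 1


def review_reasons(extraction: Extraction) -> list:
    """List the reasons, if any, why a person should look at this record."""
    reasons = []
    for name in REQUIRED_FIELDS:
        value = extraction.fields.get(name)
        if value in (None, ""):
            reasons.append(f"{name}: missing")
        elif extraction.confidence.get(name, 0.0) < CONFIDENCE_THRESHOLD:
            reasons.append(f"{name}: low confidence")
    return reasons


def route(extraction: Extraction) -> str:
    """Send clean records to the agent and everything else to a reviewer."""
    return "human_review" if review_reasons(extraction) else "agent"


clear = Extraction({"invoice_number": "INV-1", "total": "48.58"},
                   {"invoice_number": 0.99, "total": 0.97})
unclear = Extraction({"invoice_number": "INV-7", "total": ""},
                     {"invoice_number": 0.62})

print(route(clear))    # prints agent
print(route(unclear))  # prints human_review
```

Keeping the reasons alongside the routing decision also gives reviewers and auditors a record of why a document was held back.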

Why HITL builds trust instead of undermining it

There’s a common misconception that needing human review means automation has failed. In reality, HITL enables agentic AI to operate safely in complex environments.

Without HITL, organizations are forced to choose between full automation with unacceptable risk or heavy manual oversight that negates efficiency gains. HITL offers a third path: automation with guardrails.

For enterprise adoption, this matters. Auditors, compliance teams, and business leaders need clear answers to questions like:

- What happens when data is unclear?

- Who approves exceptions?

- How are errors prevented from cascading?

A HITL-enabled parsing layer provides those answers.

The takeaway

Human-in-the-loop isn’t a fallback. It’s the safety net that makes agentic AI viable at scale. By catching ambiguity before autonomous actions are triggered, HITL transforms agentic systems from experimental tools into dependable infrastructure that businesses can trust.

Building the Infrastructure for 2026

We are entering the era of Digital Coworkers, autonomous agents that don’t just suggest or respond, but execute critical business workflows at scale. The promise is immense: faster operations, fewer errors, and the ability to focus human attention on high-value decisions rather than repetitive tasks.

That promise comes with a condition: agents are only as reliable as the data they act upon. Launching AI that executes actions on unstructured or messy inputs is a recipe for operational risk, cascading errors, and lost trust. Before automating action, organizations must ensure their data pipeline is accurate, structured, and auditable.

This is where Parseur comes in. Acting as the “engine room” of agentic AI, Parseur transforms emails, PDFs, and documents into precise, machine-readable data. With clean inputs, human-in-the-loop safeguards, and structured outputs, agents can act with confidence, turning ambitious AI workflows into dependable, scalable operations.

Frequently Asked Questions

As agentic AI moves from experimentation to real-world deployment, questions around reliability, data quality, and system design become unavoidable. The following FAQs address some of the most common points of confusion.

What is agentic AI, in simple terms?

Agentic AI refers to systems that perceive information, reason about it, and take autonomous action to achieve a goal. Unlike chatbots, agentic systems don’t just respond; they execute tasks across workflows with minimal human intervention.

Why is unstructured data a problem for autonomous agents?

Most business data arrives in formats designed for humans, not machines. Emails, PDFs, and scanned documents lack a consistent structure, making them difficult for agents to interpret reliably. Acting on this data without validation increases the risk of errors that can cascade across systems.

Can large language models handle document understanding on their own?

LLMs can read documents, but they are probabilistic and inconsistent when extracting precise, structured data at scale. In agentic workflows, this variability can lead to incorrect actions, higher costs, and operational failures.

What role does Intelligent Document Processing (IDP) play?

IDP serves as a dedicated layer that transforms unstructured documents into clean, structured, machine-readable data before they reach an autonomous agent. This separation improves reliability, reduces risk, and makes agentic systems viable in real business environments.
