A Parseur-commissioned survey of 500 U.S. professionals working in document-heavy workflows reveals a striking contradiction: 88% of business leaders say they are very or somewhat confident in the accuracy of the data feeding their analytics and AI systems, yet 88% also report finding errors in document-derived data. This “confidence illusion” suggests that data quality risks are widespread and routinely undermine analytics, forecasting, and AI outputs.

Key Takeaways:

- Confidence vs. reality: 88% report confidence in their data, while 88% also report finding errors in document-derived data.

- Frequency: Nearly 69% of respondents said they find errors sometimes, often, or very often.

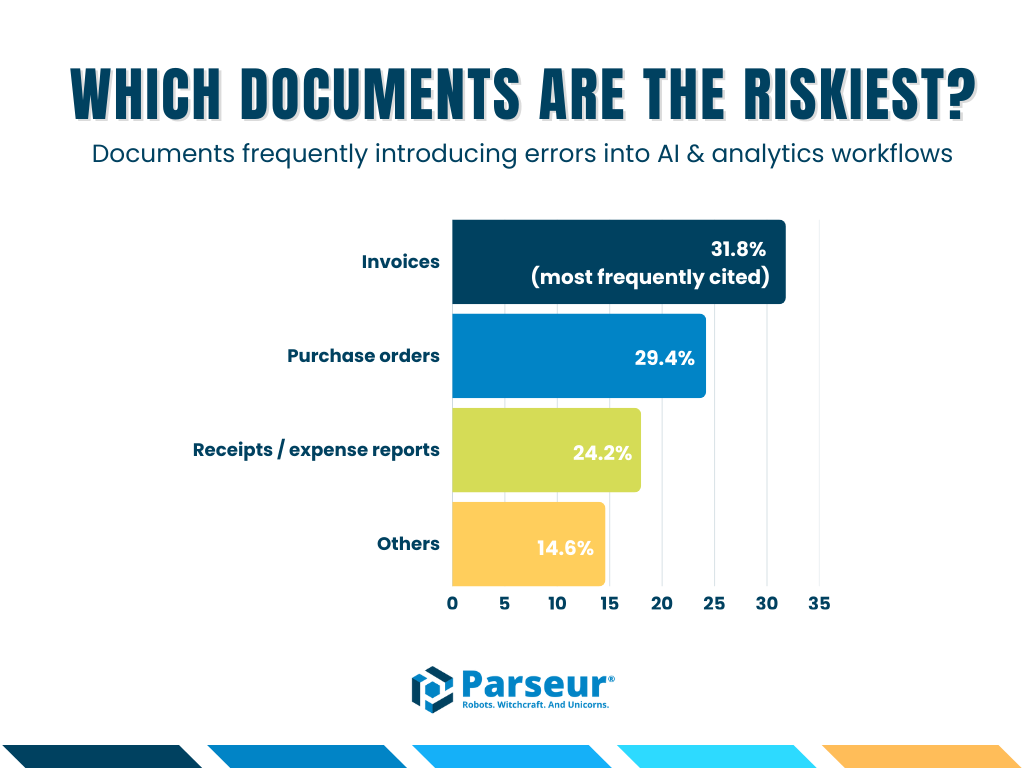

- Top error sources (most-cited): Invoices (31.8%), Purchase Orders (29.4%), Receipts/Expense Reports (24.2%).

- Scope: 500 U.S. respondents across operations, finance, IT, customer support, and related functions (primarily manager, director, and C-suite roles).

Beyond the Numbers: The AI Data Paradox

As organizations accelerate AI adoption in 2026, the conversation has shifted from whether to use AI to how far it can be trusted. Businesses are training Large Language Models (LLMs), deploying Retrieval-Augmented Generation (RAG) systems, and automating analytics pipelines, using internal documents as their primary data source.

Yet beneath this rapid adoption lies a critical contradiction.

According to a new Parseur-commissioned survey of 500 U.S. professionals working in document-heavy workflows, organizations are simultaneously confident in their data and regularly finding errors in it.

This disconnect is what we call the Document Data Confidence Gap: the distance between perceived data reliability and real-world data quality.

The headline finding? A staggering 88% of professionals report encountering errors in the document data used to feed their AI and automation workflows.

This article examines the findings from our 2026 survey, uncovering the root causes of the document data confidence gap, the real-world business costs of unreliable data, and how leading organizations are closing the loop to ensure AI systems are built on accurate, trustworthy information.

Why This Matters: Business Impact and Scale

Bad data is far more than an operational annoyance; it carries tangible financial, operational, and strategic consequences. Even a small extraction error in a high-volume document workflow can ripple through downstream systems, leading to inaccurate reporting, flawed forecasts, and misinformed decisions.

Beyond dollars, data errors also create operational friction: supply chain delays, compliance risks, customer dissatisfaction, and unnecessary rework all stem from flawed document inputs.

The 2026 Parseur survey highlights the real-world scale of these challenges. Across industries, professionals identified invoices (31.8%), purchase orders (29.4%), and receipts/expense reports (24.2%) as the primary “danger zones” where errors are introduced during capture. These errors are rarely isolated; they propagate downstream into analytics dashboards, AI models, and business workflows, increasing both financial and operational costs.

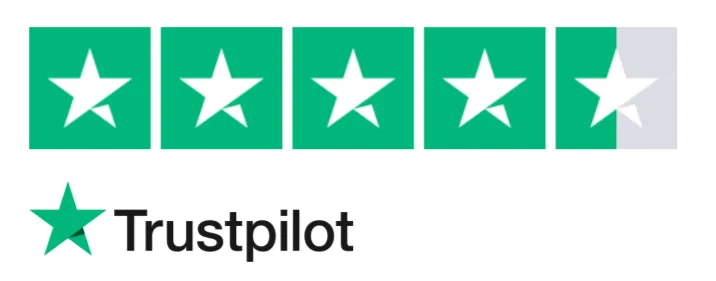

Key quantitative insights from the survey:

- 6+ hours per week: A significant portion of teams spend more than six hours weekly correcting or validating document-derived data, reducing the ROI of automation.

- 28.6% of respondents experience data errors often or very often, showing that errors are a persistent, recurring problem rather than rare exceptions.

- 48.7% of professionals remain “very confident” in the accuracy of data feeding AI, despite frequent errors, illustrating the dangerous confidence gap.

- Operational impacts reported include incorrect forecasts, financial reporting errors, disputes with customers or suppliers, compliance findings, revenue loss, and increased exposure to fraud.

Every unchecked document becomes a potential source of systemic inefficiency and risk. By understanding the scale and consequences of bad data in documents, organizations can prioritize accuracy at the source, establish validation workflows, and adopt human-in-the-loop processes that protect both AI outputs and critical business outcomes.

Why Parseur Conducted This Survey

At Parseur, we work daily with teams automating document workflows across finance, operations, IT, and customer support. While AI-powered extraction has advanced dramatically, we consistently observe a familiar challenge: confidence drops when errors surface downstream in analytics dashboards, AI outputs, forecasts, or compliance reports.

This survey was designed to quantify that challenge, uncover where errors originate, and highlight how organizations are responding. Our goal is to provide business leaders with actionable insight into one of the most underappreciated risks in AI-driven operations: bad document data.

The 88% Reality Check: Errors Are the Norm, Not the Exception

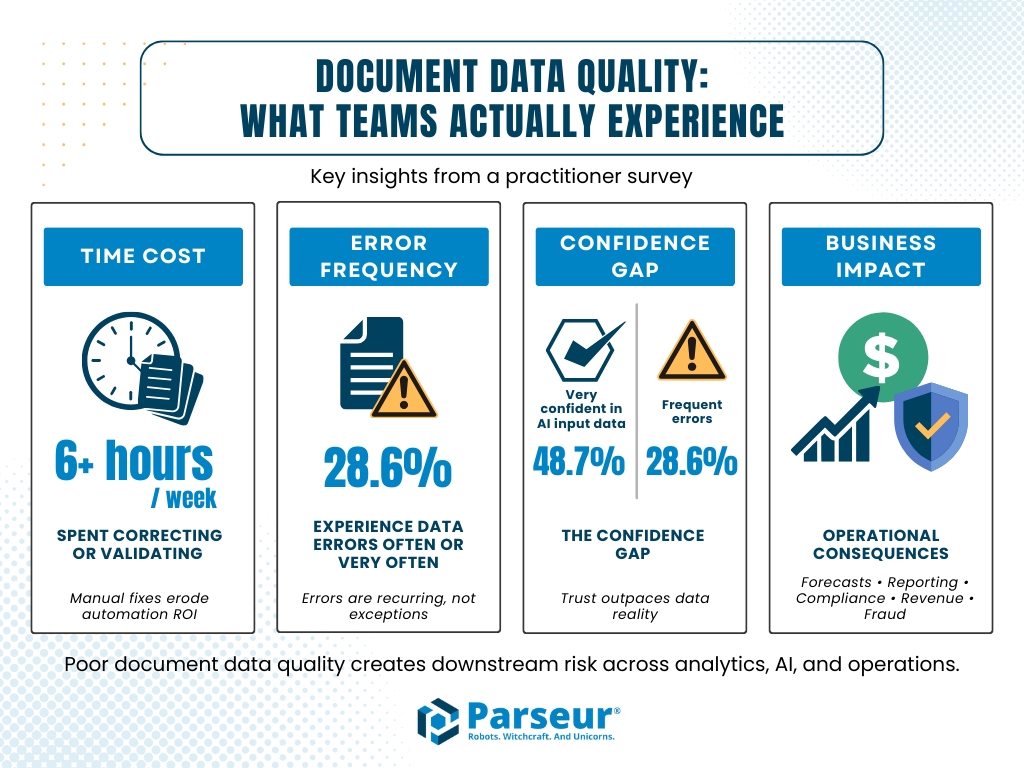

To understand the scope of the document data confidence gap, we asked respondents a straightforward question:

“In processes that require extracting, validating, or reviewing data from documents, do you experience data errors?”

The results were striking.

- 88% of respondents reported encountering errors in their document data

- Only 12% reported completely error-free document data pipelines

These findings reveal a critical insight: despite the widespread adoption of OCR, AI parsers, and other automation technologies, document variability continues to undermine accuracy. Varying layouts and formats, handwriting, inconsistent terminology, and embedded free-text fields all create frequent points of failure. Even small errors, such as a misread invoice amount, an incorrect purchase order number, or a mislabeled customer record, can propagate downstream, affecting AI models, analytics dashboards, and decision-making workflows.

This prevalence underscores that automation alone is not a silver bullet. Without careful monitoring, validation, and human oversight, AI pipelines risk increasing errors rather than eliminating them. The survey makes it clear that businesses must proactively anticipate and address these errors to protect both operational efficiency and strategic decision-making.

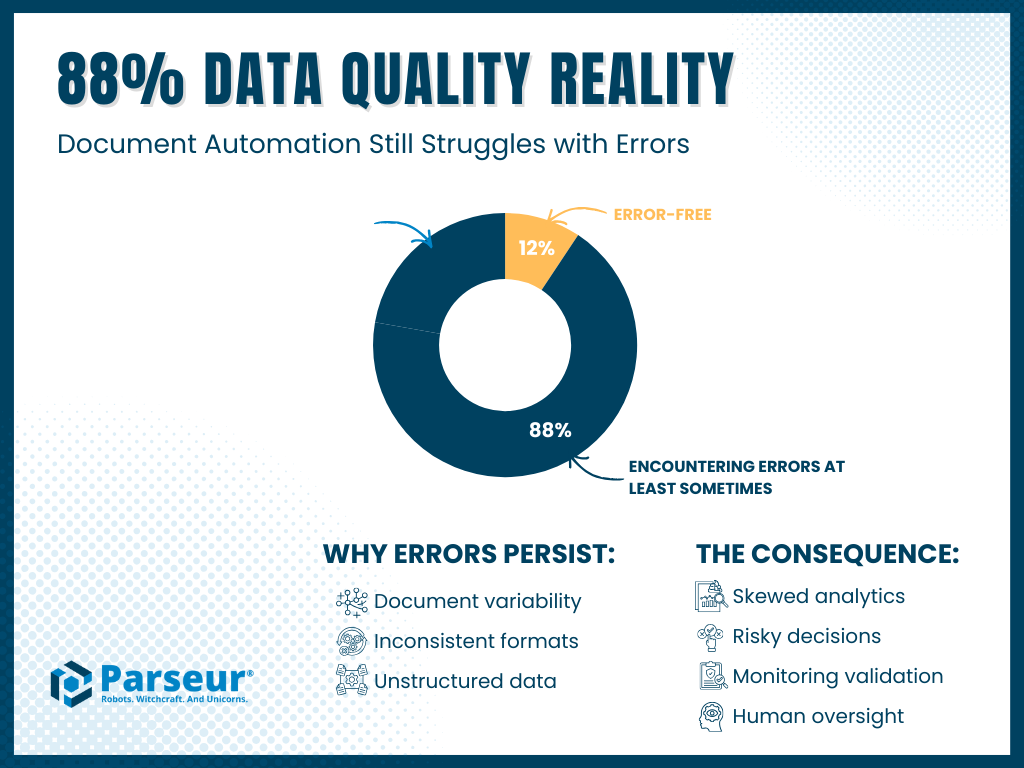

Which Documents Are the Riskiest?

Not all documents are created equal when it comes to errors. Our 2026 survey asked professionals to identify the types of documents that most frequently introduce mistakes into AI and analytics workflows.

The results reveal a clear pattern:

- Invoices: 31.8% (most frequently cited)

- Purchase orders: 29.4%

- Receipts/expense reports: 24.2%

These documents share several key characteristics that make them particularly vulnerable to errors:

- High Volume: Organizations process thousands, sometimes millions, of invoices, purchase orders, and receipts each year. Even a small error rate can translate into significant operational and financial exposure.

- Complex Structure: Many of these documents combine structured data (e.g., dates, line items, totals) with free-text fields, notes, or attachments. This variability makes automated extraction prone to misreads, misalignments, or missing information.

- Critical Decision Impact: These documents directly influence financial reporting, revenue recognition, supply chain operations, and customer interactions. Errors are not just minor inconveniences; they can result in overpayments, delayed shipments, compliance issues, or reputational damage.

In other words, these error-prone documents are the “danger zones” of AI-fed workflows. Failing to address inaccuracies at this stage can magnify mistakes across downstream systems, from analytics dashboards to AI-driven decision-making, creating both operational friction and financial risk.

Operational Impacts Reported (Qualitative)

Respondents linked document data errors to incorrect forecasts, financial reporting issues, disputes with customers or suppliers, compliance and audit findings, operational delays, revenue loss, and increased fraud exposure. Many rated those impacts as moderate or severe.

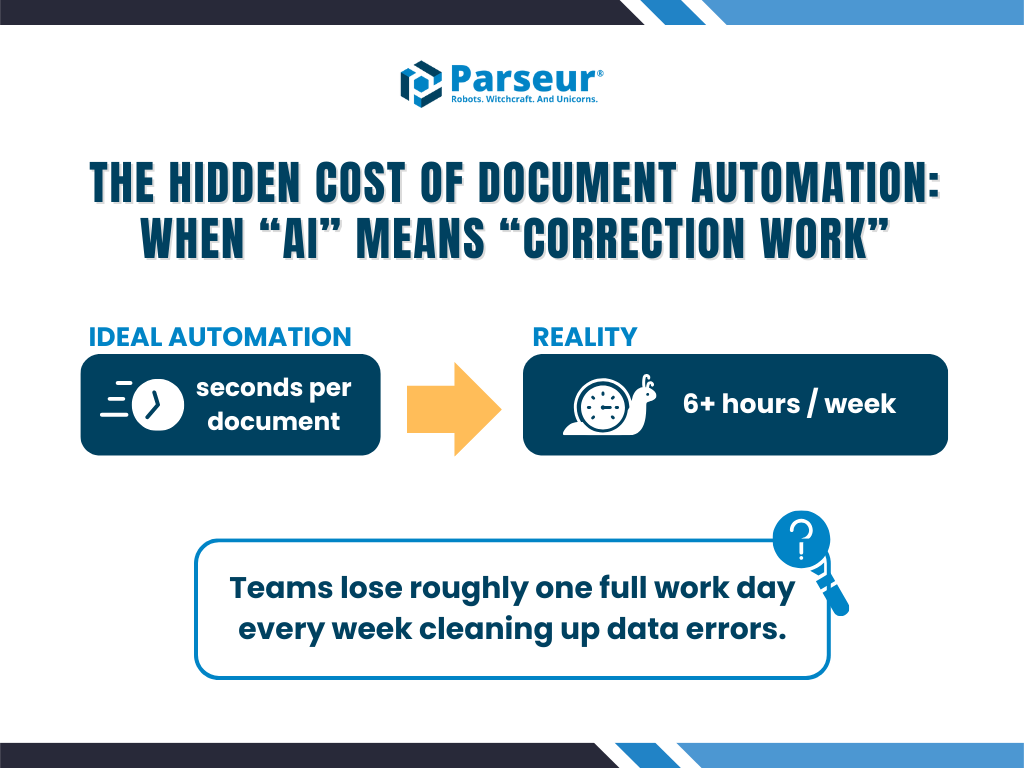

The Hidden Cost: Hours Lost to "The Fix"

One of the most revealing insights from the raw data lies in the human cost of these errors. We asked teams: "Approximately how many hours does your team spend each week correcting or reviewing data?"

The data indicates that automation hasn't removed the human from the loop; it has merely changed their role from "entry" to "correction," often a more tedious and frustrating task.

- The Findings: A significant portion of teams are spending 6+ hours per week just fixing bad data.

- The Impact: This "correction tax" erodes the ROI of automation. Instead of focusing on strategic analysis, skilled professionals (C-suite, IT, and data managers) end up acting as high-paid spellcheckers.

The Hidden Cost: When Automation Becomes “Correction Work”

Automation is supposed to eliminate manual effort, but the survey reveals a different reality.

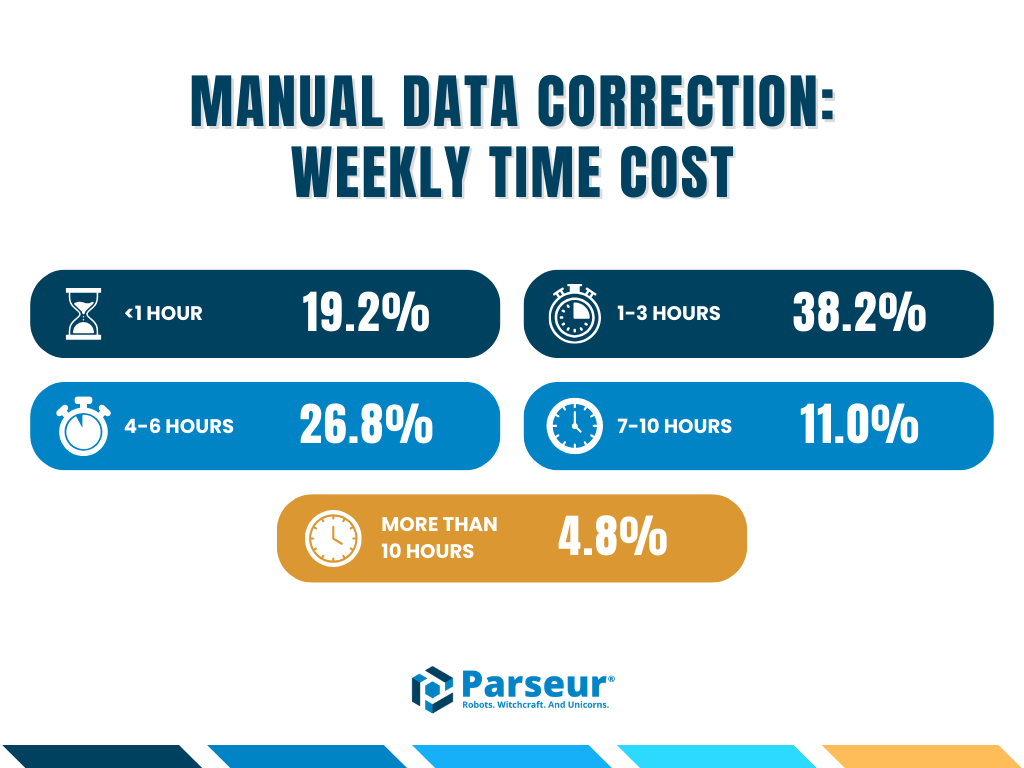

When asked how much time teams spend correcting or reviewing extracted data each week:

- 38.1% reported spending 1–3 hours

- 26.8% reported spending 4–6 hours

- A significant portion reported 6+ hours per week

This creates what many respondents described as a “correction tax”: skilled professionals moving from strategic work to reviewing and fixing AI output.

Instead of accelerating decision-making, flawed data pipelines turn executives, IT leaders, and finance teams into high-cost quality controllers, dramatically reducing automation ROI.
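To make the correction tax concrete, the short Python sketch below converts weekly correction time into annual hours and equivalent work weeks per team. The representative value chosen for each survey band (2 hours for 1–3, 5 hours for 4–6, and the 6-hour floor of the 6+ band) and the 40-hour work week are illustrative assumptions, not survey results.

```python
WEEKS_PER_YEAR = 52
STANDARD_WORK_WEEK_HOURS = 40  # assumption: a standard 40-hour work week


def annual_correction_load(hours_per_week: float) -> tuple[float, float]:
    """Return (hours per year, equivalent 40-hour work weeks) spent on corrections."""
    annual_hours = hours_per_week * WEEKS_PER_YEAR
    return annual_hours, annual_hours / STANDARD_WORK_WEEK_HOURS


# Hypothetical representative values per survey band (not survey data):
# midpoints of the 1–3 and 4–6 bands, and the 6-hour floor of the 6+ band.
for label, hours in [("1–3 h/week", 2), ("4–6 h/week", 5), ("6+ h/week", 6)]:
    yearly, weeks = annual_correction_load(hours)
    print(f"{label}: ~{yearly:.0f} hours/year, about {weeks:.1f} work weeks")
```

Even at the floor of the 6+ band, a team spends more than 300 hours a year, roughly eight full work weeks, on correction rather than analysis.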

Where Errors Start: The Documents Driving the Problem

Not all documents pose equal risk. The most frequently cited error sources (invoices, purchase orders, and receipts/expense reports) share three traits:

- High volume

- Mixed structured and free-text fields

- Direct financial or operational impact

Errors in these documents lead directly to overpayments, disputes, revenue leakage, compliance exposure, and operational delays.

Why the Confidence Gap Exists

We asked participants: "How confident are you in the accuracy of data derived from documents?" Despite frequent errors, nearly half of respondents (48.7%) report being very confident in the accuracy of the data feeding their AI and analytics systems. This disconnect highlights the Confidence Gap: the space between the expectation of AI-driven precision and the reality of stochastic outputs.

Several factors contribute to this gap:

1. Statistical vs. Business Accuracy

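For mission-critical workflows, even 99% field-level accuracy can be insufficient. One error in every 100 fields can have major consequences in finance, compliance, or regulatory reporting, and because each document contains many fields, the share of documents containing at least one error can be considerably higher than 1%. This nuance is often overlooked when AI performance is evaluated purely on accuracy metrics rather than business impact.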
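The gap between field-level and document-level accuracy can be illustrated with a short Python sketch. It assumes every field is extracted correctly with the same probability a and that errors are independent across fields, so a document with n fields is fully correct with probability a^n and contains at least one error with probability 1 - a^n. The 20 fields per document and 10,000 documents per year are hypothetical figures chosen for illustration, not survey data.

```python
def document_error_rate(field_accuracy: float, fields_per_document: int) -> float:
    """Probability that a document has at least one wrong field.

    Assumes each field is correct with the same probability and that
    errors are independent across fields (a simplifying assumption).
    """
    if not 0.0 <= field_accuracy <= 1.0:
        raise ValueError("field_accuracy must be between 0 and 1")
    return 1.0 - field_accuracy ** fields_per_document


def expected_flawed_documents(field_accuracy: float, fields_per_document: int, documents: int) -> float:
    """Expected number of documents containing at least one error."""
    return document_error_rate(field_accuracy, fields_per_document) * documents


# Hypothetical scenario: 99% field-level accuracy, 20 fields per invoice,
# 10,000 invoices per year.
rate = document_error_rate(0.99, 20)
flawed = expected_flawed_documents(0.99, 20, 10_000)
print(f"{rate:.1%} of documents contain at least one error")
print(f"about {flawed:,.0f} flawed documents per year")
```

Under these assumptions, about 18% of documents (nearly one in five) would need a correction even though 99% of individual fields are right. Real-world errors are rarely independent, so treat this as an order-of-magnitude illustration rather than a forecast.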

2. Overconfidence in Generic AI

Generic LLM-based extraction tools may hallucinate or misinterpret fields, particularly in complex or unstructured documents, and often operate without reference data or validation rules. These misinterpretations can propagate downstream, affecting analytics, AI outputs, and operational decisions.

3. The “Black Box” Problem

Users frequently don’t know why or where an error occurred, creating uncertainty and eroding trust in the entire data pipeline.

4. Risk Awareness Among Key Roles

Respondents most skeptical of data accuracy were those closest to risk: IT teams, data professionals, and C-suite executives. Their heightened awareness reflects the serious potential impacts of errors in mission-critical processes.

Strategic Impacts Identified

The consequences of these errors go far beyond minor inconveniences. Survey respondents reported significant business risks, including:

- Financial losses: Overpayment of invoices or missed revenue opportunities

- Compliance risks: Incorrect reporting in regulated sectors such as finance and healthcare

- Operational delays: Supply chains halted due to mismatched purchase order numbers

By understanding these underlying factors, organizations can better design workflows, validation rules, and human-in-the-loop processes to close the confidence gap, ensuring AI outputs are both accurate and actionable.

The Business Impact: More Than Inconvenience

Respondents linked document data errors to tangible business consequences, including:

- Financial losses and overpayments

- Incorrect forecasts and reporting

- Compliance and audit findings

- Customer and supplier disputes

- Operational delays and revenue loss

- Increased fraud exposure

These are not theoretical risks; they are daily operational realities amplified by AI at scale.

Closing the Gap: The Human-in-the-Loop Advantage

The survey data clearly indicate that AI alone is not enough.

Organizations successfully closing the confidence gap are adopting Human-in-the-Loop (HITL) workflows that combine AI speed with verification and control:

- Reliable Extraction: Using specialized AI (like Parseur) grounded in reference data, rather than generic LLMs that can hallucinate.

- Validation Rules: Enforcing strict constraints (e.g., "Total Amount must equal the sum of line items").

- Seamless Review: When confidence is low, the system should flag the document for a quick human check, turning a potential error into a verified data point.

This approach transforms human review from a bottleneck into a precision safeguard, ensuring AI systems are fed trustworthy data.
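A minimal Python sketch of the validation-plus-review pattern described above: one rule checks that the extracted total equals the sum of the line items, and any document that fails a rule or falls below a confidence threshold is routed to a human. The `ExtractedInvoice` structure, the `confidence` score, and the 0.90 threshold are hypothetical placeholders for illustration, not Parseur's API or data model.

```python
from dataclasses import dataclass, field
from decimal import Decimal

# Hypothetical cut-off for illustration; a real threshold would be tuned per workflow.
CONFIDENCE_THRESHOLD = 0.90


@dataclass
class ExtractedInvoice:
    """Hypothetical extraction result; not Parseur's actual data model."""
    total_amount: Decimal
    line_item_amounts: list[Decimal]
    confidence: float  # assumed extraction confidence score in [0, 1]
    issues: list[str] = field(default_factory=list)


def validate_totals(invoice: ExtractedInvoice, tolerance: Decimal = Decimal("0.01")) -> bool:
    """Validation rule: Total Amount must equal the sum of the line items."""
    line_sum = sum(invoice.line_item_amounts, Decimal("0"))
    if abs(invoice.total_amount - line_sum) > tolerance:
        invoice.issues.append(f"total {invoice.total_amount} != line-item sum {line_sum}")
        return False
    return True


def route(invoice: ExtractedInvoice) -> str:
    """Auto-accept clean, high-confidence documents; flag everything else for review."""
    validate_totals(invoice)
    if invoice.confidence < CONFIDENCE_THRESHOLD:
        invoice.issues.append(f"low confidence ({invoice.confidence:.2f})")
    return "human_review" if invoice.issues else "auto_accept"


clean = ExtractedInvoice(Decimal("150.00"), [Decimal("100.00"), Decimal("50.00")], confidence=0.97)
misread = ExtractedInvoice(Decimal("1500.00"), [Decimal("100.00"), Decimal("50.00")], confidence=0.97)
uncertain = ExtractedInvoice(Decimal("150.00"), [Decimal("100.00"), Decimal("50.00")], confidence=0.62)

for name, invoice in [("clean", clean), ("misread", misread), ("uncertain", uncertain)]:
    print(name, route(invoice), invoice.issues)
```

Using `Decimal` rather than floats avoids binary rounding artifacts when comparing currency amounts, and the one-cent tolerance absorbs legitimate rounding differences. Each flagged document carries a list of human-readable issues, which gives the reviewer a concrete starting point instead of a black-box failure.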

Methodology

The Parseur 2026 Document Data Survey was conducted in late 2025 using QuestionPro. It includes responses from 500 professionals working in document-intensive roles across the United States, ranging from managers to C-suite executives.

Industries represented include Technology, Finance, Retail, Healthcare, and Logistics. The survey examined document data accuracy, confidence levels, AI adoption, and the operational impact of data errors.

Looking Ahead: Stop Feeding AI Bad Data

AI systems are only as reliable as the data they consume. As adoption accelerates, organizations that fail to address document data quality risk multiplying errors at scale.

The strategy isn’t to abandon AI; it’s to build trust into the pipeline.

Parseur helps businesses close the Document Data Confidence Gap by combining intelligent document processing with validation, transparency, and human precision so your AI works with certainty, not assumptions.

Ready to reclaim confidence in your data?

Stop feeding your AI bad data and experience document automation you can trust.

Additional Insights from the 2026 Survey

- Error visibility remains common: 39.4% of respondents said they sometimes identify errors in document-derived data (197 out of 500). An additional 28.6% encounter errors often or very often, reinforcing that data inaccuracies are a recurring operational issue rather than isolated incidents.

- Technology and finance lead representation: 43.4% of respondents work in Technology, followed by 23.2% in Finance, two sectors where data accuracy is especially critical for decision-making, compliance, and AI-driven workflows.

- AI adoption is already widespread: 37.9% of respondents report using AI extensively across many workflows, while another 31.4% use AI in a few targeted workflows. This high level of adoption magnifies the downstream impact of document data errors when accuracy is not tightly controlled.
