Key Takeaways:

- Document parsing APIs extract structured data from files you own, such as PDFs, images, and emails.

- Web scraping APIs collect information from public web pages by parsing HTML or rendered content.

- The right choice depends on your data source: files you receive vs websites you want to monitor.

- Many teams use hybrid workflows, scraping to fetch documents and parsing to extract reliable JSON.

Document Parsing API vs Web Scraping API

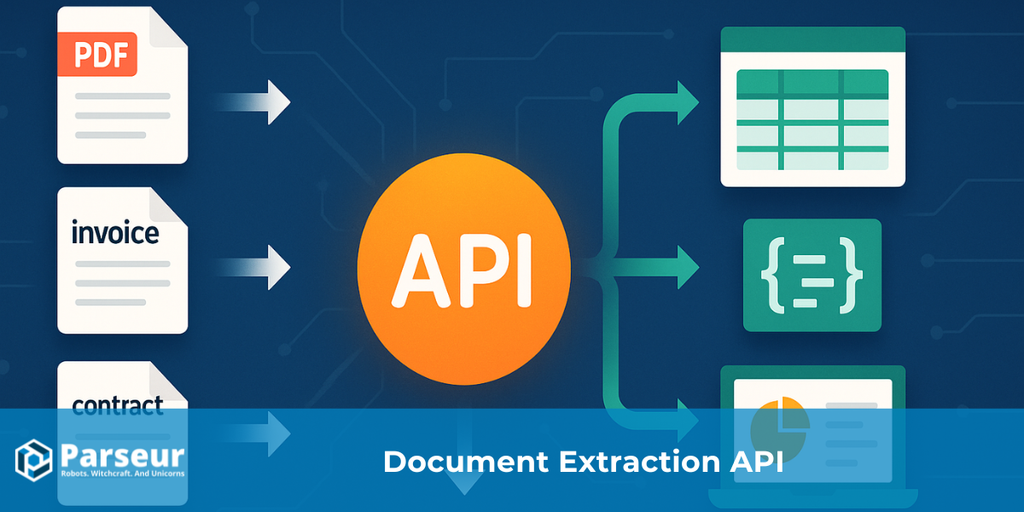

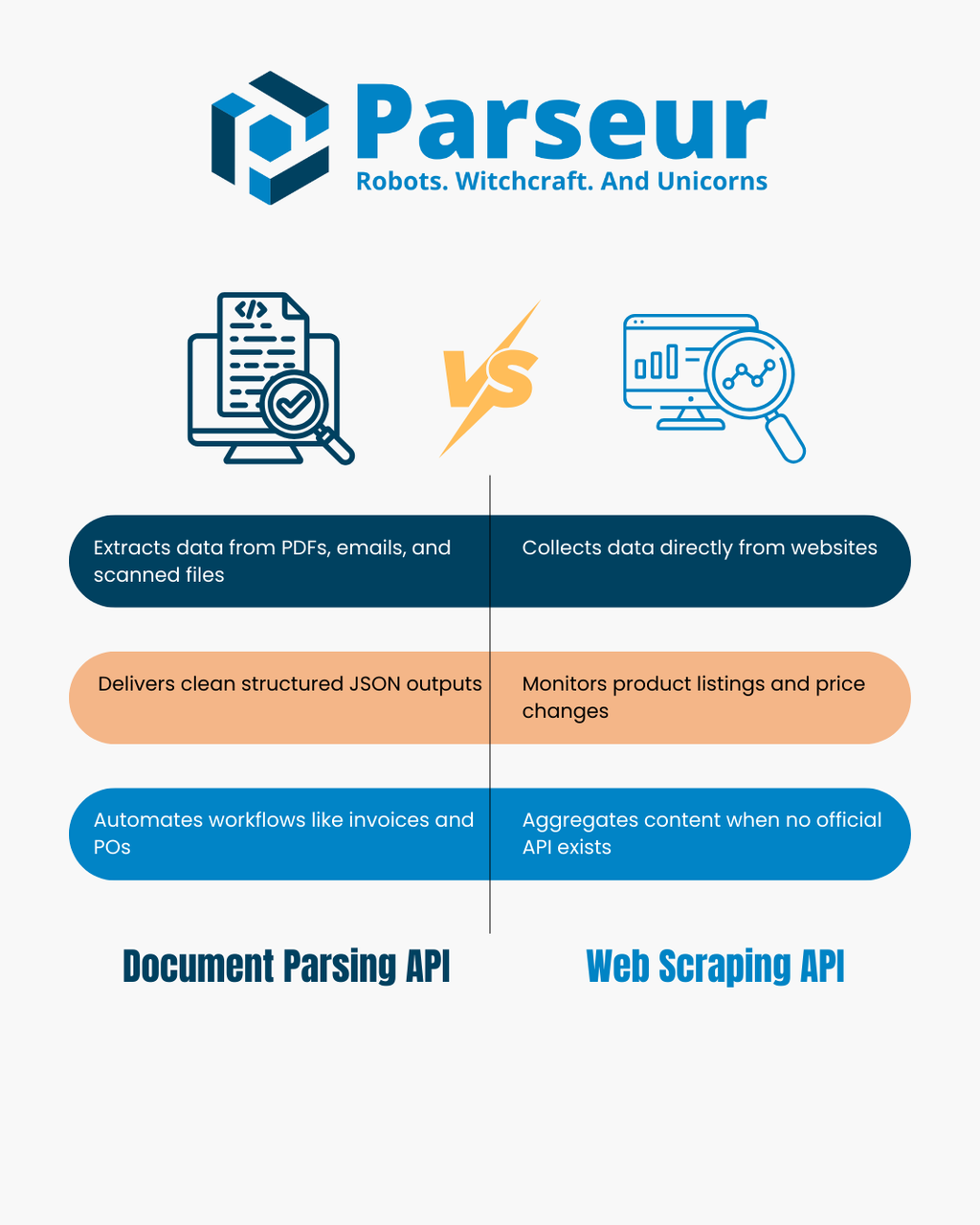

A document parsing API converts files such as PDFs, scanned images, and emails into structured JSON. It analyzes the document’s layout and text to extract key-value pairs and tables, making it easier to automate processes like invoice management, purchase order tracking, or email-to-database workflows.

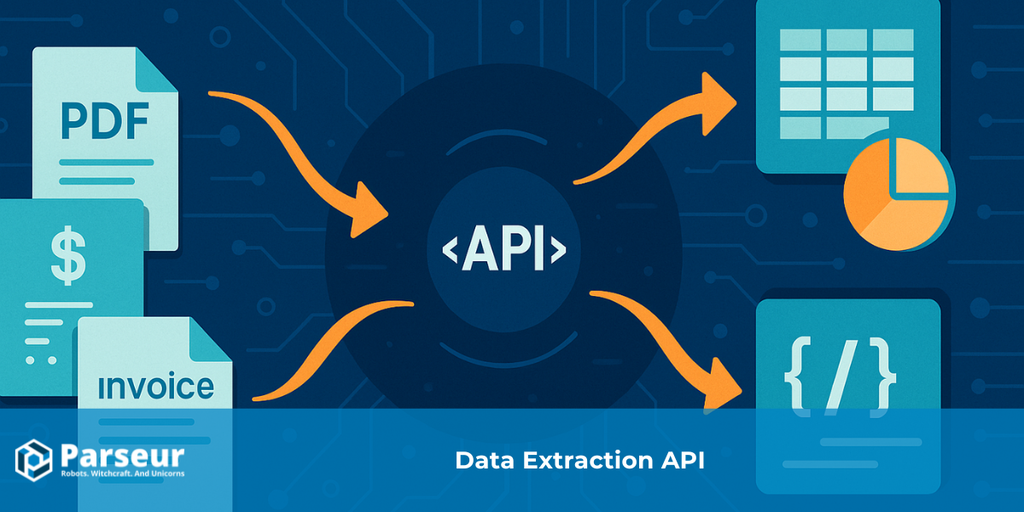

A web scraping API collects data directly from websites by programmatically fetching web pages and parsing the HTML or rendered DOM. When no official API is provided, web scraping is typically used to monitor product listings, track pricing changes, aggregate news content, or build datasets.

Both approaches extract data but work on very different sources: document parsing APIs handle files you own, while web scraping APIs focus on web pages you visit. This article will compare their strengths and limitations and provide a decision tree, a side-by-side table, and real-world scenarios. For a broader context on data automation, see our Data Extraction API guide.

How Document Parsing APIs And Web Scraping APIs Work

Document parsing APIs and web scraping APIs fall under the umbrella of data extraction, but the way they operate and the problems they solve are quite different. Understanding how each one works in practice is the first step to deciding which is the right fit for your business needs.

A study from Scrapingdog reveals that 34.8% of developers now use web scraping APIs, highlighting a clear trend toward structured, ready-to-use data extraction workflows over maintaining custom scraping scripts.

Document parsing API

A document parsing API focuses on extracting structured information from files that you already have or lawfully receive. These can include PDFs, scanned images, emails with attachments, and sometimes office documents. Instead of requiring manual data entry, the API analyzes the document’s layout and text to identify meaningful data points.

- Inputs: PDFs, scans, images, emails, and office files.

- Outputs: Clean, structured JSON containing key-value pairs, tables, and specific fields you define.

- How it works: Using OCR and parsing rules, the API detects text blocks, numbers, and tables. It then maps them into a consistent format that downstream systems (like CRMs, ERPs, or databases) can easily process.

- Typical use cases: Automating invoice and receipt processing, extracting line items from purchase orders, parsing financial statements, or managing large volumes of customer forms. Many teams also use document parsing to transform emails into structured data that can trigger workflows in tools like Zapier, Make, or n8n.
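As an illustration of what a downstream system might do with that structured output, here is a minimal sketch in Python. The payload and its field names (`supplier`, `total`, `line_items`, and so on) are hypothetical and not taken from any specific API; the point is only that key-value fields and line-item tables arriving as JSON can be checked programmatically before they reach an ERP or database.

```python
import json
from decimal import Decimal

# Hypothetical parsed-invoice payload; field names are illustrative only.
PARSED_INVOICE = """
{
  "supplier": "Acme Supplies",
  "invoice_number": "INV-1042",
  "total": "150.00",
  "line_items": [
    {"description": "Paper, A4", "quantity": 10, "unit_price": "5.00"},
    {"description": "Toner", "quantity": 2, "unit_price": "50.00"}
  ]
}
"""


def line_items_total(invoice: dict) -> Decimal:
    """Sum quantity * unit_price over all line items, using exact decimals."""
    return sum(
        (Decimal(item["unit_price"]) * item["quantity"] for item in invoice["line_items"]),
        Decimal("0"),
    )


def totals_match(invoice: dict) -> bool:
    """Check that the extracted total agrees with the extracted line items."""
    return line_items_total(invoice) == Decimal(invoice["total"])


invoice = json.loads(PARSED_INVOICE)
```

A check like `totals_match(invoice)` is one simple way to catch extraction errors before the data triggers a workflow.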

Web scraping API

A web scraping API, by contrast, is designed to extract information directly from the open web. Instead of dealing with files, it fetches data from websites and transforms the content into a usable format. The scraping process can involve fetching raw HTML, rendering pages through a headless browser, and applying selectors or JavaScript evaluation to extract specific fields.

- Inputs: URLs of websites, HTML content, or JSON endpoints.

- Outputs: Parsed and structured data, often in JSON or CSV format, ready for analysis or integration.

- How it works: The API loads a web page, analyzes its DOM (document object model), and applies rules like CSS selectors or XPath to capture fields such as product names, prices, or article headlines. Some tools also manage proxies and anti-bot measures to access websites at scale.

- Typical use cases: Monitoring e-commerce prices across competitors, collecting product catalogs, aggregating news articles, tracking job postings, or creating large datasets where no official API is provided.
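The selector step can be sketched with nothing but Python's standard library. The HTML snippet and the class names (`product`, `name`, `price`) below are made up for illustration; a real scraping API would typically expose CSS or XPath selectors instead of a hand-written parser, but the idea of mapping DOM elements to fields is the same.

```python
from html.parser import HTMLParser

# Made-up markup standing in for a fetched product listing page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the text of name/price spans inside each product list item."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})
        elif tag == "span" and css_class in ("name", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html: str) -> list:
    parser = ProductParser()
    parser.feed(html)
    return parser.products
```

Note how tightly the parser is coupled to the class names: if the site renames `price`, the field silently disappears from the output.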

By design, document parsing APIs are best suited for files you own or receive, while web scraping APIs excel at gathering information from public web pages.

Decision Tree: Which One Do You Need?

Choosing between a document parsing API and a web scraping API often comes down to the source of your data and the end goal you’re trying to achieve. To help make the choice clearer, here’s a simple decision flow with practical explanations for each path.

Is your source a file (PDF, image, or email attachment) that you lawfully possess?

→ Use a Document Parsing API. It will transform these files into clean JSON, extract key fields, and even capture line-item details from tables without manual data entry.

Is your source a public web page or online dataset?

→ Use a Web Scraping API. It will fetch HTML or rendered pages and let you pull out the specific data points you need, such as product listings or news articles.

Do you deal with both documents and websites?

→ Sometimes you’ll need a hybrid approach. For example, you might scrape a vendor portal to download PDFs and then pass those PDFs to a document parsing API for structured extraction.

Do you need structured tables or line items (like invoices, receipts, or purchase orders)?

→ This is where a Document Parsing API shines. It’s designed to handle tabular and financial data where accuracy and schema consistency are crucial.

Do you need real-time updates from dynamic sources (like price changes or breaking news)?

→ A Web Scraping API is the better fit, since it can repeatedly check websites and gather fresh content as it’s published.

This decision tree helps you quickly map your use case to the right tool, or in some cases, a combination of both.
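The core of the decision flow above can be written as a small function. This is a simplified sketch: the `Tool` enum and `choose_tool` are hypothetical names, and the function only encodes the source-based questions (files, web pages, or both), not the finer points about tables or real-time updates.

```python
from enum import Enum


class Tool(Enum):
    DOCUMENT_PARSING = "document parsing API"
    WEB_SCRAPING = "web scraping API"
    HYBRID = "hybrid (scrape, then parse)"


def choose_tool(has_files: bool, has_web_pages: bool) -> Tool:
    """Map the kind of data source to the tool suggested by the decision tree."""
    if has_files and has_web_pages:
        return Tool.HYBRID
    if has_files:
        return Tool.DOCUMENT_PARSING
    if has_web_pages:
        return Tool.WEB_SCRAPING
    raise ValueError("no data source given")
```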

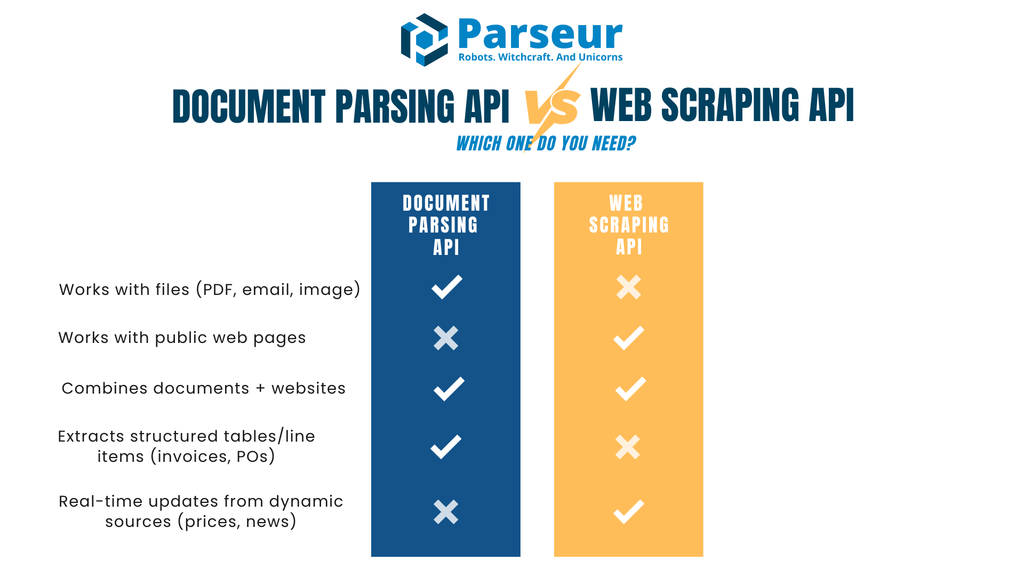

Document Parsing API vs Web Scraping API

When comparing document parsing APIs and web scraping APIs, it helps to look at their strengths and limitations side by side. The table below breaks down standard evaluation criteria, from inputs and outputs to security and compliance, so you can quickly see which solution fits your workflow.

| Criterion | Document Parsing API | Web Scraping API |
|---|---|---|
| Primary Input | Files such as PDFs, scanned images, and emails with attachments | Web pages (HTML/JSON) or rendered DOM content |
| Typical Outputs | JSON with key-value pairs, line-item tables, and structured fields | Parsed HTML converted to JSON or CSV through selectors |
| Change Sensitivity | Stable: once document types are set, parsing remains consistent | Fragile: site layout or DOM changes can break selectors |
| Use Cases | Invoices, purchase orders, contracts, forms, financial statements, operational emails | Product catalogs, pricing updates, job boards, and news aggregation |
| Acquisition | You or your users supply the documents | Data is fetched directly from third-party websites |
| Legal Focus | Privacy and compliance (data controller/processor roles, retention policies) | Terms of Service, robots.txt, anti-bot protections |
| Latency & Scale | Works well with batch jobs, async processing, and webhook delivery | Constrained by crawl rates, anti-bot rules, and concurrency management |
| Maintenance | Occasional template adjustments or schema refinements | Frequent selector updates and anti-bot countermeasures |
| Data Quality | Structured output, validation rules, and normalized fields | Varies by site quality and HTML cleanliness |
| Security | Encryption in transit and at rest; signed webhooks; role-based access | Requires IP rotation, secure proxies, and network hygiene |
| LLM Fit | Ideal for structured JSON input to downstream AI/ML systems | Ideal for unstructured text enrichment, summarization, or classification |
| When to Pick | Use when you already receive documents (e.g., invoices, receipts, contracts) | Use when you need live website content (e.g., prices, stock, headlines) |

When Web Scraping API Is The Right Tool (And How To Do It Responsibly)

Web scraping APIs are often the best option when the information you need is only available on websites and not delivered as files. They allow you to capture data at scale without waiting for a partner, vendor, or customer to send you a document. Scraping works particularly well for market research, price monitoring, and knowledge aggregation projects where updates happen frequently.

Industry data from Browsercat reveals that the global web scraping market was valued at approximately USD 1.01 billion in 2024 and is projected to reach USD 2.49 billion by 2032, growing at an 11.9% compound annual growth rate (CAGR).

Common scenarios where scraping shines include:

- Monitoring prices or product availability across multiple e-commerce sites

- Aggregating news headlines or public announcements from different outlets

- Building datasets of job postings, directory entries, or event listings when no official API is provided

Since web scraping involves collecting information from websites that you do not own, it is important to approach it responsibly. Good practices include:

- Reviewing robots.txt and terms of service before scraping

- Applying rate limits so your crawlers do not overload a server

- Using caching where possible to avoid repeated unnecessary requests

- Identifying your scraper clearly, rather than attempting to disguise it

- Preferring official APIs if the website provides them
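Two of these practices, honoring robots.txt and rate limiting, can be sketched with Python's standard `urllib.robotparser` module. The user agent string, the sample robots.txt, and the `fetch` callable are placeholders for illustration; in a real crawler you would load the site's actual robots.txt and plug in your own HTTP client.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-price-monitor"  # hypothetical, clearly identifying name

# Sample rules for illustration; a real crawler would download the site's file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robots(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser: RobotFileParser, urls: list) -> list:
    """Keep only the URLs that robots.txt allows for our user agent."""
    return [url for url in urls if parser.can_fetch(USER_AGENT, url)]


def polite_delay(parser: RobotFileParser, default: float = 1.0) -> float:
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


def crawl(parser, urls, fetch, sleep=time.sleep) -> dict:
    """Fetch allowed URLs one at a time, pausing after each request."""
    results = {}
    for url in allowed_urls(parser, urls):
        results[url] = fetch(url)
        sleep(polite_delay(parser))
    return results
```

Passing `fetch` and `sleep` in as parameters keeps the sketch testable without network access; it does not replace reading the site's terms of service.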

A practical reality of web scraping is that websites change frequently. A small update to the HTML structure can break your selectors and cause missing or incorrect data. It is essential to set up monitoring and alerts so that you can detect and fix these issues quickly.
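One lightweight way to detect a broken selector is to track how often each required field is actually filled in a batch of scraped records. The sketch below is an assumption about how such a check could look; the function names and the 90% threshold are arbitrary choices, not a standard.

```python
def field_fill_rate(records: list, field: str) -> float:
    """Share of records in which the field is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for record in records if record.get(field) not in (None, ""))
    return filled / len(records)


def broken_fields(records: list, required_fields: list, min_rate: float = 0.9) -> list:
    """Return the required fields whose fill rate fell below the threshold."""
    return [
        field
        for field in required_fields
        if field_fill_rate(records, field) < min_rate
    ]
```

If `broken_fields` returns a non-empty list after a crawl, that is a reasonable trigger for an alert, since a sudden drop in one field usually points to a layout change rather than missing data.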

Finally, in many workflows, scraping is not a standalone solution. For example, you might use scraping to discover or download PDF files from a vendor portal and then rely on a document parsing API to transform those files into structured JSON. This hybrid approach combines the reach of web scraping with the accuracy of document parsing.

Challenges Of Web Scraping APIs

Web scraping APIs can be a powerful way to collect real-time data from websites, but they also come with significant hurdles that businesses must carefully consider. Understanding these challenges helps set realistic expectations and highlights why scraping suits some scenarios better than others.

A recent analysis from Octoparse shows that only about 50% of websites are easy to scrape, while 30% are moderately difficult, and the remaining 20% are especially challenging due to complex structures or anti-scraping measures.

Frequent website changes

Websites are not built with scraping in mind. Even small changes to the HTML structure, like renaming a CSS class or shifting page layout, can break scraping scripts and APIs. This leads to ongoing maintenance costs and the need for active monitoring to ensure data pipelines stay reliable.

Anti-bot measures

Many websites employ protections such as CAPTCHA, IP throttling, session validations, or bot detection algorithms. To avoid disruptions, scraping teams must implement strategies like rotating proxies, managing user-agent strings, and limiting request rates, which adds technical overhead.

Legal and ethical concerns

Web scraping operates in a gray area when it comes to legality. While scraping public data is often allowed, ignoring a website’s terms of service, robots.txt directives, or bypassing paywalls can expose companies to legal and compliance risks. Organizations must establish clear ethical guidelines and, when in doubt, seek legal counsel before deploying large-scale scrapers.

Data quality and consistency

Websites are designed for human viewing, not machine consumption. Scraped data often requires additional cleaning and validation. Inconsistent HTML structures, dynamic JavaScript content, or duplicated records can result in messy datasets that need downstream processing before they become useful.

Scalability challenges

Scaling a web scraping operation is not as simple as adding more requests. High-volume scraping requires strong infrastructure to manage concurrency, retry logic, error handling, and distributed workloads. As the scraping effort grows, costs for proxies, servers, and monitoring tools can rise quickly.
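Retry logic is one piece of that infrastructure that is easy to illustrate. The following is a minimal sketch of retrying with exponential backoff; `fetch_with_retry`, its defaults, and the choice to retry only on `OSError` (which covers connection and timeout errors in the standard library) are assumptions made for the example.

```python
import time


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fetch(url), retrying on network errors with exponential backoff.

    The wait doubles after each failure: base_delay, 2x, 4x, ...
    The last failure is re-raised so callers can log or alert on it.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except OSError:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

Production systems usually add jitter and a cap on the delay, and distribute this logic across workers, which is where much of the cost mentioned above comes from.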

Long-term sustainability

Scraping can be a fragile solution for ongoing business processes. Unlike official APIs or structured document inputs, scraped data pipelines need constant adjustment. Organizations must be prepared to allocate time and resources for maintenance over the long term.

When A Document Parsing API Is The Better Choice

A document parsing API is the right fit when the information you need already comes to you as documents, rather than being published on a website. These documents may arrive as PDFs, scanned images, or emails with attachments. Instead of manually retyping details into a database or ERP, a parsing API automates the process by turning unstructured files into structured data.

According to Sphereco, 80% of enterprise data is unstructured, in formats such as emails, PDFs, and scanned documents, which makes document parsing APIs essential to unlock efficiency and insight.

Typical use cases include:

- Invoice and receipt processing: extracting supplier names, dates, totals, and line-item tables for accounts payable workflows

- Purchase orders and statements: capturing order numbers, amounts, and payment terms for faster reconciliation

- Forms and contracts: pulling out standardized fields such as customer details or signature dates

- Operational emails: converting order confirmations, shipping notices, or booking requests into JSON for integration with downstream systems

A document parsing API is particularly valuable when you need accuracy and consistency. It not only extracts text but can also normalize formats, validate fields, and deliver results through webhooks directly into your application or database. This ensures data is structured, reliable, and ready for automation without additional clean-up.
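To make "normalize formats" and "validate fields" concrete, here is a small sketch of the kind of normalization such a pipeline might apply. The accepted date formats and the handling of `$` and thousands separators are assumptions chosen for the example; a real service would support far more variants.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Assumed input formats for illustration: ISO, day/month/year, and "Month D, YYYY".
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")


def normalize_date(raw: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD) or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def normalize_amount(raw: str) -> Decimal:
    """Strip a dollar sign and thousands separators, then parse as a decimal."""
    cleaned = raw.replace("$", "").replace(",", "").strip()
    try:
        return Decimal(cleaned)
    except InvalidOperation as exc:
        raise ValueError(f"unrecognized amount: {raw!r}") from exc
```

Raising on unrecognized values, rather than passing them through, is what lets validation failures surface before the data reaches a database.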

Document parsing is more stable than web scraping because the file structure changes less frequently than a website’s HTML. Once the parsing instructions are configured, the same rules can reliably handle thousands of documents.

If your business relies heavily on processing vendor documents, customer statements, or emails, a document parsing API is almost always the faster and more sustainable solution.

Hybrid Patterns: Real-World Overlaps

In many workflows, document parsing and web scraping are not competing choices but complementary tools. Businesses often find that their data comes from both documents and websites, so combining the two approaches creates a more complete solution.

Some practical hybrid patterns include:

- Scrape to download PDFs, then parse them: A vendor portal may host invoices or statements as downloadable PDFs. A scraping API can log in and fetch the files, while a document parsing API extracts line items, totals, and other structured data.

- Parse and enrich documents with scraped data: After parsing invoices, you may need additional metadata such as supplier categories or industry benchmarks. A scraping API can collect this context from public sources, while the parsing API ensures the financial details remain accurate.

- Email parsing with website verification: Order confirmations and shipping notices often arrive by email. You can parse the details directly, then use a scraping API to verify live stock availability or current pricing on the supplier’s website.

- Adding intelligence layers: Once structured JSON is available from documents, you can connect the data with scraped website information, then apply analytics or categorization. This layered approach helps teams normalize vendor names, detect anomalies, or map products across sources.
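The first pattern, scrape to download PDFs and then parse them, can be sketched as a two-stage pipeline. Everything here is illustrative: the portal markup is invented, and `download` and `parse_document` are hypothetical callables standing in for your HTTP client and your document parsing API of choice.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PdfLinkFinder(HTMLParser):
    """Collect absolute URLs of links that point to PDF files."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href and href.lower().endswith(".pdf"):
            self.links.append(urljoin(self.base_url, href))


def find_pdf_links(html: str, base_url: str) -> list:
    finder = PdfLinkFinder(base_url)
    finder.feed(html)
    return finder.links


def run_pipeline(portal_html: str, base_url: str, download, parse_document) -> list:
    """Scraping stage finds the files; parsing stage turns each into structured data."""
    return [
        parse_document(download(link))
        for link in find_pdf_links(portal_html, base_url)
    ]
```

Keeping the two stages behind separate callables means a change in the portal's HTML only affects link discovery, while the parsing side stays untouched.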

Hybrid setups are helpful because they respect the strengths of each method. Parsing APIs excel at structured, schema-driven outputs from documents, while scraping APIs provide visibility into web-native data that is otherwise unavailable. By using both, teams can reduce manual work and achieve broader automation across their operations.

Is Parseur A Document Parsing API Or A Web Scraping API?

Parseur is a powerful document and email parsing API that turns unstructured documents into structured JSON data. Unlike a web scraping API, which extracts information directly from websites, Parseur focuses on the documents and emails you or your users already own. This makes it a stable and scalable solution for automating workflows without the risks of website changes, scraping restrictions, or rendering issues. With Parseur, you can easily enhance processes such as invoice automation, receipt tracking, purchase order handling, or customer form processing.

What this means in practice

- What Parseur does: It ingests emails, PDFs, images, and office files and returns structured JSON with key-value fields and line-item tables. Data can be delivered via webhooks or accessed directly through the API.

- Data handling approach: Parseur operates strictly as a processor under your control. It supports data processing agreements (DPAs), provides a transparent list of subprocessors, allows configurable retention and deletion policies, encrypts data in transit and at rest, and secures delivery with signed webhooks.

- Best fit: Parseur is ideal for teams whose documents primarily arrive by email, such as invoices, receipts, purchase orders, or financial statements, and who need fast, reliable structured data extraction with minimal coding effort.
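For readers unfamiliar with signed webhooks, the general technique looks like the sketch below. This is not Parseur's documented scheme: the use of HMAC-SHA256, a hex-encoded signature, and a shared secret are assumptions that reflect a common convention, so check the provider's documentation for the actual header name and algorithm.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_valid_signature(secret: bytes, body: bytes, received_signature: str) -> bool:
    """Compare signatures in constant time to avoid timing side channels."""
    return hmac.compare_digest(sign(secret, body), received_signature)
```

The receiver recomputes the signature from the raw body and rejects the request if it does not match, which prevents third parties from posting forged payloads to the webhook URL.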

Why Parseur API Stands Out

The key advantage of Parseur API compared to other solutions is that it combines both an API and a web application. Developers can integrate the API directly into their applications, while Customer Support and Operations teams can use the web app to monitor, review, and improve parsing results without additional development.

This dual setup saves developers from building their own monitoring and management tools, which are often time-consuming and complex to maintain. In the web app, users can define their JSON schema and data fields in just a few clicks, adjust instructions on the fly, and validate extracted data. This flexibility ensures that technical and non-technical teams collaborate efficiently while keeping the integration lightweight.

Unlike web scraping APIs that rely on fragile website structures, Parseur works with files you already own, providing a more dependable foundation for business-critical automation.

How Parseur Handles Data

While Parseur is not a web scraping API, it is designed to efficiently and securely process documents and emails. For teams that rely on PDFs, scanned images, or email attachments, Parseur provides a reliable way to transform those files into structured JSON that can be integrated into workflows at scale.

Parseur's strong commitment to data security, privacy, and compliance makes it stand out. Businesses can confidently use Parseur knowing their information is handled responsibly and in line with industry best practices and strict global standards.

Key aspects of Parseur’s data management

Purpose-built for documents and emails

Parseur ingests PDFs, images, and email content, then delivers clean, structured JSON through webhooks or API calls. This enables teams to automate invoice management, purchase orders, or email-to-database workflows without heavy custom coding.

You stay in control of your data

You own the data you send to Parseur. Parseur only processes information under your explicit instructions, and you can configure your own data retention policy, down to as little as one day. A Process then Delete feature lets you immediately remove documents once parsing is complete.

Where data is stored

All Parseur data is securely stored in the European Union (Netherlands) within a highly secure data center powered by Google Cloud Platform (GCP). GCP itself is ISO 27001 certified. See more details here.

Security and encryption practices

All data is encrypted at rest using AES-256 and in transit using TLS v1.2 or above. Deprecated transport layers (SSLv2, SSLv3, TLS 1.0, TLS 1.1) are fully disabled. Parseur leverages Let’s Encrypt SSL Certificates, the global industry standard, to secure communications between Parseur servers, third-party apps, and your browser.

Infrastructure monitoring and penetration testing

Parseur continuously monitors its infrastructure and dependencies, applying patches when vulnerabilities are discovered. Independent third-party companies also perform regular penetration testing to validate security against frameworks like OWASP Top 10 and SANS 25. Enterprise customers can request full pentest reports. In 2025, Parseur also received an official Astra Pentest Certificate, further proving its cybersecurity resilience.

Password security and account protection

Parseur never stores raw passwords. Instead, it uses a PBKDF2 algorithm with SHA-256 hashing, combined with a 512-bit salt and 600,000 iteration cycles, well above NIST recommendations, to secure account access.
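The parameters above map directly onto Python's standard `hashlib.pbkdf2_hmac`. The sketch below only illustrates the stated settings (SHA-256, a 512-bit salt, 600,000 iterations); it is not Parseur's code, and the function names and storage format are assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000
SALT_BYTES = 64  # 512 bits


def hash_password(password: str, salt: bytes = None) -> tuple:
    """Derive a key from the password; generates a fresh random salt if none is given."""
    if salt is None:
        salt = os.urandom(SALT_BYTES)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected_digest: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Only the salt and the derived digest are stored; the high iteration count makes each guess expensive for an attacker who obtains them.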

Operational reliability and SLA

Parseur’s target uptime is 99.9% or higher, with retry and backoff mechanisms ensuring no data loss during outages. Email collection platforms retry for up to 24 hours, and dual sending mechanisms provide redundancy. Enterprise plans can reach 99.99% uptime with additional infrastructure guarantees. Check historical uptime here.

GDPR and privacy-first approach

Parseur is entirely GDPR compliant and acts as a processor under your control. You remain the data controller, with complete ownership of your documents. Parseur never sells or shares your data. Team members only access your data if you explicitly request support, and all staff receive ongoing GDPR and data protection training. Learn more about Parseur and GDPR.

Incident response and breach notifications

In the unlikely event of a data breach, Parseur has a strict policy of notifying customers within 48 hours, ensuring full transparency and compliance with privacy laws. You can also review Parseur’s official Security and Privacy Overview here.

Legal And Compliance At A Glance

Legal and compliance considerations are central when choosing between document parsing and web scraping APIs. Both approaches involve handling data, but the obligations differ depending on the source and context.

Organizations must ensure they have a lawful basis for processing documents. This usually involves agreements with the data owner or provider. It also means defining roles under data protection regulations, such as controller and processor, establishing a Data Processing Agreement, and implementing clear retention policies. Document parsing workflows should also account for breach notification obligations and data minimization practices.

The legal landscape for web scraping is more complex. While scraping public data can be permissible in some jurisdictions, many websites explicitly prohibit it through their terms of service or robots.txt files. Circumventing paywalls, access controls, or anti-bot measures can increase legal and compliance risks. Organizations using web scraping should always consult legal counsel to ensure their practices align with regulations and contractual obligations.

Cross-border data transfers add another layer of complexity. If your workflows involve personal data from the European Union or other regulated regions, you will need a compliant transfer mechanism to meet legal requirements.

Final Thoughts: Choosing The Right API For Your Data

Document parsing and web scraping APIs play valuable roles in automating data collection, but they serve very different needs. Parsing is best when working with documents you own, such as invoices, statements, or emails.

According to Experlogix, document automation can reduce document processing time by up to 80%, highlighting the significant efficiency gains businesses can unlock with document parsing APIs.

Scraping is the right fit when the data you need lives on public websites, such as product catalogs or price listings. In some workflows, teams use scraping to collect files and parsing to turn them into structured outputs.

The key takeaway is to choose based on your source. A document parsing API will save you time and ensure accuracy if your data arrives as PDFs, scans, or emails. If your data lives on web pages, then a scraping API may be the right option. For teams handling multiple sources, combining both strategies provides a complete solution.

Frequently Asked Questions

Many readers have common questions when comparing document parsing and web scraping. Below are answers to some of the most frequently asked queries to help clarify their differences and practical use cases.

Is document parsing the same as web scraping?

No. Document parsing works with files such as PDFs, scanned images, or emails you already own or have received, while web scraping extracts data from websites by analyzing HTML or rendered content.

Is Parseur a web scraping API tool?

No. Parseur is a document and email parsing API, not a web scraping tool. It does not crawl or fetch web pages. Instead, it helps you transform documents you own, such as emails, PDFs, images, or office files, into clean, structured JSON. This makes it ideal for workflows like processing invoices, receipts, and purchase orders without building complex internal tools.

Is web scraping legal?

It depends on the context. Scraping public data is sometimes permissible, but websites often outline restrictions in their terms of service or robots.txt file. Always review these documents and consult legal counsel before proceeding.

When should I avoid scraping?

Scraping should be avoided when data is behind paywalls, subject to strict access controls, or explicitly prohibited by the site’s terms of service. Attempting to bypass restrictions can create compliance and legal risks.
