Key Takeaways

- Unless the extraction pipeline is carefully designed, data extraction can produce messy outputs that need validation and post-processing.

- A reliable pipeline should include schema validation, normalization, text cleanup, table fixes, deduplication, and continuous QA checks.

- Tools like JSON Schema, Pydantic, Pandas, and Great Expectations make post-processing more robust and easier to automate.

- Parseur API helps capture and structure data quickly, so teams can focus on quality and analytics.

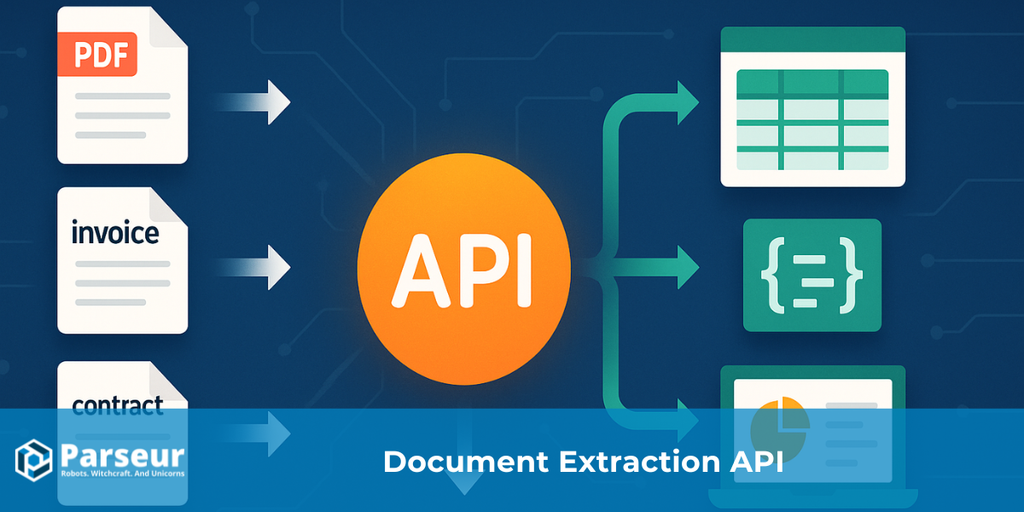

Data cleaning techniques are methods for correcting, standardizing, and validating the raw data returned by APIs. Even though extraction tools convert unstructured files like PDFs, images, or emails into structured formats such as JSON or CSV, the results often contain inconsistencies, null values, incorrect types, duplicates, or formatting errors. Cleaning ensures the dataset matches the intended schema and is reliable for reporting, analytics, and downstream workflows.

A recent case study from DataXcel revealed that 14.45% of extracted telephone records were invalid or inactive, underscoring the importance of implementing strong data cleaning practices to mitigate such errors and ensure the quality of extracted data.

APIs that extract data from PDFs, images, or emails usually return structured formats like JSON or CSV. While this makes raw information more usable, the output is rarely perfect. Teams often encounter missing values, inconsistent headers, mixed data types, duplicates, or malformed dates. Without cleaning, these errors can compromise reports, analytics, and financial decisions.

This guide walks through a practical playbook to turn messy extractions into reliable datasets: validate, standardize, enrich, test, and log. For teams handling email and PDF attachments, tools like Parseur simplify capture so you can focus on cleaning and quality.

For a deeper look at how data extraction APIs work from start to finish, check out our complete guide: What Is a Data Extraction API for Documents?

Types Of Data Cleaning Techniques

After extracting data using a data extraction API, the raw outputs often contain inconsistencies, missing values, formatting errors, or duplicates. Even though APIs help convert unstructured files like PDFs, images, or emails into structured formats like JSON or CSV, the data still requires careful cleaning to be reliable.

A study by Harvard Business Review found that only 3% of companies' data meets basic quality standards, and 47% of newly created data records have at least one critical error. These errors have a real impact, as Gartner projects that data quality issues cost the average business $15 million annually in losses.

Implementing effective data cleaning techniques is essential to ensure your data is accurate, consistent, and ready for analysis. Here are some common techniques:

Validation and Error Checking

Verify that the extracted data matches the expected formats, such as dates, numbers, or emails. This prevents errors in analytics or reporting and ensures the accuracy of your data extraction API's outputs.

Standardization

Convert data into consistent formats, such as normalizing phone numbers, addresses, or date formats, to make datasets easier to integrate and use.

Handling Missing Values

Depending on the dataset's purpose, address null or missing values by filling gaps, interpolating, or removing incomplete records.

Deduplication

Remove duplicate entries that may result from repeated API calls or overlapping sources, improving dataset accuracy and reliability.

Data Enrichment

To enhance the usability of extracted data, you can add context or supplemental information, such as geographic details or categorizations.

Format and Type Correction

To ensure consistency across your dataset, fix misformatted values, convert strings to numbers, correct misspellings, or standardize currencies.

Logging and Auditing

Track all cleaning operations to monitor the quality of your API extractions and maintain data integrity over time.

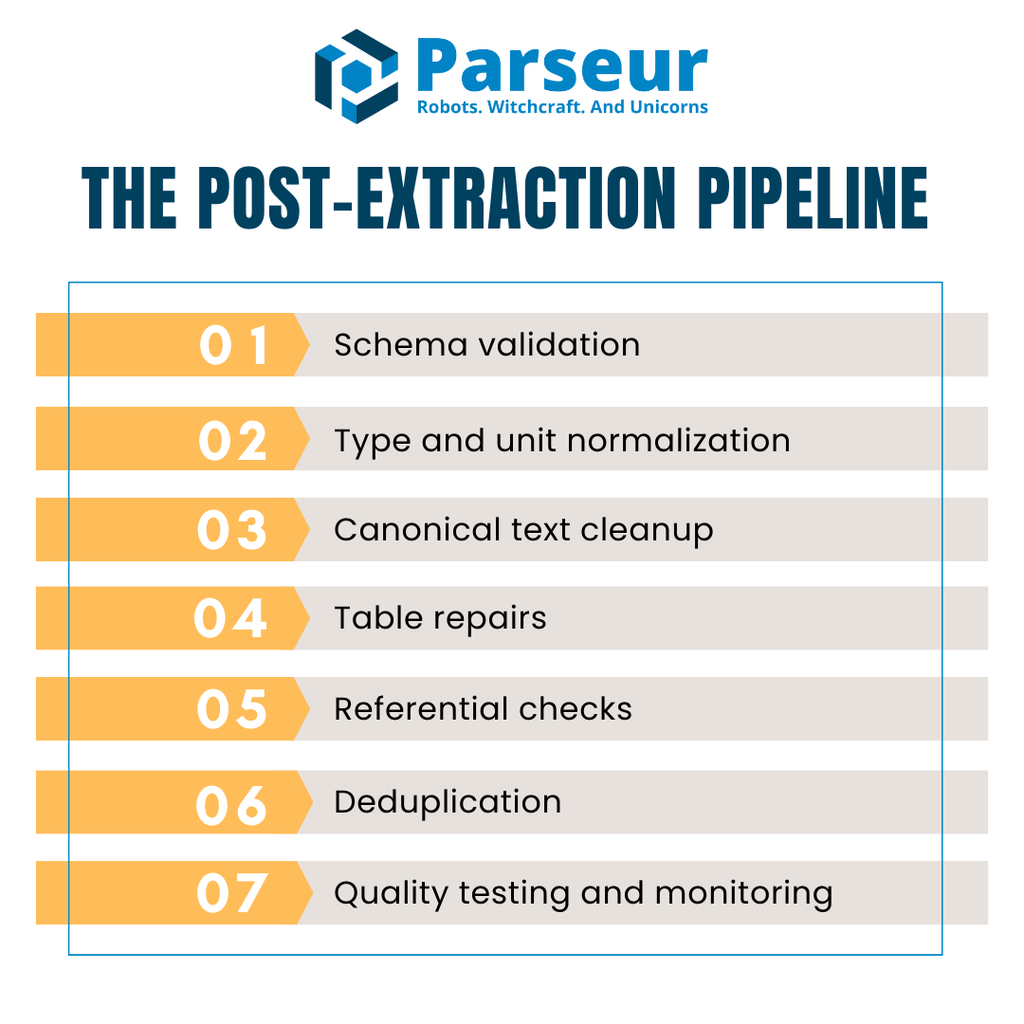

The Post-Extraction Pipeline (Overview)

Once data is pulled from an API, it is rarely ready for direct use in analytics or reporting. Raw outputs often contain missing fields, mismatched types, inconsistent tables, or duplicate values. To avoid passing these issues downstream, teams need a structured pipeline that can be repeated for every batch of extracted data.

A practical post-extraction pipeline generally includes seven primary stages:

- Schema validation – Confirm that incoming JSON or CSV matches the expected structure before processing anything else.

- Type and unit normalization – Fix data types, handle missing values, and apply consistent units or formats.

- Canonical text cleanup – Standardize strings, normalize casing, and handle Unicode inconsistencies.

- Table repairs – Unify headers, align line items, and reconcile totals in multi-line invoices or receipts.

- Referential checks – Validate relationships across datasets, such as vendors, currencies, or tax rules.

- Deduplication – Identify and remove duplicate records without discarding legitimate repeats.

- Quality testing and monitoring – Run automated checks so errors are flagged early and don’t corrupt production data.

For performance, many teams keep data in a columnar representation like Apache Arrow or Parquet while cleaning. This improves memory efficiency and processing speed, especially when dealing with large invoice or transaction datasets.

Think of the flow as a swimlane: API → Validation → Cleaning → QA → Warehouse. With that structure in place, organizations can enforce consistency, reduce costs, and prevent data quality surprises.

Step 1: Validate Against A Schema (Stop Garbage Early)

The first step in cleaning data after API extraction is schema validation. This ensures that the information you receive is machine-readable and matches your expected structure. Without this step, malformed data can slip into your system and cause failures later in the pipeline.

One of the most widely used standards for this task is JSON Schema (Draft 2020-12). It is portable, tool-agnostic, and supported by a large ecosystem of libraries. JSON Schema allows you to define what valid data should look like, including field types, required attributes, and format rules. For example, you can specify that invoiceDate must follow the ISO 8601 format or that total must always be a non-negative number.

In Python projects, Pydantic v2 efficiently validates data at runtime while automatically generating JSON Schema definitions. Creating models for invoices or line items allows developers to enforce structure and catch invalid data instantly. A simple model might check that vendorName is a string, invoiceNumber matches a regex pattern, and currency belongs to a specific set of codes (USD, EUR, GBP).

Validation rules should go beyond types. Add constraints such as enumerations for allowed values, regex for formats like tax IDs, and numeric ranges for totals or quantities. These guardrails prevent subtle errors from moving forward.

Finally, plan how to handle invalid records. Some teams prefer to reject them immediately, while others quarantine them in a dead-letter queue for later review. This fail-fast approach ensures only trustworthy data enters the rest of the cleaning pipeline.
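The rules above (enumerations, regex formats, numeric ranges, and a quarantine path) can be sketched in plain Python. This is a minimal standard-library stand-in for what JSON Schema or Pydantic would enforce, not a replacement for either. The `INV-` number pattern, the field names, and the in-memory `dead_letter` list are assumptions made for illustration.

```python
import re
from datetime import date

ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}
INVOICE_NUMBER_PATTERN = re.compile(r"^INV-\d{3,}$")  # hypothetical number format


def validate_invoice(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    if not isinstance(record.get("vendorName"), str) or not record["vendorName"].strip():
        errors.append("vendorName must be a non-empty string")
    number = record.get("invoiceNumber")
    if not isinstance(number, str) or not INVOICE_NUMBER_PATTERN.match(number):
        errors.append("invoiceNumber does not match the expected pattern")
    if record.get("currency") not in ALLOWED_CURRENCIES:
        errors.append("currency is not an allowed code")
    try:
        date.fromisoformat(str(record.get("invoiceDate")))
    except ValueError:
        errors.append("invoiceDate is not an ISO 8601 date")
    total = record.get("total")
    if isinstance(total, bool) or not isinstance(total, (int, float)) or total < 0:
        errors.append("total must be a non-negative number")
    return errors


def route(records):
    """Split records into accepted rows and a dead-letter list kept for review."""
    accepted, dead_letter = [], []
    for record in records:
        problems = validate_invoice(record)
        if problems:
            dead_letter.append({"record": record, "errors": problems})
        else:
            accepted.append(record)
    return accepted, dead_letter


good = {"vendorName": "Acme, Inc.", "invoiceNumber": "INV-001",
        "currency": "USD", "invoiceDate": "2025-08-15", "total": 87.5}
bad = {"vendorName": "Acme, Inc.", "invoiceNumber": "001",
       "currency": "usd", "invoiceDate": "2025/08/15", "total": -1}

accepted, dead_letter = route([good, bad])
for entry in dead_letter:
    print(entry["errors"])
```

Keeping the error messages next to the quarantined record means a reviewer can see why a record was rejected without rerunning the validation.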

Step 2: Fix Types, Nulls, And Units

Once data passes schema validation, the next priority is correcting data types, handling missing values, and normalizing units. Even when a data parsing API outputs structured JSON or CSV, it is common to see numbers stored as strings, inconsistent date formats, or null fields scattered across rows. Without fixing these issues, downstream analytics and reporting can become unreliable.

With the Parseur API, users can automatically extract data from invoices, receipts, and emails into clean JSON with minimal setup. Its real-time webhooks push structured data directly into ERPs, CRMs, or databases, reducing the manual cleanup required before applying validation rules. This not only speeds up the cleaning pipeline but also prevents many common errors from appearing in the first place.

The first step is to coerce values into the correct data type. Convert fields like quantity or unitPrice into numeric types and parse dates such as invoiceDate and dueDate into standard ISO formats. Boolean values like paid or approved should also be normalized to true or false.

Next, decide how to handle missing values. There are three main strategies:

- Drop: remove incomplete rows if they are non-critical.

- Fill: impute values with defaults, averages, or placeholders.

- Flag: mark missing fields for review instead of silently replacing them.

The chosen rule should be documented per field to remain consistent across the pipeline.

In Python, libraries like Pandas make this process straightforward. Functions such as to_numeric(errors="coerce"), to_datetime(), fillna(), and dropna() provide flexible ways to standardize data. These transformations ensure every column has the expected format and that nulls are handled deterministically.
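As a rough illustration of those Pandas functions working together, the sketch below coerces types and then applies the flag, fill, and drop strategies. The sample rows, the `poNumber` field, the `needsReview` column, and the `"UNKNOWN"` placeholder are all invented for the example; which strategy applies to which field remains a per-field decision.

```python
import pandas as pd

# Hypothetical extracted rows: everything arrives as strings, some of it unusable
raw = pd.DataFrame({
    "quantity": ["10", "3", "n/a"],
    "unitPrice": ["5.00", "12.50", "7"],
    "invoiceDate": ["2025-08-15", "2025-08-16", "not a date"],
    "poNumber": ["PO-7", None, "PO-9"],
})

clean = raw.copy()

# Coerce types; values that cannot be parsed become NaN / NaT instead of raising
clean["quantity"] = pd.to_numeric(clean["quantity"], errors="coerce")
clean["unitPrice"] = pd.to_numeric(clean["unitPrice"], errors="coerce")
clean["invoiceDate"] = pd.to_datetime(
    clean["invoiceDate"], format="%Y-%m-%d", errors="coerce"
)

# Flag: mark rows whose critical fields failed to parse
clean["needsReview"] = clean[["quantity", "invoiceDate"]].isna().any(axis=1)

# Fill: use an explicit placeholder for an optional field
clean["poNumber"] = clean["poNumber"].fillna("UNKNOWN")

# Drop: keep only rows that have every critical value
usable = clean.dropna(subset=["quantity", "invoiceDate"])

print(clean)
print(usable)
```

Flagging before dropping keeps a record of which rows were removed and why, which is usually worth the extra column.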

Fixing types, nulls, and units early creates a clean foundation for subsequent steps like text normalization, table repair, and referential checks.

Step 3: Canonicalize Text (Names, Casing, Unicode)

Once numeric and date fields are corrected, the focus shifts to cleaning text values. Text data is often messy, with variations in casing, spacing, or encoding that can prevent accurate grouping or matching. Without standardization, the same vendor might appear under multiple names, which fragments analytics.

The first step is whitespace and punctuation cleanup. Trim leading or trailing spaces, collapse multiple spaces into one, and remove stray characters. Next, apply consistent casing rules. For example, company names might be formatted with title(), while invoice status fields could be standardized to uppercase for easy comparison.

Handling Unicode normalization is equally important. The same visible character can be encoded in more than one way (for example, “é” as a single code point or as “e” plus a combining accent), so two visually identical strings can register as mismatches. Normalizing text to NFKC form ensures accents, symbols, and punctuation are stored consistently. For global datasets, accents may be stripped when comparing vendor names to avoid duplicate entries like “Café” versus “Cafe.”

Finally, build a canonicalization list for high-value fields like supplier or vendor names. This can start with light rules such as replacing abbreviations (“Inc.” vs “Incorporated”) and expand toward machine learning models for entity resolution.

Canonicalizing text ensures comparability across sources and reduces the risk of duplicate or fragmented records downstream.
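One way to sketch these text rules with the standard library is shown below. The `SUFFIX_ALIASES` table and the function names are hypothetical, and the accent-stripped key is meant only for matching; the cleaned display value keeps its accents.

```python
import re
import unicodedata

# Hypothetical canonicalization list for common company suffixes
SUFFIX_ALIASES = {"incorporated": "inc", "corporation": "corp", "limited": "ltd"}


def clean_text(value: str) -> str:
    """Normalize to NFKC, collapse runs of whitespace, and trim the ends."""
    value = unicodedata.normalize("NFKC", value)
    return re.sub(r"\s+", " ", value).strip()


def match_key(name: str) -> str:
    """Build a comparison key: case-folded, accent-free, punctuation-free."""
    text = clean_text(name).casefold()
    decomposed = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", "", text)
    words = [SUFFIX_ALIASES.get(word, word) for word in text.split()]
    return " ".join(words)


print(clean_text("  Café   Incorporated "))
print(match_key("  Café   Incorporated ") == match_key("CAFE, Inc."))
```

Storing both the cleaned name and the match key lets reports show the original spelling while grouping still happens on the key.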

Step 4: Repair Tables (Line Items That Add Up)

After API extraction, line-item tables often require the most repair. Headers may be split across multiple rows, cells rotated from scanned PDFs, or values merged in ways that make analysis difficult. Cleaning begins with ensuring every table has a single, consistent header row that maps clearly to your schema.

Once the structure is set, normalize the units so calculations remain consistent across invoices. For example, convert kg and lbs into a standard unit, or reconcile currencies before comparing totals. Recomputing each row’s amount = quantity × unitPrice is a simple yet critical step to verify integrity. To further protect accuracy, always check that the sum of line items matches the invoice total within a small tolerance. This ensures errors like missing rows or duplicated entries are flagged immediately.
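The recompute-and-reconcile check can be sketched as follows. It uses `Decimal` rather than floats so that currency arithmetic stays exact. The function names and the 0.01 tolerance are assumptions; the line items mirror the sample invoice used later in this guide.

```python
from decimal import Decimal


def line_amount(item: dict) -> Decimal:
    """Recompute amount = quantity × unitPrice for one row."""
    return Decimal(str(item["quantity"])) * Decimal(str(item["unitPrice"]))


def reconcile(line_items, invoice_total, tolerance=Decimal("0.01")):
    """Compare the sum of line items with the stated total, within a tolerance."""
    computed = sum((line_amount(item) for item in line_items), Decimal("0"))
    difference = computed - Decimal(str(invoice_total))
    return {
        "computed": computed,
        "difference": difference,
        "ok": abs(difference) <= tolerance,
    }


items = [
    {"description": "Widget A", "quantity": "10", "unitPrice": "5.00"},
    {"description": "Widget B", "quantity": "3", "unitPrice": "12.50"},
]

result = reconcile(items, "87.50")
missing_row = reconcile(items[:1], "87.50")  # simulates a dropped line item
print(result["ok"], missing_row["ok"])
```

Returning the difference alongside the pass/fail flag makes it easier to tell a rounding issue from a missing row.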

Exporting tables to CSV adds another layer of complexity. Hidden delimiters, stray quote characters, or encoding mismatches can cause columns to break. A reliable pattern is to load files with flexible tools such as DuckDB, which allows you to set explicit delimiters, quote characters, and encodings. Using options like all_varchar lets you load tricky files safely, then cast columns into the correct types afterward.
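The same load-as-text-then-cast pattern can be illustrated without DuckDB, using Python's built-in `csv` module. The semicolon delimiter, byte-order mark, and decimal comma in the sample are assumptions about a hypothetical export, chosen because they commonly break naive parsers.

```python
import csv
import io

# Hypothetical export: semicolon-delimited, UTF-8 with a BOM, decimal commas
raw_bytes = (
    'description;quantity;unitPrice\r\n"Widget; large";10;5,00\r\n'
).encode("utf-8-sig")


def load_rows(data: bytes) -> list[dict]:
    """Parse with explicit encoding, delimiter, and quote character."""
    text = data.decode("utf-8-sig")  # explicit encoding; also strips the BOM
    reader = csv.DictReader(
        io.StringIO(text, newline=""), delimiter=";", quotechar='"'
    )
    return list(reader)  # every value is still a string at this point


def cast_row(row: dict) -> dict:
    """Cast columns to their real types only after the file has loaded safely."""
    return {
        "description": row["description"],
        "quantity": int(row["quantity"]),
        "unitPrice": float(row["unitPrice"].replace(",", ".")),
    }


rows = [cast_row(row) for row in load_rows(raw_bytes)]
print(rows)
```

Separating loading from casting means a bad value fails in `cast_row`, where it can be attributed to a specific column, rather than silently shifting columns during parsing.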

These steps transform messy extracted tables into structured, reliable rows that analytics and finance teams can trust. The goal is not only to make numbers add up but also to guarantee that no silent errors sneak into your reports.

Step 5: Referential And Business Rules

Even when individual records look correct, problems often appear when data is compared across tables. Referential checks ensure that values are consistent and that business rules are respected before data reaches your warehouse.

For example, every vendorId in the invoices table should exist in the master vendors table. Currency codes should only use those allowed for your company’s operations, and tax rates must match the jurisdiction they are filed under. Catching these issues early prevents downstream errors like failed joins, miscalculated reports, or compliance violations.

Modern data teams often enforce these rules directly in transformation layers. dbt tests make it easy to codify constraints such as:

- unique (no duplicate invoice numbers),

- not_null (critical fields like vendorId cannot be empty),

- accepted_values (currency must be USD, EUR, etc.),

- relationships (foreign keys like vendorId must exist in the vendors table).

Encoding referential integrity into automated tests gives teams defense-in-depth: problems surface immediately, not weeks later in financial reviews. This approach turns manual checks into scalable, repeatable processes that improve confidence in reporting and compliance.
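For pipelines that run before data reaches a transformation layer, the same four constraints can be approximated in plain Python. This sketch only mirrors the intent of those tests; it is not how dbt implements them. The vendor IDs, currency list, and sample invoices are invented.

```python
from collections import Counter

vendors = {"V-001", "V-002"}          # hypothetical master vendor IDs
ACCEPTED_CURRENCIES = {"USD", "EUR"}  # hypothetical allowed currencies

invoices = [
    {"invoiceNumber": "INV-001", "vendorId": "V-001", "currency": "USD"},
    {"invoiceNumber": "INV-002", "vendorId": "V-999", "currency": "USD"},
    {"invoiceNumber": "INV-002", "vendorId": None, "currency": "JPY"},
]


def check_rules(invoices, vendors):
    """Return (invoiceNumber, rule) pairs for every violated constraint."""
    violations = []
    counts = Counter(inv["invoiceNumber"] for inv in invoices)
    for inv in invoices:
        number = inv["invoiceNumber"]
        if counts[number] > 1:
            violations.append((number, "unique"))
        if inv["vendorId"] is None:
            violations.append((number, "not_null"))
        elif inv["vendorId"] not in vendors:
            violations.append((number, "relationships"))
        if inv["currency"] not in ACCEPTED_CURRENCIES:
            violations.append((number, "accepted_values"))
    return violations


violations = check_rules(invoices, vendors)
for number, rule in violations:
    print(number, rule)
```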

Step 6: Deduplication And Record Linkage

Duplicate records can silently erode trust in your data. They inflate totals, trigger double payments, or cause confusion during audits. Cleaning after API extraction requires a strategy that removes duplicates without discarding legitimate repeats.

The first step is to define deterministic keys. For invoices, a safe combination might be supplierName, invoiceNumber, invoiceDate, amount, and currency. If two rows share all these values, they are almost certainly duplicates.

Beyond exact matches, duplicates often hide in near-identical records. A fuzzy matching window can help — for example, invoices from the same supplier within seven days and one percent of the same amount can be flagged for review. This bucketed approach avoids accidental deletions but still catches suspicious entries.

It is also important to distinguish between syntactic matching (comparing text strings directly) and semantic matching (understanding that “Acme Corp.” and “ACME Corporation” are the same entity). Tools like OpenRefine use clustering methods to group similar records, making it easier to suggest duplicates for human review.

Combining deterministic rules with fuzzy or semantic checks creates a balanced deduplication process that maximizes accuracy while minimizing the risk of deleting legitimate records.
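A minimal sketch of that combination follows: exact duplicates on the deterministic key are dropped, while near matches (same supplier and currency, within seven days and one percent of the amount) are only flagged for review. The function names and sample invoices are hypothetical, and the pairwise comparison is fine for small batches but would need bucketing by supplier at larger volumes.

```python
from datetime import date


def exact_key(inv):
    """Deterministic key: rows sharing all of these values count as duplicates."""
    return (inv["supplierName"], inv["invoiceNumber"], inv["invoiceDate"],
            inv["amount"], inv["currency"])


def is_near_duplicate(a, b, max_days=7, max_relative_diff=0.01):
    """Same supplier and currency, close in date, and close in amount."""
    if a["supplierName"] != b["supplierName"] or a["currency"] != b["currency"]:
        return False
    days_apart = abs((a["invoiceDate"] - b["invoiceDate"]).days)
    larger = max(abs(a["amount"]), abs(b["amount"]))
    relative_diff = abs(a["amount"] - b["amount"]) / larger if larger else 0.0
    return days_apart <= max_days and relative_diff <= max_relative_diff


def deduplicate(invoices):
    """Drop exact duplicates; return near matches as pairs for human review."""
    seen, unique, review_pairs = set(), [], []
    for inv in invoices:
        key = exact_key(inv)
        if key in seen:
            continue
        seen.add(key)
        for kept in unique:
            if is_near_duplicate(kept, inv):
                review_pairs.append((kept["invoiceNumber"], inv["invoiceNumber"]))
        unique.append(inv)
    return unique, review_pairs


def make(number, day, amount):
    return {"supplierName": "Acme", "invoiceNumber": number,
            "invoiceDate": day, "amount": amount, "currency": "USD"}


batch = [
    make("INV-001", date(2025, 8, 15), 87.50),
    make("INV-001", date(2025, 8, 15), 87.50),   # exact duplicate
    make("INV-001A", date(2025, 8, 18), 87.00),  # near duplicate
    make("INV-050", date(2025, 9, 30), 87.50),   # legitimate repeat
]

unique, review_pairs = deduplicate(batch)
print(len(unique), review_pairs)
```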

Step 7: Automate Data Quality Checks

Data quality is not a one-time task. Even after thorough cleaning, new extractions can introduce errors at any point. Automating quality checks ensures that problems are caught consistently before they affect reports or decision-making.

One reliable option is Great Expectations (GX). GX allows teams to declare rules, called Expectations, for their datasets. For example, you can verify that invoice numbers match a regex, quantities fall within a valid range, or row counts stay within expected limits. These tests can run as part of a CI/CD pipeline, giving instant feedback if extraction quality drops.

For Python-native pipelines, Pandera provides a lightweight way to enforce data types, ranges, and nullability directly on Pandas dataframes. With a few lines of code, teams can reject invalid rows or raise warnings when data deviates from expected patterns.

Automation is only effective if results are visible. Push test outcomes to dashboards or alerting tools so stakeholders know the percentage of invalid rows, pass/fail counts, and error details. This closes the loop and helps teams prioritize fixes quickly.

Making data quality measurable and continuous helps organizations ensure that every cleaned dataset remains accurate, consistent, and production-ready.
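To show the shape of such checks without depending on a specific framework, here is a small standard-library runner. It is not the Great Expectations or Pandera API; the rule names, thresholds, and report fields are assumptions, chosen to produce the metrics mentioned above (pass/fail counts and the percentage of invalid rows).

```python
import re

# Hypothetical rules: each maps a name to a per-row predicate
EXPECTATIONS = {
    "invoiceNumber matches pattern":
        lambda r: re.fullmatch(r"INV-\d+", str(r.get("invoiceNumber"))) is not None,
    "quantity between 1 and 1000":
        lambda r: isinstance(r.get("quantity"), int) and 1 <= r["quantity"] <= 1000,
    "unitPrice is non-negative":
        lambda r: isinstance(r.get("unitPrice"), (int, float)) and r["unitPrice"] >= 0,
}


def run_checks(rows, min_rows=1, max_rows=10_000):
    """Run every rule on every row and summarize the results."""
    report = {"row_count_ok": min_rows <= len(rows) <= max_rows, "failures": {}}
    invalid = set()
    for name, check in EXPECTATIONS.items():
        failed = [i for i, row in enumerate(rows) if not check(row)]
        report["failures"][name] = len(failed)
        invalid.update(failed)
    report["invalid_row_pct"] = round(100 * len(invalid) / len(rows), 1) if rows else 0.0
    report["passed"] = report["row_count_ok"] and not invalid
    return report


rows = [
    {"invoiceNumber": "INV-001", "quantity": 10, "unitPrice": 5.0},
    {"invoiceNumber": "INV-002", "quantity": 0, "unitPrice": 12.5},
    {"invoiceNumber": "BAD", "quantity": 3, "unitPrice": -1.0},
    {"invoiceNumber": "INV-004", "quantity": 2, "unitPrice": 1.0},
]

report = run_checks(rows)
print(report)
```

In a CI job, a script like this could exit with a non-zero status when `report["passed"]` is false, which is enough to block a deployment or trigger an alert.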

Performance And Storage Tips (So Data Cleaning Does Not Become the Bottleneck)

Cleaning data after extraction is essential, but it should not slow pipelines down. When dealing with high volumes of invoices, receipts, or transaction logs, poorly optimized processes can create latency, inflate costs, and frustrate downstream teams. The goal is to enforce quality without sacrificing speed.

Studies from MDPI have shown that data cleaning can consume up to 80% of a data professional’s time, highlighting its significant impact on overall data processing efficiency. This underscores the importance of optimizing data cleaning processes to maintain pipeline performance and efficiency.

Here are some practical ways to keep performance in check:

- Use columnar storage formats — Formats like Apache Arrow and Parquet make data cleaning faster by enabling vectorized operations and reducing memory usage. They also integrate smoothly with analytics engines and Python data libraries.

- Batch and parallelize workloads — Instead of processing records one at a time, run batch jobs or async tasks from your extractor. This allows you to process multiple files simultaneously and minimize wait times.

- Leverage warehouse-native tools — For very large CSVs or complex joins, push heavy parsing into DuckDB or directly into your data warehouse. This offloads processing from your local pipeline and speeds up transformations.

- Cache intermediate results — If certain checks or normalizations need to be rerun, caching avoids re-processing the same records repeatedly.

- Monitor system usage — Track CPU, memory, and I/O utilization to identify bottlenecks before they impact service-level expectations.

Efficient cleaning pipelines balance accuracy with scalability. Adopting columnar formats, batching, and smart use of compute resources keeps validation and cleanup layers fast enough to support real-time analytics and reporting.
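As a rough sketch of the batching and caching tips, the example below splits rows into batches, cleans the batches concurrently, and caches a repeated normalization. The function names, batch size, and worker count are arbitrary. Threads mainly help when each batch waits on I/O such as fetching files; CPU-heavy cleaning would more likely call for `ProcessPoolExecutor` or a columnar engine.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=10_000)
def normalize_vendor(name: str) -> str:
    """Cached normalization: repeated vendor names are computed only once."""
    return " ".join(name.split()).upper()


def clean_batch(batch):
    """Clean one batch of rows without mutating the input."""
    return [{**row, "vendorName": normalize_vendor(row["vendorName"])} for row in batch]


def chunked(rows, size):
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def clean_all(rows, batch_size=500, workers=4):
    """Process batches concurrently; pool.map keeps the original order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        cleaned_batches = pool.map(clean_batch, chunked(rows, batch_size))
        return [row for batch in cleaned_batches for row in batch]


sample = [{"vendorName": "  acme   inc "} for _ in range(1200)]
cleaned = clean_all(sample)
print(len(cleaned), cleaned[0]["vendorName"])
```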

Security And Compliance Notes (Do Not Clean Away Controls)

Data cleaning is not just about fixing errors; it also has compliance implications. Many extracted documents contain sensitive information like banking details, tax identifiers, or employee records. Mishandling these fields during post-processing can create risks before data reaches the warehouse.

Research from Mitratech indicates that 61% of organizations experience data breaches, inefficiencies, and compliance issues arising from poor data governance. This underscores the critical importance of implementing strong data cleaning practices to ensure both data quality and regulatory compliance.

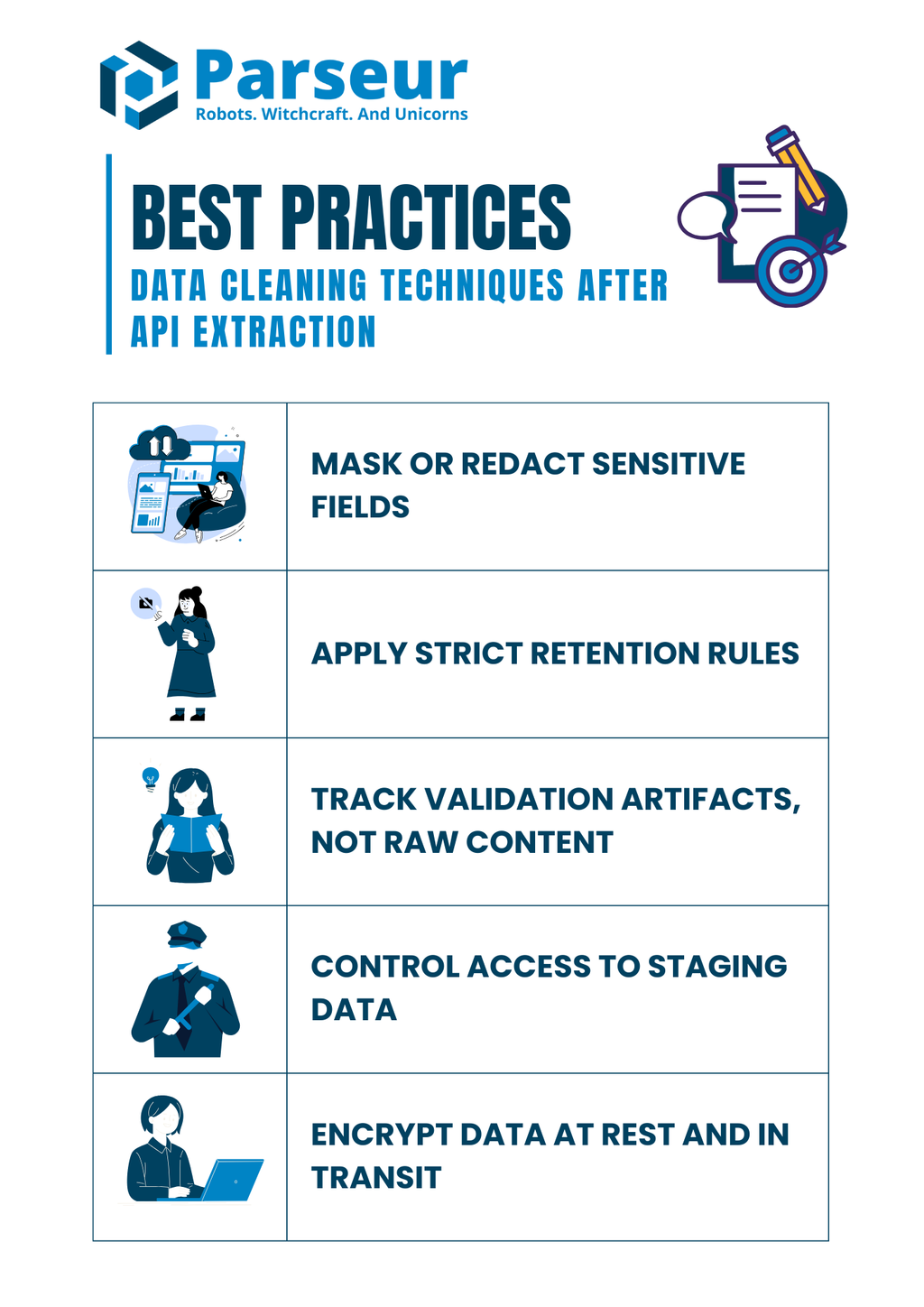

Here are the best practices to keep compliance intact while cleaning:

- Mask or redact sensitive fields — Do not log raw identifiers such as Social Security numbers, credit card details, or account numbers. Always replace them with masked or hashed versions when storing in logs.

- Apply strict retention rules — Do not keep raw extracted files longer than necessary. Define retention windows that align with regulatory standards.

- Track validation artifacts, not raw content — Keep records of what failed and why (e.g., missing fields, invalid dates) without storing the sensitive document.

- Control access to staging data — Apply role-based permissions so only authorized users can view or modify sensitive records in staging areas.

- Encrypt data at rest and in transit — Whether temporary or permanent, ensure staging databases, files, and logs are encrypted to reduce exposure.

Strong compliance practices should run in parallel with technical cleaning steps. Maintaining controls throughout the pipeline protects teams from regulatory fines and maintains customer trust.
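The masking practice can be sketched as below. Sensitive values are replaced with a masked form plus a keyed fingerprint, so log entries can still be correlated without exposing the raw value. The field names in `SENSITIVE_FIELDS`, the `_fp` suffix, and the hard-coded secret are assumptions for illustration; a real key would come from a secrets manager.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = {"iban", "taxId", "cardNumber"}  # hypothetical field names


def mask(value: str, visible: int = 4) -> str:
    """Hide everything except the last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def fingerprint(value: str, secret: bytes) -> str:
    """Keyed hash so equal values can be matched without storing them."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(record: dict, secret: bytes) -> dict:
    """Return a copy of the record that is safe to write to logs."""
    redacted = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            text = str(value)
            redacted[field] = mask(text)
            redacted[field + "_fp"] = fingerprint(text, secret)
        else:
            redacted[field] = value
    return redacted


record = {"invoiceNumber": "INV-001", "cardNumber": "4111111111111111"}
safe = redact(record, secret=b"example-only-key")
print(safe)
```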

Worked Example (Pulling The Pieces Together)

To make the process more concrete, let us walk through a simple example that ties validation, normalization, reconciliation, and testing into one pipeline. Imagine you used the Parseur API to extract invoice data from a PDF or email. Parseur delivers structured JSON straight from unstructured documents, giving you a reliable starting point for your cleaning pipeline.

Sample extracted JSON (input):

```json
{
  "invoiceNumber": "INV-001",
  "invoiceDate": "2025/08/15",
  "vendorName": "Acme, Inc.",
  "lineItems": [
    { "description": "Widget A", "quantity": "10", "unitPrice": "5.00" },
    { "description": "Widget B", "quantity": "3", "unitPrice": "12.50" }
  ],
  "total": "87.50"
}
```

Steps 1 and 2: Validate the schema with Pydantic, then normalize and reconcile with Pandas:

```python
from datetime import date
from typing import List

import pandas as pd
from pydantic import BaseModel


# Define line item model
class LineItem(BaseModel):
    description: str
    quantity: int
    unitPrice: float


# Define invoice model
class Invoice(BaseModel):
    invoiceNumber: str
    invoiceDate: date
    vendorName: str
    lineItems: List[LineItem]
    total: float


# Example raw JSON string. The date is already in ISO 8601 form here;
# a value like "2025/08/15" would need normalizing first or it fails validation.
raw_json = """
{
  "invoiceNumber": "INV-001",
  "invoiceDate": "2025-08-15",
  "vendorName": "Acme, Inc.",
  "lineItems": [
    { "description": "Widget A", "quantity": 10, "unitPrice": 5.00 },
    { "description": "Widget B", "quantity": 3, "unitPrice": 12.50 }
  ],
  "total": 87.50
}
"""

# Parse JSON into Invoice model (raises ValidationError on bad input)
invoice = Invoice.model_validate_json(raw_json)

# Normalize line items into Pandas DataFrame
df = pd.DataFrame([item.model_dump() for item in invoice.lineItems])
df["amount"] = df["quantity"] * df["unitPrice"]

# Check totals within a small tolerance to absorb rounding differences
if abs(df["amount"].sum() - invoice.total) > 0.01:
    print("Mismatch: line items do not add up to invoice total")
else:
    print("Totals match ✅")

print(df)
```

Step 3: Run data quality tests with Great Expectations:

```python
import great_expectations as gx

# Assumes the fluent API from Great Expectations 0.17/0.18;
# GX 1.x restructured these calls, so adapt to your installed version.

# Initialize Great Expectations context
context = gx.get_context()

# Load the Pandas DataFrame; in these versions this returns a Validator
validator = context.sources.pandas_default.read_dataframe(df)

# Set expectations
validator.expect_column_values_to_be_between("quantity", min_value=1, max_value=1000)
validator.expect_column_values_to_be_between("unitPrice", min_value=0, max_value=10000)
validator.expect_column_sum_to_be_between("amount", min_value=0, max_value=100000)

# Run validation and capture results
results = validator.validate()
print(results)
```

Output (cleaned):

- Invoice data is validated against a schema.

- Dates and numbers are typed correctly.

- Totals are reconciled with a tolerance check.

- Quality tests confirm ranges and structure.

This end-to-end process demonstrates how teams can take messy API outputs, validate them early, and apply deterministic cleaning before loading them into production systems.

Data cleaning is only one part of building a reliable data pipeline; the first step is getting accurate, structured data from your documents. That is where Parseur comes in. With its intuitive platform and the flexible Parseur API, you can automatically extract data from PDFs, emails, spreadsheets, and attachments, reducing the manual effort that often slows teams down. Once your data is captured, you can apply the cleaning techniques covered in this guide to ensure it is accurate, consistent, and ready for analysis.

Looking ahead, Gartner predicts that by 2026, 70% of new cloud deployments will leverage cohesive cloud data ecosystems rather than relying on manually integrated point solutions. This trend underscores the growing need for clean, structured data and seamless API-driven extraction workflows.

For teams that want to understand the bigger picture, we put together a comprehensive resource on how APIs transform document processing, including how to choose the right tool and optimize your workflows. Explore our full guide on Data Extraction APIs for Documents to see how you can confidently move from messy raw files to clean, actionable data.

Frequently Asked Questions

Before wrapping up, here are some frequently asked questions about data cleaning after API extraction. These quick answers address common pitfalls and practical concerns teams often face.

Should I drop rows with missing totals?

Prefer quarantining and investigating them rather than dropping them outright. Totals are critical financial fields, and removing them silently can skew reporting. Keeping them in a review bucket ensures transparency and proper resolution.

How do I guarantee valid JSON before cleaning?

Validate with JSON Schema or Pydantic to confirm that incoming data is machine-readable and matches expected fields. Catching malformed JSON early prevents wasted time on downstream fixes.

Can I test quality without a warehouse?

Yes. Tools like Great Expectations or Pandera allow you to enforce rules directly in Python pipelines or CI/CD workflows, so you can maintain quality even before data lands in your warehouse.

What if tables don’t add up to the invoice total?

Set a reconciliation rule to compare line-item sums against the invoice total within a tolerance range. Any mismatch should be flagged and sent for review rather than overwritten.

Are dbt tests necessary if I test upstream?

Yes. dbt tests provide an additional safety net by codifying constraints at the model layer. Even if upstream checks exist, this defense-in-depth approach prevents bad data from slipping into production analytics.

How do I handle encoding issues from CSV exports?

Always define delimiter, encoding, and quote characters explicitly during parsing. DuckDB and similar tools can help diagnose tricky files and ensure consistency when normalizing data from multiple sources.
