Large Language Models (LLMs) provide unmatched flexibility in understanding unstructured text, making them ideal for reasoning, summarization, and low-volume document tasks. However, their probabilistic nature, latency, and lack of deterministic precision make them insufficient as a standalone approach for high-volume, regulated document automation.

Key Takeaways:

- Strategic Flexibility: LLMs excel at interpreting unstructured, novel, or highly variable documents, enabling faster onboarding and reasoning-driven tasks.

- Operational Limitations: For structured, high-volume workflows, LLMs alone can lead to errors, slow processing, and compliance exposure. Deterministic systems remain essential.

- Hybrid Advantage: The most effective document automation strategies combine LLMs with specialized platforms like Parseur, leveraging AI for contextual understanding while relying on deterministic extraction for accuracy, scalability, and compliance.

The Automation Paradox

Large Language Models (LLMs) have fundamentally advanced natural language understanding. Their ability to interpret unstructured text, infer meaning, and generalize across domains has expanded what is technically possible in document automation. Tasks that once required rigid rules or extensive manual configuration can now be approached with far greater flexibility.

LLM Limitations

However, this flexibility introduces a paradox for enterprise automation. While LLMs perform well in reasoning-oriented or low-volume scenarios, benchmarks from Hyperscience show they often achieve only 66–77% exact-match accuracy on critical document tasks like invoices and bills of lading, compared to 93–98% for specialized IDP systems.

LLMs are also not designed to operate as systems of record, where deterministic accuracy, fixed schemas, and predictable performance are mandatory.

Non-deterministic outputs, variable latency due to API rate limits, and escalating inference costs, driven by token volume and GPU demand, make pure LLM-based systems difficult to deploy reliably in production environments that demand speed, consistency, and predictability.

Hybrid IDP Growth

As a result, enterprise-grade document automation increasingly requires a hybrid approach. Hybrid Intelligent Document Processing (IDP) architectures combine the adaptability of LLMs with the precision of specialized extraction engines and deterministic logic, delivering a better trade-off between quality and efficiency.

This shift reflects a growing recognition that no single technology can optimize for flexibility, accuracy, cost, and governance simultaneously. Enterprises are prioritizing architectures that explicitly separate reasoning from execution, allowing each layer to be optimized for its operational role rather than forcing LLMs to handle deterministic tasks they were not designed to perform.

According to Fortune Business Insights, the global IDP market is projected to grow from $14.16 billion in 2026 to $91.02 billion by 2034, at a 26.20% CAGR, reflecting surging demand for these reliable systems among large enterprises that handle high document volumes. In this model, LLMs are used selectively where contextual understanding adds value. At the same time, high-volume extraction, validation, and downstream automation are handled by purpose-built systems designed for reliability, cost control, and compliance.

The strategic implication is clear: LLMs are a powerful component of modern document automation, but they are not a replacement for specialized processing engines. Organizations that align each technology to its operational strengths are best positioned to scale automation without sacrificing accuracy, governance, or performance.

What Are Large Language Models (LLMs)?

Large Language Models (LLMs) are a class of machine learning models designed to understand, generate, and reason over natural language at scale. They are trained on vast corpora of text using deep neural networks, typically transformer architectures that learn statistical relationships between words, phrases, and concepts.

At a high level, LLMs work by predicting the most probable next token (word or symbol) given a sequence of prior tokens. Applied to massive, diverse datasets, this simple mechanism enables complex behaviors, including summarization, classification, question answering, translation, and contextual reasoning. In document processing, this allows LLMs to interpret free-form text, infer meaning across paragraphs, and respond flexibly to varied layouts or language styles.
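To make the next-token mechanism concrete, here is a minimal sketch in Python. The probability table is invented purely for illustration (it does not come from any real model); it contrasts always taking the most likely token with sampling from the distribution, which is where run-to-run variation comes from.

```python
import random

# Hypothetical next-token probabilities for the prompt "Invoice total: ".
# These numbers are made up for illustration only.
next_token_probs = {"1,250.00": 0.90, "1,250.80": 0.07, "1,260.00": 0.03}

def greedy_token(probs: dict) -> str:
    """Always return the single most probable token (repeatable)."""
    return max(probs, key=probs.get)

def sample_token(probs: dict, rng: random.Random) -> str:
    """Draw a token in proportion to its probability (may vary between runs)."""
    tokens, weights = zip(*probs.items())
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random()  # unseeded: repeated runs can differ
print(greedy_token(next_token_probs))
print([sample_token(next_token_probs, rng) for _ in range(5)])
```

Under these assumed probabilities, roughly one sampled value in ten would be a plausible-looking but wrong amount, which is the behavior the rest of this article treats as an operational risk.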

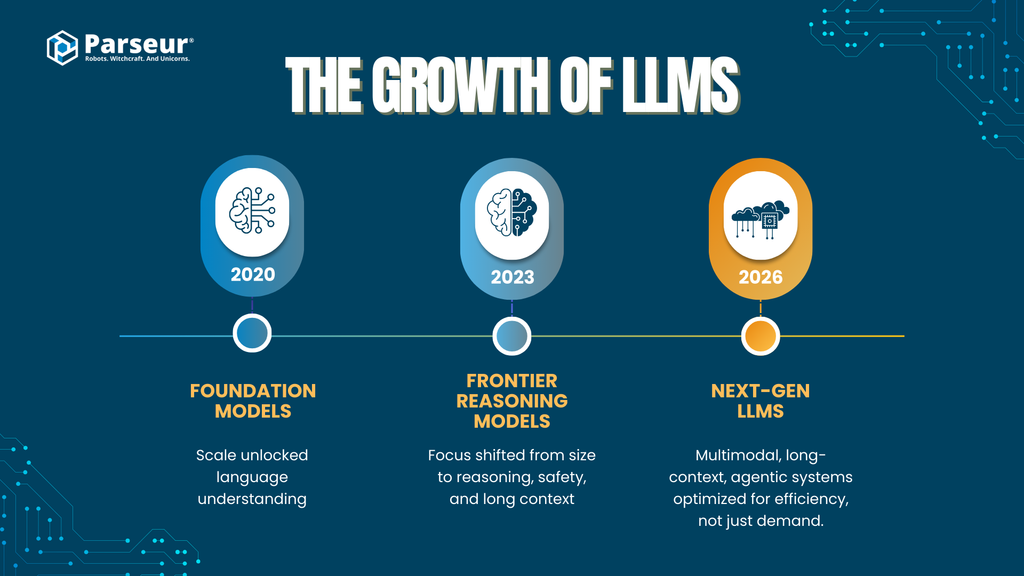

Scale and development of LLMs

The capabilities of large language models have expanded rapidly as both model scale and training techniques have evolved across major AI providers:

- Early foundation models (2020–2021)

Models such as GPT-3 introduced large-scale transformer architectures (~175 billion parameters), demonstrating that scale alone could unlock strong general-purpose language understanding.

- Second-generation frontier models (2023–2024)

Models including GPT-4, Claude 2/3 (Anthropic), Gemini 1.x (Google), and DeepSeek-LLM shifted focus beyond raw parameter count toward improved reasoning, safety tuning, and longer context windows. While most vendors no longer disclose exact parameter sizes, these models are widely believed to operate at or beyond trillion-parameter equivalence when accounting for mixture-of-experts and architectural optimizations.

- Search- and retrieval-augmented models

Platforms such as Perplexity AI emphasize retrieval-augmented generation (RAG), combining LLM reasoning with real-time search and citation mechanisms to improve factual accuracy and reduce hallucinations in knowledge-intensive tasks.

- Next-generation LLMs (2025–2026)

Across providers, the emphasis is shifting toward:

- Multimodality (text, images, tables, documents, audio)

- Long-context processing (hundreds of thousands to millions of tokens)

- Agentic capabilities (tool use, multi-step reasoning, orchestration)

- Efficiency and specialization, rather than linear increases in model size

This evolution signals a broader industry trend: performance gains are increasingly driven by architecture, tooling, and system design rather than parameter count alone, an important distinction for enterprise document automation use cases.

Enterprise Adoption Trends

- LLMs are moving from pilots to production

- 78% of organizations report using generative AI in at least one business function, including operations, analytics, and automation.

- Enterprise deployment is accelerating rapidly

- By 2026, over 80% of enterprises are expected to have used generative AI APIs or deployed GenAI-enabled applications in production, up from under 5% in 2023, according to Gartner.

- Document processing is a top enterprise use case

- Document automation and data extraction rank among the leading GenAI applications, driven by demand for reduced manual effort, faster processing cycles, and improved operational efficiency (McKinsey, Gartner).

Relevance to Document Automation

In document workflows, LLMs are primarily used for:

- Processing unstructured or semi-structured text

- Classifying documents by type or intent

- Extracting loosely defined fields where rigid rules fail

- Handling language variability across vendors, regions, and formats

However, while LLMs excel at interpretation and reasoning, they are inherently probabilistic systems. Their outputs are generated based on likelihood rather than deterministic rules, a characteristic that has important implications for accuracy, repeatability, cost, and governance in high-volume document automation.

This distinction sets the stage for the central question enterprises must address: where LLMs add strategic value, and where specialized document processing systems remain essential.

Core Capabilities: Where LLMs Excel (The Strategic Layer)

Large Language Models deliver their greatest value at the strategic layer of document automation, where flexibility, semantic understanding, and reasoning matter more than deterministic precision. Their strengths are qualitative rather than mechanical, making them particularly effective in early-stage automation, edge cases, and knowledge-intensive workflows.

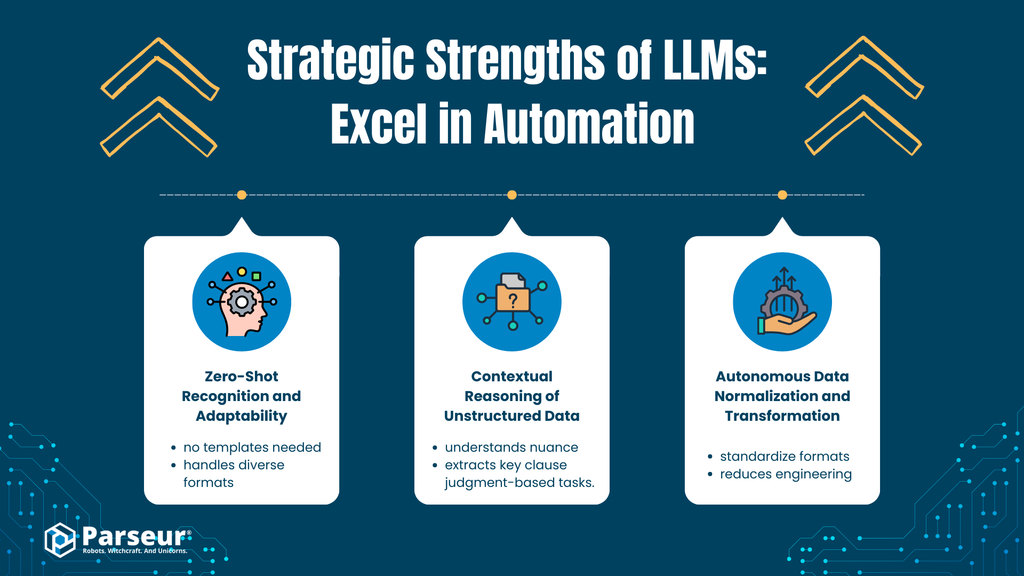

A. Zero-Shot Recognition and Adaptability

Analysis

LLMs possess strong semantic understanding, allowing them to recognize and extract relevant information from document types and layouts they have never explicitly seen before. For example, an LLM can identify an invoice number, due date, or total amount from a non-standard invoice issued by a new supplier, even when labels, positioning, or formatting differ significantly from prior examples.

This capability stems from generalized language modeling rather than document-specific training. The model infers meaning from context, not from fixed rules or predefined templates.

Business Impact

- Accelerates time-to-value by reducing upfront configuration

- Minimizes dependency on large, labeled training datasets

- Enables faster onboarding of new vendors, formats, or document types

For organizations operating in dynamic or heterogeneous environments, this adaptability can significantly lower initial automation friction.

B. Contextual Reasoning of Unstructured Data

Analysis

Unlike traditional rule-based systems (e.g., regular expressions or fixed-position logic), LLMs can analyze nuance, intent, and implied meaning in unstructured or semi-structured text. This includes long-form content such as emails, contract clauses, policy documents, and customer correspondence.

LLMs can extract meaning from narrative language, understand relationships between concepts, and reason across paragraphs, capabilities that are fundamentally difficult to encode using deterministic rules.

Business Impact

- Enables automation of workflows that require judgment-based reasoning.

- Supports use cases such as:

- Customer intent classification

- Clause identification in legal text

- Extraction of key dates, obligations, or risks from narrative documents

- Reduces manual review in knowledge-heavy processes

This makes LLMs particularly valuable in domains where structure is inconsistent, and context matters as much as content.

C. Autonomous Data Normalization and Transformation

Analysis

LLMs can normalize extracted data during generation. For example, they can:

- Convert varied date formats into standardized representations (e.g., ISO 8601)

- Standardize currencies and numeric formats

- Harmonize field naming conventions across inconsistent sources

This reduces reliance on downstream transformation logic, custom scripts, or brittle post-processing pipelines.
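For comparison, the downstream transformation logic that LLM-side normalization is meant to reduce might look like the sketch below. The list of date layouts and the currency handling are assumptions chosen for illustration, not a complete or recommended set.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical date layouts seen across vendors; a real pipeline would extend this.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d %b %Y")

def normalize_date(raw: str) -> str:
    """Return an ISO 8601 date string, or raise ValueError if no layout matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")

def normalize_amount(raw: str) -> Decimal:
    """Strip currency symbols and thousands separators (assumes '.' is the decimal mark)."""
    cleaned = raw.replace("$", "").replace("€", "").replace(",", "").strip()
    return Decimal(cleaned)

print(normalize_date("March 5, 2025"))
print(normalize_amount("$1,234.50"))
```

Every new vendor format means another entry in `DATE_FORMATS`, which is the brittleness the paragraph above refers to. The trade-off is that this code fails loudly on unknown input, whereas an LLM may silently guess.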

Business Impact

- Simplifies integration with downstream systems (ERP, CRM, analytics)

- Reduces engineering overhead for data cleaning and formatting

- Improves the speed of deployment for proof-of-concept and pilot projects

Strategic Strengths of LLMs in Automation

At a broader level, LLMs bring several foundational advantages to enterprise automation initiatives:

Natural Language Understanding at Scale

Extract, summarize, and categorize unstructured text across large document volumes.

Semantic Flexibility

Identify meaning across variations in phrasing, layout, and intent.

Generalized, Non-Rule-Bound Reasoning

Perform classification, inference, and pattern recognition without explicit logic trees.

Rapid Cross-Domain Applicability

Applicable across customer support, legal review, knowledge management, and internal tooling.

Foundation for Agentic Workflows

Enable prompt chaining, task decomposition, and decision orchestration in AI-driven systems.

For example, one SaaS client of ours, who annually processed approximately 4,000 invoices, began a project to automate the invoice intake process. Using LLMs, the client was able to apply the 40% savings from the manual workload associated with standard vendor invoices. In contrast, when attempting to process invoices from a variety of older vendors with non-standard formats and scanned PDF versions, LLMs were unable to accurately extract invoice total amounts or dates. The solution was to provide rule-based checks and optical character recognition (OCR) verification for LLMs. It is critical to understand that LLMs are best suited to assist with automating documents but should not take the lead in the decision-making process. - Nick Mikhalenkov, SEO Manager, Nine Peaks Media

Critical Limitations: Where LLMs Struggle (The Operational Layer)

While Large Language Models (LLMs) offer significant strategic value, their limitations become pronounced when applied to high-volume, production-grade document automation. At the operational layer, where accuracy, consistency, speed, and cost control are non-negotiable, pure LLM-based approaches introduce measurable risk.

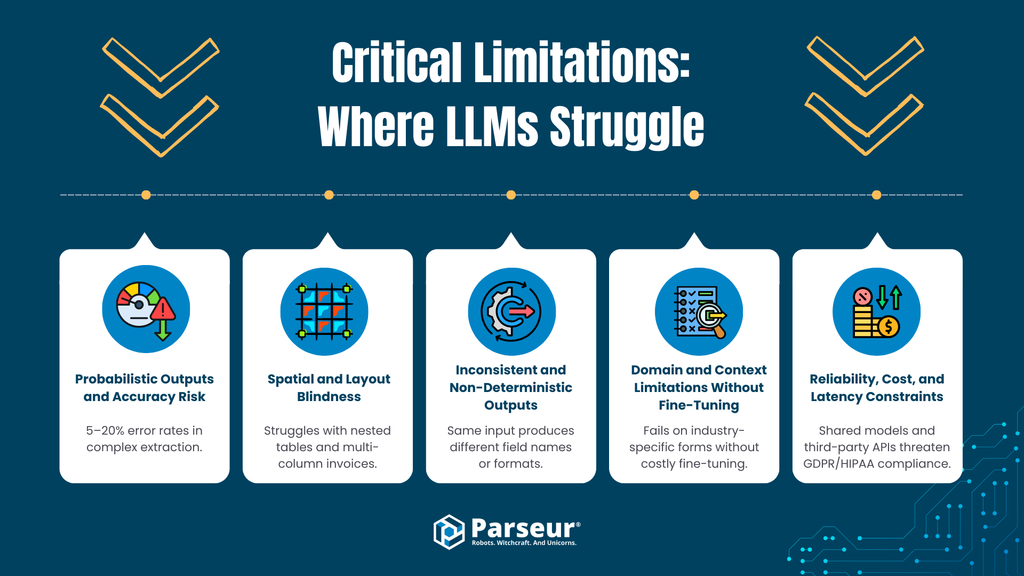

A. Probabilistic Outputs and Accuracy Risk

The Limitation

LLMs are inherently probabilistic systems; they generate outputs based on statistical likelihood rather than deterministic rules. Even advanced models continue to produce incorrect or fabricated information. According to industry benchmarks, leading models such as GPT-4o still exhibit measurable hallucination or error rates on structured tasks, with Master of Code reporting 5–20% error/hallucination rates in complex reasoning and extraction scenarios.

Operational Risk

In financial and operational workflows such as accounts payable, accounts receivable, procurement, or compliance reporting, probabilistic errors are unacceptable. Ramp’s data shows manual invoice processing alone produces error rates of about 1–3%, meaning 10–30 problematic transactions per 1,000 invoices that require correction or investigation. These errors often lead to missed discounts, late payments, and costly rework. Unlike rule-based systems, LLMs cannot guarantee identical outputs given identical inputs without extensive verification layers and human review, eroding much of the efficiency that automation seeks to deliver.

B. Spatial and Layout Blindness

The Limitation

Most LLMs process text sequentially and lack a native understanding of spatial relationships. While they can read text extracted from documents, they struggle to reliably interpret layout-dependent meaning, such as:

- Multi-column invoices

- Nested or multi-line tables

- Headers spanning multiple columns

- Values whose meaning is defined by position rather than labels

However, this is changing as newer models emerge.

Operational Risk

In structured documents, layout is logic. Misinterpreting row alignment or column association can result in:

- Line items mismatched with prices or quantities

- Totals assigned to the wrong fields

- Header values incorrectly propagated across rows

These errors are often subtle, difficult to detect automatically, and highly damaging in automated workflows.

C. Inconsistent and Non-Deterministic Outputs

The Limitation

LLMs do not enforce strict schemas by default. Field presence, naming, formatting, and ordering can vary between runs, especially when prompts or document structures change slightly.

Operational Risk

Enterprise systems require predictable, schema-stable outputs (e.g., fixed JSON structures, consistent field names, normalized data types). Variability forces teams to:

- Build complex validation and correction layers

- Handle frequent edge cases

- Reintroduce manual review

This undermines the reliability required for end-to-end automation.
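As an illustration of the validation layer described above, the following sketch checks a model's JSON output against a fixed schema. The schema, field names, and sample payloads are hypothetical and exist only to show the shape of the check.

```python
import json

# Hypothetical fixed schema: field name -> required Python type.
INVOICE_SCHEMA = {"invoice_number": str, "total": float, "due_date": str}

def validate_output(raw_json: str) -> list[str]:
    """Return a list of schema violations; an empty list means the output conforms."""
    try:
        data = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    problems = []
    for field, expected in INVOICE_SCHEMA.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"wrong type for {field}: {type(data[field]).__name__}")
    # Report fields the schema does not know about, in a stable order.
    for field in sorted(data.keys() - INVOICE_SCHEMA.keys()):
        problems.append(f"unexpected field: {field}")
    return problems

# A renamed key and a string-typed amount are both flagged.
print(validate_output('{"invoiceNumber": "INV-1", "total": "1,250.00", "due_date": "2025-03-05"}'))
```

A check like this catches structural drift, but it cannot tell whether a well-formed value is actually correct, which is why teams end up adding correction logic and manual review on top.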

D. Domain and Context Limitations Without Fine-Tuning

The Limitation

While LLMs demonstrate broad general knowledge, they often struggle with highly specialized document schemas (e.g., logistics documents, tax forms, industry-specific invoices) without fine-tuning or prompt engineering.

Operational Risk

Fine-tuning introduces additional complexity:

- Requires curated datasets

- Increases development time

- Raises data privacy and retention concerns

- Adds ongoing maintenance overhead

For many enterprises, this negates the promised “plug-and-play” advantage of LLMs.

E. Reliability, Cost, and Latency Constraints

The Limitation

LLM inference, particularly agentic or multi-step reasoning, is computationally expensive. Real-world extraction workflows using LLMs often take 8 to 40 seconds per document, compared to milliseconds for specialized OCR and extraction engines.

Operational Risk

At scale, this creates high cost and performance issues:

- API costs can be 10×–100× higher per document than specialized IDP systems

- Latency becomes a bottleneck in time-sensitive workflows

- Throughput constraints limit batch processing and peak loads

For environments processing thousands or tens of thousands of documents per month, unit economics often become untenable.
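A quick back-of-the-envelope calculation shows why latency matters at this scale. The sketch assumes a batch of 10,000 documents processed strictly one at a time; real deployments can run requests in parallel, but API rate limits cap how far that helps.

```python
def sequential_hours(documents: int, seconds_per_document: float) -> float:
    """Hours needed to process a batch one document at a time."""
    return documents * seconds_per_document / 3600

# 10,000 documents (an assumed batch size) at the 8-40 second range quoted above.
for latency in (8, 40):
    print(f"{latency}s/doc -> {sequential_hours(10_000, latency):.1f} hours")
```

Under these assumptions the batch takes about 22 hours at 8 seconds per document and about 111 hours at 40 seconds per document.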

F. Data Privacy and Compliance Exposure

The Limitation

Many LLM integrations involve third-party APIs, shared model architectures, or unclear data retention policies. In regulated environments, this raises concerns around:

- GDPR purpose limitation and data minimization

- Right to erasure and auditability

- HIPAA, GLBA, and industry-specific compliance

Operational Risk

When documents are processed through models that may log, retain, or reuse data for training, enterprises lose control over sensitive information. This introduces compliance risk that cannot always be mitigated through contracts alone.

From my point of view, one of the most significant limitations of Large Language Models in Document Processing is their inability to deliver precise results when accuracy really counts. LLMs do well at summarizing and understanding what's being said in text; however, they fail at structured data extraction, where minor errors in reading a score wrong, reading a date wrong, etc., can be detrimental to the outcome of a process. Additionally, LLMs tend to sound very confident in their answers even when the underlying data is either missing or unclear.

In education-related use cases, I have observed that LLMs perform well when summarizing class materials or explaining concepts. Still, they consistently fail to extract standardized information from academic records or test results accurately. Traditional rule-based systems with human oversight continue to outperform pure LLM-based automation in these applications. - Joern Meissner, Founder & Chairman, Manhattan Review

Summary: Strategic Intelligence vs. Operational Reliability in Document Automation

Large Language Models (LLMs) offer unmatched capabilities in natural language understanding, semantic interpretation, and contextual reasoning. However, in enterprise document automation workflows, LLMs face limitations in precision, deterministic extraction, layout interpretation, cost efficiency, and regulatory compliance. Their probabilistic outputs and slower processing times make a pure LLM approach risky for high-volume invoice processing, accounts payable automation, and other structured document workflows.

The Rise of “Agentic AI” in Document Processing

As organizations attempt to overcome the limitations of pure LLM-based extraction, a new architectural pattern has emerged: Agentic AI. In this model, LLMs are no longer single-pass text generators but act as orchestrators, invoking external tools, applying multi-step reasoning, and iteratively validating their own outputs.

What Is Agentic AI?

Agentic AI refers to systems in which an LLM:

- Breaks a task into multiple steps

- Calls external tools (OCR engines, calculators, databases, validation scripts)

- Reviews and revises its own outputs

- Repeats this loop until a confidence threshold is reached

In document processing, this often means an LLM extracts data, checks totals, re-queries the document, and corrects inconsistencies before producing a final result.
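The extract, check, and retry loop described above can be sketched as follows. The `extract` callable is a hypothetical stand-in for an LLM call, and the stop condition here is a simple totals check rather than a model-reported confidence score.

```python
from typing import Callable

def totals_match(result: dict, tolerance: float = 0.01) -> bool:
    """Check that the line items add up to the reported total."""
    return abs(sum(result["line_items"]) - result["total"]) <= tolerance

def agentic_extract(document: str,
                    extract: Callable[[str, int], dict],
                    max_attempts: int = 3) -> tuple[dict, int]:
    """Call `extract` until the result passes validation or attempts run out.

    `extract` (hypothetical) receives the document and the attempt number
    and returns a dict with "line_items" and "total".
    """
    result: dict = {}
    for attempt in range(1, max_attempts + 1):
        result = extract(document, attempt)
        if totals_match(result):
            return result, attempt
    raise ValueError(f"validation failed after {max_attempts} attempts: {result}")

def fake_extract(document: str, attempt: int) -> dict:
    # Simulated model: misreads the total on the first pass only.
    total = 150.0 if attempt == 1 else 160.0
    return {"line_items": [100.0, 60.0], "total": total}

result, attempts = agentic_extract("invoice text", fake_extract)
print(result, attempts)
```

Each pass through the loop is another model invocation, so any accuracy gained this way is paid for in added latency and token cost.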

The Intended Benefit: Reduced Hallucinations

By introducing validation loops and tool-assisted reasoning, agentic workflows can reduce some common LLM failure modes:

- Numerical inconsistencies

- Missing fields

- Obvious logical errors (e.g., totals not matching line items)

This approach moves the LLM from a single probabilistic guess to a self-correcting system, improving accuracy in complex or ambiguous documents.

The Trade-Off: Latency, Cost, and System Complexity

While agentic architectures improve reasoning reliability, they introduce substantial operational trade-offs:

Latency

Each reasoning step and tool call adds processing time. In practice, agentic document extraction workflows can take 8–40 seconds per document, making them unsuitable for real-time processing or large batch jobs.

Cost

Multiple LLM invocations dramatically increase token usage and API costs. At scale, this can result in per-document costs that are an order of magnitude higher than those of deterministic extraction pipelines.

Engineering Complexity

Agentic systems require:

- Orchestration frameworks

- Error handling across multiple tools

- Monitoring and observability layers

- Continuous prompt and logic tuning

This increases integration time and long-term maintenance overhead.

Operational Reality: Not Built for High-Throughput Automation

For enterprises processing thousands of documents per month, these trade-offs are difficult to justify. High-volume document automation prioritizes:

- Predictable latency

- Stable costs

- Deterministic outputs

- Simple failure modes

Agentic AI optimizes for reasoning depth, not operational efficiency.

Verdict: Powerful, but Narrowly Applicable

Agentic AI represents an important advance in how LLMs are applied to complex tasks. However, its strengths align best with:

- Low-volume, high-complexity research

- Exception handling and edge-case analysis

- Knowledge-intensive document review

It is not well-suited for high-volume, production-grade data entry or document processing pipelines.

Agentic AI boosts LLM reasoning, but it does not eliminate the fundamental trade-offs of probabilistic models. For high-throughput document automation, it complements rather than replaces deterministic and specialized extraction systems.

Why Specialized Tools Still Matter (Parseur and the Value of Purpose-Built Engines)

As interest in LLM-driven automation accelerates, many organizations assume that general-purpose models can replace traditional document processing systems. In practice, the opposite is emerging. Enterprises that achieve reliable, high-volume automation increasingly combine LLMs with specialized document processing engines, using each where it delivers the greatest value.

Purpose-built platforms like Parseur exist not because LLMs lack intelligence, but because enterprise automation prioritizes precision, predictability, and operational efficiency over generalized reasoning.

Precision at Scale

Document automation systems operate under fundamentally different constraints than conversational AI. Invoices, purchase orders, and financial forms require field-level accuracy, not approximate understanding.

Specialized document processing engines rely on:

- Rule-enhanced extraction

- Layout-aware pattern recognition

- Classification models tuned for structured documents

This approach produces deterministic outputs, ensuring the same document yields the same result every time.

In contrast, LLM-based extraction remains probabilistic. Even small error rates compound quickly at scale, creating downstream reconciliation and exception-handling costs that negate automation gains.
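A short calculation shows how per-field errors compound into per-document errors. The sketch assumes 10 fields per document and independent errors between fields; both are simplifying assumptions, and the accuracy values are illustrative.

```python
def document_accuracy(field_accuracy: float, fields_per_document: int) -> float:
    """Probability that every field in a document is correct, assuming independent errors."""
    return field_accuracy ** fields_per_document

for acc in (0.99, 0.97, 0.95):
    print(f"field accuracy {acc:.0%} -> fully correct documents {document_accuracy(acc, 10):.1%}")
```

Under these assumptions, 97% field-level accuracy leaves only about 74% of documents fully correct, so roughly one document in four would still need a correction or review.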

Configurable Rules Combined with Targeted Learning

Modern document processing platforms are no longer purely rule-based. They combine:

- Configurable templates and validation rules

- Lightweight classification models

- Optional AI-assisted field detection

This hybrid approach consistently delivers 95%+ field-level extraction accuracy across variable document formats, according to industry benchmarks.

Typical LLM‑only extraction workflows report higher field error rates on structured financial documents, whereas specialized Intelligent Document Processing systems have been shown to reduce extraction and entry errors by over 52%, translating directly into less manual intervention and review effort.

The key distinction is control. Enterprises can explicitly define:

- Accepted formats

- Validation logic

- Fallback behaviors

This level of determinism is difficult to guarantee with prompt-based systems alone.

Integration and Workflow Maturity

Purpose-built platforms are designed to operate inside production workflows, not at the edge of experimentation.

Mature document processing tools provide:

- Stable REST APIs and webhooks

- Native integrations with ERP, accounting, and CRM systems

- Compatibility with automation platforms (Zapier, Make, Power Automate)

- Built-in monitoring, retries, and error handling

This allows organizations to deploy document automation as a reliable system component, rather than a fragile orchestration of prompts and scripts.

LLMs integrate well at the logic and reasoning layer, but specialized tools handle the operational plumbing required for enterprise-scale automation.

Security and Compliance Built In

Security and compliance are not add-ons in enterprise document processing; they are architectural requirements.

Specialized platforms like Parseur are designed with:

- Tenant-level data isolation

- Encryption at rest and in transit

- Configurable data retention policies

- GDPR-aligned processing and deletion controls

Because these systems do not rely on customer documents to retrain global models, they avoid many of the data sovereignty and auditability challenges associated with shared AI platforms.

For regulated industries, this distinction is critical. Compliance depends not only on how data is stored, but also on whether it is reused at all.

The Intersection: LLMs Inside Document Processing Tools

As enterprises move beyond experimentation, a more pragmatic model is emerging: LLMs are being embedded inside document processing tools, not used as standalone extraction engines. This hybrid approach combines the adaptability of large language models with the reliability of deterministic systems.

Rather than replacing traditional document automation, LLMs increasingly operate as supportive layers, enhancing flexibility, error handling, and downstream intelligence while core extraction remains structured and controlled.

This shift reflects a broader architectural principle: LLMs are most effective when constrained by systems that enforce accuracy, performance, and compliance.

Prompt-Driven Correction and Enrichment Layers

One of the most effective uses of LLM integration in document extraction tools is post-extraction enrichment.

In this model:

- A deterministic or layout-aware engine extracts core fields (e.g., invoice number, total, due date).

- An LLM is applied selectively to:

- Normalize descriptions

- Resolve ambiguous labels

- Add contextual metadata (e.g., vendor categorization, payment terms identification)

Because the LLM operates after primary extraction, its probabilistic nature does not compromise data integrity. Errors can be bounded, verified, or ignored without disrupting the underlying workflow.

This approach delivers flexibility without introducing systemic risk.
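The two-stage pattern above might be sketched like this. The regular expressions, sample text, and `llm_metadata` argument are all illustrative assumptions; `llm_metadata` stands in for whatever an LLM enrichment call would return, and this is not a description of how Parseur or any specific product implements extraction.

```python
import re

def extract_core_fields(text: str) -> dict:
    """Deterministic extraction with regular expressions (patterns are illustrative)."""
    number = re.search(r"Invoice\s*(?:No\.?|#)\s*:?\s*(\S+)", text)
    total = re.search(r"Total\s*:?\s*\$?([\d,]+\.\d{2})", text)
    return {
        "invoice_number": number.group(1) if number else None,
        "total": float(total.group(1).replace(",", "")) if total else None,
    }

def enrich(core: dict, llm_metadata: dict) -> dict:
    """Attach LLM-suggested metadata under its own key so core fields are never overwritten."""
    return {**core, "enrichment": dict(llm_metadata)}

text = "Invoice No: INV-2041\nTotal: $1,250.00"
record = enrich(extract_core_fields(text), {"vendor_category": "software"})
print(record)
```

Keeping the enrichment under a separate key means a wrong or missing LLM suggestion can be discarded without touching the extracted invoice number or total.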

Human-in-the-Loop Validation Using LLM Summarization

Another emerging pattern is the use of LLMs to assist human reviewers, rather than replace them.

Examples include:

- Summarizing long documents to highlight key fields or anomalies

- Explaining why a field may have failed validation

- Generating natural-language review notes for audit trails

In document processing platforms, this reduces cognitive load during exception handling while keeping final control with the human operator.

From an operational standpoint, this improves throughput and consistency without relying on LLMs for authoritative data entry, an important distinction in regulated workflows.

Agentic AI Workflows: LLMs Orchestrating Deterministic Systems

More advanced platforms are experimenting with agentic AI workflows, in which LLMs coordinate multiple tools across a document-processing pipeline.

In these architectures:

- The LLM acts as an orchestration layer

- Deterministic systems handle OCR, classification, and field extraction

- Validation rules enforce constraints

- Humans intervene only when thresholds are breached

While powerful, these systems must be carefully scoped. As discussed earlier, agentic workflows introduce latency, cost, and operational complexity, making them best suited for:

- Low-volume, high-variance documents

- Cross-system reconciliation tasks

- Exception-driven workflows

For high-volume extraction, agentic AI complements, rather than replaces, specialized document engines.
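The "humans intervene only when thresholds are breached" rule can be expressed as a small routing function. The per-field confidence scores and the 0.90 threshold are assumptions for illustration; where such scores come from depends on the extraction engine in use.

```python
def route(field_confidences: dict[str, float], threshold: float = 0.90) -> str:
    """Return 'auto' when every field meets the threshold, otherwise 'human_review'."""
    low = [name for name, confidence in field_confidences.items() if confidence < threshold]
    return "human_review" if low else "auto"

print(route({"total": 0.99, "due_date": 0.95}))
print(route({"total": 0.99, "due_date": 0.62}))
```

Raising the threshold sends more documents to reviewers and lowers the chance of an unchecked error; the right value is a business decision rather than a technical one.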

Why Hybrid Architectures Are Becoming the Enterprise Standard

The growing adoption of LLM integration in document extraction tools reflects a mature understanding of AI’s strengths and limitations.

Hybrid systems deliver:

- Deterministic accuracy for structured data

- Semantic flexibility for edge cases

- Predictable cost and performance at scale

- Stronger compliance and auditability

Strategic Takeaway

The future of document automation is not LLM-first; it is LLM-aware.

Enterprises that succeed in 2026 and beyond will be those that:

- Use LLMs to improve decision-making and flexibility

- Rely on purpose-built engines for operational execution

- Design architectures where intelligence is constrained by reliability

When to Use LLMs vs. Specialized Document Processing Tools

| Decision Criteria | Use Large Language Models (LLMs) | Use Specialized Document Processing Tools (e.g., Parseur) |
|---|---|---|
| Document Type Variability | Highly variable, novel, or unstructured documents (emails, free-form text, contracts) | Consistent or semi-structured documents (invoices, receipts, forms) |
| Accuracy Requirements | Advisory or assistive outputs where human review is acceptable | System-of-record automation requiring deterministic, repeatable accuracy |
| Error Tolerance | Occasional inaccuracies acceptable | Near-zero error tolerance required |
| Regulatory Risk | Low sensitivity or non-regulated data | Regulated data (GDPR, CCPA, financial, healthcare) |
| Data Privacy & Sovereignty | Data used for reasoning or enrichment with limited retention needs | Strict data isolation, auditability, and right-to-erasure requirements |
| Processing Volume | Low to moderate volume | High volume (thousands to millions of documents per month) |
| Latency Sensitivity | Seconds per document acceptable | Millisecond-level or near-real-time processing required |
| Cost Efficiency at Scale | Acceptable at low volume; costs increase rapidly with scale | Predictable, low unit cost at high volume |
| Integration Complexity | Flexible outputs, loosely coupled workflows | Fixed schemas, ERP/RPA/Accounting integrations |
| Best-Fit Use Cases | Classification, summarization, intent detection, enrichment | Invoice processing, AP/AR, form extraction, compliance workflows |

Future Outlook: LLMs, Agentic Systems, and Automation

The landscape of AI-driven document automation is rapidly evolving. Enterprises should understand not only the capabilities of current LLMs but also the emerging trends that will shape automation strategies over the next several years.

1. Next-Generation LLM Architectures

- Multi-Modal Models: LLMs are increasingly able to process not just text but images, tables, and structured documents simultaneously, opening new possibilities for invoice, form, and PDF automation.

- Retrieval-Augmented Models (RAG): By integrating external knowledge sources, these models improve accuracy and contextual understanding without retraining on sensitive customer data.

- Agentic AI Workflows: Multi-step reasoning loops and tool integration allow LLMs to perform tasks autonomously, such as cross-referencing fields, summarizing complex contracts, or suggesting exceptions.

2. Enterprise Adoption Trends

- Future adoption projections: AI adoption is expected to reach universal levels among large enterprises by 2027, with generative AI becoming a core part of automation, content processing, and knowledge workflows, suggesting hybrid automation stacks will be common. (Inferred from broad enterprise AI trends rather than a single explicit forecast).

- Adoption will focus on reducing human effort, improving operational speed, and enabling strategic insights from unstructured enterprise data.

3. Explainability, Trust, and Oversight

- As LLM adoption grows, regulatory and operational pressure for explainable AI will increase. Organizations will need mechanisms to audit outputs, confirm decisions, and maintain compliance.

- Human-in-the-loop (HITL) oversight will remain crucial, especially for critical documents such as contracts, invoices, or financial statements.

- Enterprise automation strategies will increasingly favor hybrid architectures that combine LLM flexibility with the deterministic reliability of specialized engines to ensure both trustworthiness and compliance.

Balancing AI Intelligence with Operational Control

Large Language Models (LLMs) provide powerful cognitive capabilities for understanding unstructured text and reasoning across complex documents, but they are not a standalone approach for enterprise-grade data extraction. Organizations should selectively leverage LLMs for strategic tasks that require flexibility and contextual reasoning, while relying on specialized platforms like Parseur to ensure deterministic accuracy, regulatory compliance, scalability, and auditability. By combining AI intelligence with purpose-built extraction engines, enterprises can achieve both operational reliability and strategic insight, optimizing document automation for speed, precision, and control.

Frequently Asked Questions

To help enterprise decision-makers understand the practical applications and limitations of Large Language Models in document workflows, we’ve compiled answers to the most common questions about their use, security, and integration with specialized tools like Parseur.

What are LLMs good for in document processing?

LLMs excel at interpreting unstructured text, recognizing patterns, classifying content, and extracting context-sensitive information. They are ideal for flexible, reasoning-heavy workflows but are probabilistic rather than deterministic.

Why can’t LLMs replace specialized extraction tools?

LLMs can produce inconsistent outputs, struggle with layout-dependent data, and are costly at scale. Tools like Parseur provide deterministic accuracy, compliance, and scalability for high-volume, structured documents.

Are LLMs secure for business data?

Security depends on implementation. Many LLMs use shared APIs, which may retain data. Parseur ensures isolated processing, configurable retention, and compliance with GDPR and other regulations.

How should enterprises combine LLMs and specialized tools?

Use LLMs for reasoning and unstructured data, and specialized engines for high-volume, structured, or regulated documents to ensure flexibility and reliability.
