What Does “No Training On Your Data” Mean?

At its core, the phrase “no training on your data” refers to a commitment by AI providers that the information you share with their systems will not be repurposed to improve or retrain their machine learning models.

Key Takeaways

- “No training on your data” ensures information is processed, not reused for AI training.

- Enterprises need this policy to protect trust, compliance, and IP.

- Parseur delivers encryption, retention controls, compliance, and strict no-training by default.

When companies adopt AI tools, they want certainty that their sensitive business information will not be misused, exposed, or repurposed in ways they disapprove of.

The same concerns appear among consumers. According to Protecto, about 70% of adults do not trust companies to use AI responsibly, and over 80% expect some misuse of their data. For businesses, this means the reputational cost of mishandling data can outweigh even regulatory fines.

In recent years, major AI providers such as OpenAI, Anthropic, and Microsoft have faced increasing scrutiny about how they use customer data. The central question is whether the data shared with these platforms is processed to deliver results or whether it is also fed back into the model for training.

This is why “no training on your data” has quickly become the new standard for building trust in AI. It is a commitment that customer data is used strictly for processing and never incorporated into broader training models. For enterprises, this guarantee forms the foundation of trust, compliance, and long-term adoption of AI solutions.

In this article, you will learn what “no training on your data” means in practice, why it matters for enterprise compliance and governance, how major providers handle it, and how Parseur implements this policy to ensure both AI trust and data security.

What Does “No Training On Your Data” Mean?

Under a no-training policy, your data is used only to fulfill your request, whether that’s summarizing a document, extracting fields, drafting text, or analyzing patterns. Afterward, it is either discarded or stored securely under clearly defined terms.

Here’s the key distinction:

- Processing: Your data is used in real time to complete a task, such as generating a report or extracting invoice details. Once finished, the data does not re-enter the system for future learning.

- Training: Your data is fed into the AI model to improve performance. Over time, your inputs could become part of the model’s knowledge base, raising the possibility that fragments of sensitive information might resurface.

Many large AI systems blurred this line in the past, using customer inputs for both processing and training. While this helped the technology evolve, it also created risks:

- Confidentiality concerns: Sensitive business documents, financial data, or client information could inadvertently be exposed or reconstructed.

- Data ownership issues: If your inputs help train a model, does that dilute your intellectual property rights?

- Compliance challenges: Industries like healthcare, finance, and law cannot risk client data being reused without explicit consent.

A no-training policy removes that ambiguity. Your data is only processed for the task; it is not recycled for training, shared with third parties, or repurposed in hidden ways. This strengthens data security, helps meet compliance requirements, and builds trust between businesses and AI providers.

In short, “no training on your data” means your data stays yours: private, protected, and under your terms.

Why Data Training Policies Matter For Businesses

An AI data privacy policy is not just a nice-to-have feature. For businesses, it is a crucial safeguard that determines whether sensitive information remains secure or becomes a hidden liability. Without a clear policy, organizations risk losing control of their data and exposing themselves to regulatory fines, reputational damage, and competitive disadvantages.

Risks of data being used for training

- Intellectual property leakage: Proprietary business processes, contracts, or research could unintentionally become part of a model’s training data, diminishing ownership and exclusivity.

- Compliance and regulatory breaches: Regulations such as GDPR and CCPA set strict boundaries on how personal and sensitive data can be handled. If a provider reuses this information for training without explicit consent, it can trigger costly violations.

- Competitive intelligence risks: When your proprietary data is used to enhance a model, competitors could indirectly benefit from the knowledge embedded in that system, eroding your competitive edge.

Why enterprise buyers care

Today's enterprise buyers evaluate AI tools not only on performance but also on their data privacy policies. A 2025 survey by The Futurum Group found that 52% of organizations prioritize a vendor's technical AI expertise, with data handling and privacy controls close behind at 51%, which shows how heavily privacy weighs in vendor selection. A vendor that commits to a no-training policy demonstrates:

- Respect for data ownership: Reassuring clients that their intellectual property stays in their control.

- Compliance alignment: Reducing legal risk and simplifying audits for regulated industries.

- Trust and transparency: Strengthening long-term vendor-client relationships by eliminating hidden data practices.

Ultimately, companies view data training policies as a make-or-break factor in AI adoption. Choosing a vendor with a strict no-training policy allows businesses to innovate with AI while maintaining control, compliance, and confidence in their data security.

The Compliance and Governance Perspective

For many organizations, AI adoption is as much about meeting strict compliance and governance requirements as it is about efficiency. Regulations such as GDPR, security standards such as ISO 27001, and industry-specific frameworks in healthcare, finance, and legal services expect companies to handle data with the highest levels of security and accountability. The pressure is visible: according to the 2025 Investment Management Compliance Testing Survey, 57% of compliance officers identified AI usage as their top compliance concern.

A no-training policy directly supports these requirements by ensuring that data is only used for its intended purpose. This aligns with core compliance principles such as:

- Data minimization: Only the minimum necessary data is processed, reducing exposure.

- Consent and control: Information is not repurposed without explicit authorization.

- Purpose limitation: Data is used solely for the task requested and never recycled for future training.

By following these practices, AI providers make it easier for businesses to demonstrate compliance during audits and to avoid the risk of non-compliance penalties.

Beyond regulations, governance is also about building trust through standardized practices. Organizations like the Electronic Commerce Code Management Association (ECCMA) work to improve data quality and trust across industries, and no-training policies reflect that same commitment to transparency and reliability.

When enterprises choose AI vendors that align with governance best practices, they stay compliant and strengthen the foundations of secure and ethical AI adoption.

Industry Examples: How Big Tech Handles Data Training

One of the clearest signs of how important data policies have become is how Big Tech companies now approach data training. In recent years, major AI vendors have adapted their practices to meet enterprise demands for explicit guarantees about privacy and control.

- OpenAI: Conversations in consumer versions of ChatGPT may be used to improve models unless users opt out in their data controls, while OpenAI states that data from business offerings such as ChatGPT Enterprise and its API is not used for training by default. This distinction highlights the growing separation between consumer AI and enterprise-grade AI.

- Microsoft: In its Copilot for Microsoft 365 and Azure OpenAI Service, Microsoft emphasizes data isolation. Enterprise customer data is never used to train foundation models, giving businesses confidence that sensitive documents and communications remain private.

- Anthropic: With Claude, Anthropic has publicly committed to no training on enterprise data. This reinforces its positioning as a trustworthy AI partner focused on safety, transparency, and client control.

These examples show a clear industry trend: enterprises are demanding and receiving stronger guarantees around AI data privacy policies. Vendors that cannot provide these assurances risk losing ground to competitors who prioritize transparency and trust.

Why ‘No Training on Your Data’ Builds AI Trust

At the heart of every successful AI adoption is one essential factor: trust. Enterprises will only embrace automation and AI at scale if they are confident their data is handled securely and responsibly. PwC’s 2025 AI agent survey shows that 28% of business leaders rank a lack of trust in AI agents as a top challenge to broader deployment. A no-training policy is one of the most effective ways to establish that trust, because it draws a clear boundary between customer information and AI model development.

- Reassures customers: Businesses want to know their proprietary data will never be reused to train models that competitors could benefit from. By committing to no training, vendors provide a critical layer of reassurance that sensitive strategies, client records, or financial data stay fully protected.

- Ensures transparency: Transparency is a cornerstone of AI trust. Clear policies that separate customer data from model training remove ambiguity and give enterprises confidence in managing their information. This also helps internal compliance teams prove responsible AI usage.

- Strengthens adoption: When trust is explicit, enterprises are more willing to roll out AI across high-stakes workflows, from finance and legal to healthcare and customer service. Knowing their data will not be repurposed encourages broader adoption and long-term reliance on automation tools.

“No training on your data” is not just a policy; it’s a competitive advantage. Companies that commit to this principle show they value customer confidence, making it easier for enterprises to integrate AI solutions without hesitation.

How Parseur Implements This Policy

At Parseur, data privacy and compliance are at the core of our platform design. We recognize that many businesses hesitate to adopt AI tools because they are uncertain how their data will be used. Parseur takes a firm stance: your data is yours and will never be used to train AI models.

Here’s what that means in practice:

- No model training on your data: Parseur never reuses customer content (documents, emails, invoices, or utility bills) for AI training. Every extraction runs in a closed environment to keep information private and secure.

- Purpose-built processing only: Data is processed exclusively for extraction and workflow automation, nothing more. It is never retained for, or recycled into, model improvement.

- Enterprise-grade safeguards: With end-to-end encryption, retention controls, and customer deletion rights, businesses maintain full control over their information while meeting industry compliance requirements.

This strict no-training policy protects customer information and builds long-term AI trust. Enterprises can confidently scale automation without worrying about data leakage, intellectual property risks, or compliance violations.

By combining transparency, governance, and robust security measures, Parseur empowers organizations to embrace automation while keeping privacy at the forefront.

Best Practices for Evaluating Vendors

Selecting an AI or automation vendor goes beyond comparing features. For enterprises, the absolute priority is ensuring that the vendor’s data practices align with security, compliance, and governance standards. Without a clear framework, organizations risk exposing sensitive information or falling short of regulatory requirements.

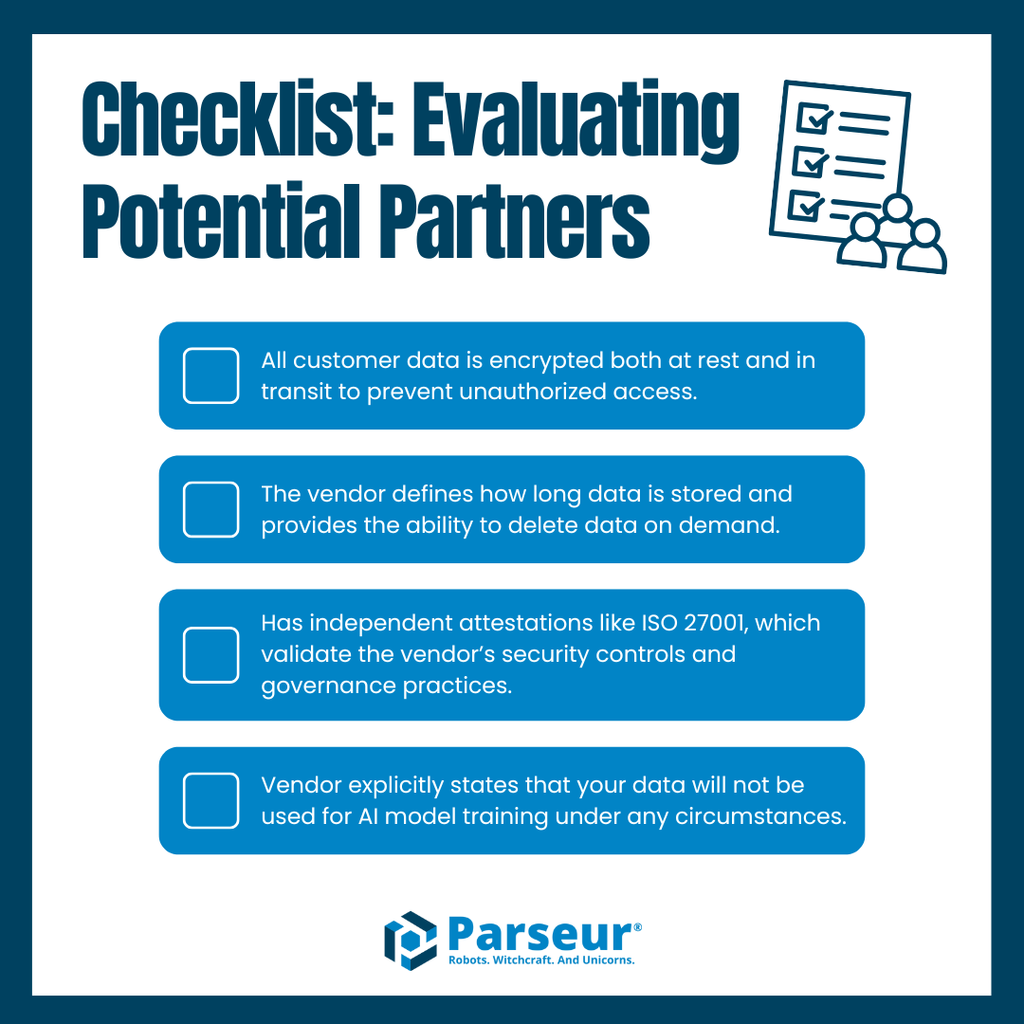

When evaluating potential partners, enterprises should use the following checklist:

- Data encryption: Confirm that all customer data is encrypted at rest and in transit to prevent unauthorized access.

- Retention policy: Ensure the vendor defines how long data is stored and allows users to delete data on demand.

- Compliance certifications: Look for independent attestations like ISO 27001, which validate the vendor’s security controls and governance practices.

- No-training commitment: Verify that the vendor explicitly states that your data will not be used for AI model training under any circumstances.
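To apply the checklist consistently across several candidates, a procurement team might record each vendor's answers in a structured form. The Python sketch below shows one hypothetical way to do that. `VendorProfile`, `CHECKLIST`, and `unmet_requirements` are illustrative names and are not part of any Parseur or third-party API. Each boolean is assumed to come from the vendor's documentation or security questionnaire. The code verifies nothing on its own. It only flags which checklist items remain unmet.

```python
from dataclasses import dataclass


@dataclass
class VendorProfile:
    """Answers gathered from a vendor's documentation or questionnaire.

    Hypothetical schema for illustration; not an industry standard.
    """
    name: str
    encrypts_at_rest: bool
    encrypts_in_transit: bool
    defines_retention_period: bool
    supports_deletion_on_demand: bool
    has_iso_27001: bool
    written_no_training_commitment: bool


# Each checklist item maps to the profile fields that must ALL be True.
CHECKLIST = {
    "Data encryption": ("encrypts_at_rest", "encrypts_in_transit"),
    "Retention policy": ("defines_retention_period", "supports_deletion_on_demand"),
    "Compliance certifications": ("has_iso_27001",),
    "No-training commitment": ("written_no_training_commitment",),
}


def unmet_requirements(vendor: VendorProfile) -> list[str]:
    """Return the checklist items the vendor does not fully satisfy, in checklist order."""
    return [
        item
        for item, required_fields in CHECKLIST.items()
        if not all(getattr(vendor, field) for field in required_fields)
    ]


# Example: a vendor that encrypts data and holds ISO 27001,
# but offers no on-demand deletion and no written no-training commitment.
candidate = VendorProfile(
    name="Example Vendor",
    encrypts_at_rest=True,
    encrypts_in_transit=True,
    defines_retention_period=True,
    supports_deletion_on_demand=False,
    has_iso_27001=True,
    written_no_training_commitment=False,
)
print(unmet_requirements(candidate))  # prints ['Retention policy', 'No-training commitment']
```

Grouping several fields under one checklist item keeps partial answers from passing. For example, a vendor that defines a retention period but cannot delete data on request still fails the retention item.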

Vendors that fail to clarify these points may introduce compliance gaps or leave businesses vulnerable to privacy risks. On the other hand, those that meet all these requirements offer enterprises a strong foundation of trust and reliability.

This is where Parseur stands out. With encryption by default, clear retention controls, recognized compliance standards, and a strict no-training policy, Parseur delivers a solution that allows businesses to scale automation with confidence. Enterprises know precisely how their data is managed, making Parseur a vendor that checks every box.

Why Trust Defines The Future Of Automation

Trust has become the defining factor for businesses choosing automation and data solutions. As organizations handle more sensitive information, confidence in how vendors protect and manage that data is critical. At the center of this trust is the principle of “no training on your data.”

Looking ahead, enterprises will place even greater emphasis on transparency and accountability. Over the next few years, organizations are likely to demand vendors that provide clear retention policies, encryption by default, compliance certifications, and written commitments that customer data will never be reused for model training. Those unable to meet these standards risk losing credibility in a market where governance and data security are non-negotiable.

Parseur is designed for this future. With enterprise-grade encryption, strict retention controls, recognized compliance practices, and a no-training policy, Parseur ensures businesses can automate at scale without compromising security or privacy.

Explore how Parseur protects your data with enterprise-grade security and a strict no-training policy, giving your business the confidence to automate workflows safely, transparently, and at scale.

Frequently Asked Questions

As businesses explore automation and data extraction tools, questions about data security, compliance, and vendor practices often arise. Here are the most common questions enterprises ask about no-training policies and how they impact trust in automation.

What does “no training on your data” mean?

This policy ensures that your data is never reused to train machine learning models or AI systems. Vendors with this policy only process your information for its intended purpose, ensuring it remains private and secure.

Why is a no-training policy important for enterprises?

A strict no-training policy reduces risks of data leakage, compliance breaches, and intellectual property misuse, making it a top requirement for enterprises evaluating automation vendors.

How does Parseur protect my data?

Parseur enforces enterprise-grade security with encryption, retention controls, compliance alignment, and a strict no-training commitment to ensure your information is never repurposed.

Are no-training policies linked to compliance regulations?

Yes. Regulations like GDPR emphasize data minimization and purpose limitation, and standards like ISO 27001 require documented controls over how information is handled. A no-training policy aligns directly with these principles, helping organizations stay compliant.

Will more vendors adopt no-training policies in the future?

Industry experts predict that no-training policies will become the norm as enterprise buyers increasingly demand transparency, compliance alignment, and stronger data governance.
