What Is Data Quality?

Data quality refers to how accurate, complete, consistent, and reliable information is for its intended purpose. In automation, data quality becomes even more critical because machines don’t second-guess; they act on the data they’re given.

Key Takeaways

- Tools like Parseur make it possible to scale automation with clean, compliant, and actionable data.

- Frameworks and standards such as the VACUUM model and the ECCMA (Electronic Commerce Code Management Association) data quality standards provide structure for ensuring reliable data.

- AI, validation, and cleaning techniques strengthen accuracy and trust in automation.

Poor data quality is more than an inconvenience; it’s expensive. Studies show that inaccurate or incomplete data costs businesses millions of dollars each year through wasted resources, compliance risks, and poor decision-making. In the age of automation, the stakes are even higher. The principle of “garbage in, garbage out” (GIGO) still applies: if automation runs on flawed data, the results will be just as flawed.

According to Resolve, manual data processing produces error rates between 3–5%, but automation can cut this to 0.5–1.5%, leading to a 60–80% reduction in costly mistakes within six months of deployment. These improvements highlight the direct connection between automation and efficiency. They also underscore why maintaining high-quality data is critical: even minor errors can have massive consequences when scaled. In fact, according to Techment, poor data quality can cost businesses up to 20–30% of annual revenue and costs the US economy an estimated $3.1 trillion each year.

That’s why data quality in automation has become a central concern for organizations adopting AI-driven document processing and intelligent workflows. High-quality data isn’t just about accuracy; it’s also about consistency, reliability, and trust. Without it, automation can’t deliver its full value.

In this article, we’ll break down the essentials of maintaining data quality in automated systems. We’ll look at frameworks like VACUUM and ECCMA, explore common challenges such as GIGO, and discuss practical solutions, including AI-powered parsing, data validation automation, and human-in-the-loop (HITL) safeguards. By the end, you’ll see how businesses can ensure their automated processes run on clean, accurate, and actionable data.

What Is Data Quality?

Good data quality means:

- Accuracy – values are correct (e.g., an invoice total matches the actual amount billed).

- Completeness – no critical fields are missing (e.g., a contract includes both start and end dates).

- Consistency – the same information is represented the same way across systems (e.g., customer IDs align in CRM and ERP).

- Reliability – data is up to date and comes from trusted sources.
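Two of these dimensions lend themselves to simple automated checks. The Python sketch below tests completeness on a contract record and consistency of customer records between a CRM and an ERP. The field names, IDs, and the dictionaries standing in for the two systems are hypothetical.

```python
from typing import Any

# Hypothetical required fields for a contract record (completeness).
REQUIRED_CONTRACT_FIELDS = ("contract_id", "start_date", "end_date")


def missing_fields(record: dict[str, Any], required: tuple[str, ...]) -> list[str]:
    """Return required fields that are absent or empty (completeness)."""
    return [field for field in required if record.get(field) in (None, "")]


def inconsistent_customers(crm: dict[str, str], erp: dict[str, str]) -> list[str]:
    """Return customer IDs whose names differ between CRM and ERP (consistency).

    Both inputs map customer_id -> customer name. IDs present in only one
    system are reported as inconsistent too.
    """
    all_ids = sorted(set(crm) | set(erp))
    return [cid for cid in all_ids if crm.get(cid) != erp.get(cid)]


contract = {"contract_id": "C-001", "start_date": "2025-01-01", "end_date": ""}
print(missing_fields(contract, REQUIRED_CONTRACT_FIELDS))  # ['end_date']

crm = {"CUST-1": "Acme Corp", "CUST-2": "Globex"}
erp = {"CUST-1": "Acme Corp", "CUST-3": "Initech"}
print(inconsistent_customers(crm, erp))  # ['CUST-2', 'CUST-3']
```

A production consistency check would usually normalize names (case, whitespace, legal suffixes) before comparing. This sketch compares them exactly.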

When automation systems process high-quality data, workflows run smoothly, decisions are faster, and errors are minimized. Poor data quality, on the other hand, introduces risks like duplicate records, compliance failures, and misleading insights, issues that quickly multiply when scaled through automated pipelines.

In short, data quality in automation ensures that every automated action is based on trustworthy information. Without this foundation, even the most advanced AI or machine learning systems will deliver subpar results.

Why Data Quality Matters in Document Processing Automation

Data quality isn’t just a technical detail; it’s a business-critical factor. When automation workflows run on poor-quality data, the ripple effects touch every part of an organization.

Efficiency

- Inaccurate data slows down automation pipelines.

- Manual rework becomes costly and time-consuming.

Cost

- According to MIT Sloan, poor data quality costs organizations an average of 15–25% of revenue through wasted resources, process inefficiencies, and missed opportunities.

- Errors scale quickly in automated processes.

Compliance

- Mistakes in contracts, invoices, or healthcare forms can lead to compliance violations, penalties, and legal exposure.

Customer trust

- Wrong invoices, misfiled claims, or lost shipment details erode confidence and damage brand reputation.

These risks are amplified in the context of automation. Bad data doesn’t just sit in a database; it flows through systems at scale. Instead of saving time and money, flawed inputs can turn automation into a liability, reinforcing the old saying: garbage in, garbage out.

The VACUUM Model: Framework For Data Quality

The VACUUM model is a framework for assessing data quality. It defines six dimensions that determine whether information is trustworthy and usable. In the context of data quality in automation, it provides a practical checklist for ensuring that extracted data is fit for purpose.

Here’s what each element means:

- Valid – Data must follow the correct format or rules. For example, an invoice date should be in a valid date format, not a free-text string.

- Accurate – Captured values should reflect the real-world truth. A patient ID in a medical form must match the ID assigned in the healthcare system.

- Consistent – Data should be uniform across different sources. A vendor name should appear the same way in both invoices and contracts.

- Uniform – Duplicate entries should be avoided. Automated systems shouldn’t process the same shipping record twice.

- Unified – Units of measure, currencies, and formats should be standardized. For instance, amounts should consistently use the same currency (USD vs EUR).

- Model – Data must be relevant and valuable for the intended task. Extracting a page number from a contract might be valid, but it’s not meaningful for contract management.
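To see how the six checks might translate into code, here is a minimal Python sketch that runs one check per VACUUM dimension over a small batch of extracted invoices. It follows the definitions given above, so “Uniform” is the duplicate check and “Unified” is the currency check. The vendor master data, field names, and single expected currency are hypothetical.

```python
from datetime import date

# Hypothetical reference data standing in for a vendor master file.
VENDOR_MASTER = {"V-100": "Acme Supplies", "V-200": "Northwind Traders"}
EXPECTED_CURRENCY = "USD"  # assumption: one standard currency for all invoices
RELEVANT_FIELDS = {"invoice_no", "invoice_date", "vendor_id", "vendor_name", "currency"}


def vacuum_issues(invoices: list[dict]) -> list[tuple[int, list[str]]]:
    """Return (record index, problems) for every record failing a VACUUM check."""
    results = []
    seen_invoice_numbers: set[str] = set()
    for index, inv in enumerate(invoices):
        problems = []
        # Valid: the date must be an ISO 8601 calendar date, not free text.
        try:
            date.fromisoformat(inv["invoice_date"])
        except ValueError:
            problems.append("valid: invoice_date is not a YYYY-MM-DD date")
        # Accurate: the vendor ID must exist in the reference system.
        if inv["vendor_id"] not in VENDOR_MASTER:
            problems.append("accurate: unknown vendor_id")
        # Consistent: the vendor name must match the name on file.
        elif VENDOR_MASTER[inv["vendor_id"]] != inv["vendor_name"]:
            problems.append("consistent: vendor_name differs from master record")
        # Uniform (duplicates, as defined above): each invoice number appears once.
        if inv["invoice_no"] in seen_invoice_numbers:
            problems.append("uniform: duplicate invoice_no")
        seen_invoice_numbers.add(inv["invoice_no"])
        # Unified: amounts use the standard currency.
        if inv["currency"] != EXPECTED_CURRENCY:
            problems.append(f"unified: currency {inv['currency']} is not {EXPECTED_CURRENCY}")
        # Model: flag extracted fields that are irrelevant to the task.
        extra = sorted(set(inv) - RELEVANT_FIELDS)
        if extra:
            problems.append(f"model: irrelevant fields {extra}")
        if problems:
            results.append((index, problems))
    return results


invoices = [
    {"invoice_no": "INV-1", "invoice_date": "2025-09-12", "vendor_id": "V-100",
     "vendor_name": "Acme Supplies", "currency": "USD"},
    {"invoice_no": "INV-2", "invoice_date": "Sept 12th", "vendor_id": "V-200",
     "vendor_name": "Northwind", "currency": "EUR", "page_number": 3},
    {"invoice_no": "INV-1", "invoice_date": "2025-09-12", "vendor_id": "V-100",
     "vendor_name": "Acme Supplies", "currency": "USD"},
]
for index, problems in vacuum_issues(invoices):
    print(index, problems)
```

The first invoice passes every check. The second fails four (free-text date, name mismatch, wrong currency, irrelevant page number), and the third is flagged as a duplicate of the first.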

When applied to automated data extraction, whether from invoices, patient forms, or shipping documents, the VACUUM model helps ensure that outputs are not just digitized but also actionable and trustworthy.

Challenges In Data Quality For Automation Workflows

Even with advanced automation tools, ensuring high-quality data remains a challenge. A 2025 global survey by Precisely revealed that 64% of organizations identify data quality as their biggest obstacle, while 67% admit they don’t fully trust their data when making business decisions. This gap underscores a simple truth: automation can’t deliver its full value without reliable data, and AI systems that run on unreliable data risk making flawed recommendations.

The risks are not theoretical. According to a report by Monte Carlo, a freight technology marketplace suffered millions in losses after corrupted automation data fed back into its core machine learning model, causing incorrect bidding predictions and marketplace failures. In just one year, this led to 400 data incidents, 2,400 hours of data downtime, and an estimated $2.7 million in efficiency losses. Ultimately, the company shut down after failing to resolve its pervasive data quality problems.

Common Challenges in Automated Workflows

- Unstructured data → Invoices, contracts, receipts, and forms often arrive in different formats, layouts, and languages. Without proper parsing, extracting accurate fields becomes difficult.

- Human error → Typos, missing values, or inconsistent labels create inaccuracies that ripple through automated processes.

- Scaling issues → What works for 100 documents may fail for 100,000, as small inconsistencies become large-scale problems.

- Lack of validation → Without built-in checks, automation lets incorrectly identified IDs, totals, or dates pass unnoticed.
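Why scaling turns small error rates into large problems can be shown with a back-of-the-envelope calculation. Assume, as a simplification, that each of a document’s n fields is extracted correctly with the same independent probability p (real errors are often correlated). Then a document is fully correct with probability p^n, and a batch of D documents contains an expected D · n · (1 − p) incorrect fields. The figures below (20 fields per document, 100,000 documents) are illustrative assumptions, not measurements:

$$
P(\text{document fully correct}) = p^{n}, \qquad 0.99^{20} \approx 0.818, \qquad 0.95^{20} \approx 0.358
$$

$$
\mathbb{E}[\text{incorrect fields}] = D \cdot n \cdot (1 - p) = 100{,}000 \times 20 \times (1 - 0.99) = 20{,}000
$$

So even 99% field-level accuracy leaves roughly 18% of 20-field documents with at least one incorrect field.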

Garbage In, Garbage Out (GIGO)

These challenges reflect a classic principle in computing: Garbage In, Garbage Out (GIGO). If flawed data enters a system, flawed results will follow. Automation doesn’t fix bad inputs; it amplifies their impact.

In document automation, GIGO shows up in several ways:

- Unstructured or messy formats – Scanned PDFs, handwritten forms, or poorly formatted invoices make accurate extraction difficult.

- Human error at the source – A single customer ID or invoice number typo can cause failed payments, misrouted shipments, or compliance flags.

- Inconsistent data – Dates, currencies, and units recorded in multiple formats create confusion when passed into automated systems.

- Scaling issues – One incorrect record might be manageable manually, but when automation replicates that error across thousands of documents, the business impact multiplies quickly.

Take a few real-world examples:

- Invoice processing → An OCR engine misreads “$1,249.99” as “$12,499.9.” Without validation, that inflated number could be pushed straight into an ERP, skewing financial records.

- Healthcare forms → A patient ID may be misinterpreted due to poor scan quality. This error could lead to mismatched records or even compliance issues.

- Shipping documents → A smudged barcode translates into the wrong delivery address, creating costly delays and unhappy customers.
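A validation rule that would catch the invoice misread above can be sketched in a few lines of Python. This assumes the invoice’s line items should add up to its total (real invoices may add tax, shipping, or discounts that a production check would include) and that amounts use US-style separators. The function names are illustrative.

```python
from decimal import Decimal, InvalidOperation


def parse_amount(text: str) -> Decimal:
    """Parse an amount such as "$1,249.99" (assumes US-style separators)."""
    cleaned = text.replace("$", "").replace(",", "").strip()
    try:
        return Decimal(cleaned)
    except InvalidOperation as exc:
        raise ValueError(f"not a monetary amount: {text!r}") from exc


def check_total(line_items: list[str], extracted_total: str,
                tolerance: Decimal = Decimal("0.01")) -> list[str]:
    """Return validation problems for an extracted invoice total."""
    problems = []
    total = parse_amount(extracted_total)
    # USD amounts should carry exactly two decimal places; "12,499.9" fails.
    if total.as_tuple().exponent != -2:
        problems.append(f"total {extracted_total!r} does not have two decimal places")
    # Cross-check the total against the sum of the extracted line items.
    expected = sum((parse_amount(item) for item in line_items), Decimal("0"))
    if abs(total - expected) > tolerance:
        problems.append(f"total {total} does not match line-item sum {expected}")
    return problems


items = ["$1,000.00", "$249.99"]
print(check_total(items, "$1,249.99"))  # []
print(check_total(items, "$12,499.9"))  # two problems: decimals and line-item mismatch
```

Using `Decimal` rather than `float` keeps cent values exact, so the comparison against the line-item sum is not thrown off by binary rounding.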

The lesson is clear: without strong quality controls, validation rules, data-cleaning steps, and human-in-the-loop (HITL) review, automation doesn’t just pass along mistakes; it magnifies them. Instead of saving time, organizations spend more on corrections, lost revenue, and compliance penalties.

ECCMA Standards And ISO 8000 For Global Data Quality

When it comes to data quality in automation, frameworks are only part of the picture. To ensure consistency and compliance across industries, many organizations turn to the **Electronic Commerce Code Management Association (ECCMA)**, which develops and promotes data quality standards that support consistency, interoperability, and compliance.

ECCMA is best known for leading the development of ISO 8000, the international standard for data quality. This standard defines best practices for creating, managing, and exchanging high-quality master data across industries. In automated workflows, ECCMA standards provide a structured way to ensure that extracted data isn’t just machine-readable but also semantically correct and globally interoperable.

Why do ECCMA data quality standards matter for document processing and automation?

- Consistency across systems → ECCMA standards help data extracted from documents (like invoices or contracts) flow seamlessly between ERPs, CRMs, and accounting platforms without mismatches.

- Accuracy & reliability → By defining clear rules for formatting and structure, ECCMA reduces costly errors caused by ambiguous or inconsistent data.

- Compliance-ready → Global standards support audit trails and regulatory requirements, which are especially important in finance, healthcare, and logistics.

For example, an invoice processed through an ECCMA-aligned system won’t just extract the “total amount”; it will format and tag that value so that downstream accounting software can instantly recognize and reconcile it.
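The “format and tag” idea can be sketched in Python. This is not ISO 8000 syntax or any ECCMA tool: the property identifier below is a hypothetical placeholder for a concept ID an organization would take from a shared data dictionary, and the currency is expressed as an ISO 4217 code. The point is that downstream software can match on the identifier and the normalized value rather than on a free-text label.

```python
import json
from dataclasses import asdict, dataclass
from decimal import Decimal


@dataclass(frozen=True)
class TaggedValue:
    property_id: str    # hypothetical concept ID from a shared data dictionary
    property_name: str  # human-readable label, for convenience only
    value: str          # normalized decimal string, so no float rounding
    unit: str           # ISO 4217 currency code


def tag_total(raw_total: str, currency: str) -> TaggedValue:
    """Normalize an extracted total to two decimals and tag it with an identifier."""
    amount = Decimal(raw_total.replace(",", "")).quantize(Decimal("0.01"))
    return TaggedValue(
        property_id="EXAMPLE-PROP-0001",  # placeholder, not a real identifier
        property_name="invoice total amount",
        value=str(amount),
        unit=currency.upper(),
    )


tagged = tag_total("1,249.99", "usd")
print(json.dumps(asdict(tagged)))
# {"property_id": "EXAMPLE-PROP-0001", "property_name": "invoice total amount", "value": "1249.99", "unit": "USD"}
```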

At Parseur, we align with these best practices by combining AI-powered extraction with standardization and validation, ensuring that the data flowing into your workflows is both clean and compliant.

VACUUM vs ECCMA: Two Sides of Data Quality

| Factor | VACUUM Model | ECCMA Standards |
|---|---|---|
| Focus | Conceptual framework for assessing data quality. | International standards for creating, managing, and exchanging high-quality data (ISO 8000). |
| Scope | Evaluates whether extracted data is fit for use. | Provides globally recognized rules for interoperability and compliance. |
| Strength | Flexible and easy to apply across industries and workflows. | Ensures standardization across systems and borders. |
| Application in automation | Used to measure whether data extracted from invoices, forms, or contracts meets quality benchmarks. | Ensures extracted data is structured in a universally consistent format that downstream systems can interpret. |

AI in Data Quality Automation: Smarter Validation And Error Detection

Artificial intelligence is transforming how businesses ensure data quality in automation. Traditional methods, like manual checks or rule-based validation, are effective but limited in scale. AI, on the other hand, brings flexibility, adaptability, and continuous improvement.

How AI enhances data quality:

- Contextual validation → AI models can interpret meaning, catching subtle errors like a mismatched invoice date or an incorrect currency code.

- Entity recognition → Machine learning identifies specific fields (invoice totals, patient IDs, shipment addresses) across unstructured layouts.

- Error detection & correction → AI can spot anomalies (e.g., a tax calculation that doesn’t add up) and automatically suggest corrections.

- Learning over time → By incorporating feedback loops, AI-powered systems get smarter with every document processed.

- Multilingual capability → AI handles different languages, formats, and scripts, ensuring global consistency in data capture.
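AI-based anomaly detection is usually model-driven, but the underlying idea of flagging values that deviate sharply from what is normal can be illustrated with a simple statistical stand-in. The Python sketch below is not machine learning: it computes a modified z-score based on the median absolute deviation (MAD) of a vendor’s past invoice totals and flags anything above 3.5, a commonly used cutoff. The history values are hypothetical.

```python
from statistics import median


def is_anomalous(amount: float, history: list[float], threshold: float = 3.5) -> bool:
    """Flag an amount whose modified z-score against past amounts exceeds threshold."""
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # All past values identical: any different value is unusual.
        return amount != med
    # 0.6745 scales MAD so the score is comparable to a standard z-score.
    modified_z = 0.6745 * (amount - med) / mad
    return abs(modified_z) > threshold


history = [1180.00, 1249.99, 1210.50, 1302.75, 1199.00, 1275.25]
print(is_anomalous(1249.99, history))   # False
print(is_anomalous(12499.90, history))  # True
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value in the history barely moves them.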

In short, AI doesn’t just extract data; it actively works to improve accuracy, consistency, and trustworthiness. This makes it a critical enabler for businesses aiming to scale automation without sacrificing quality.

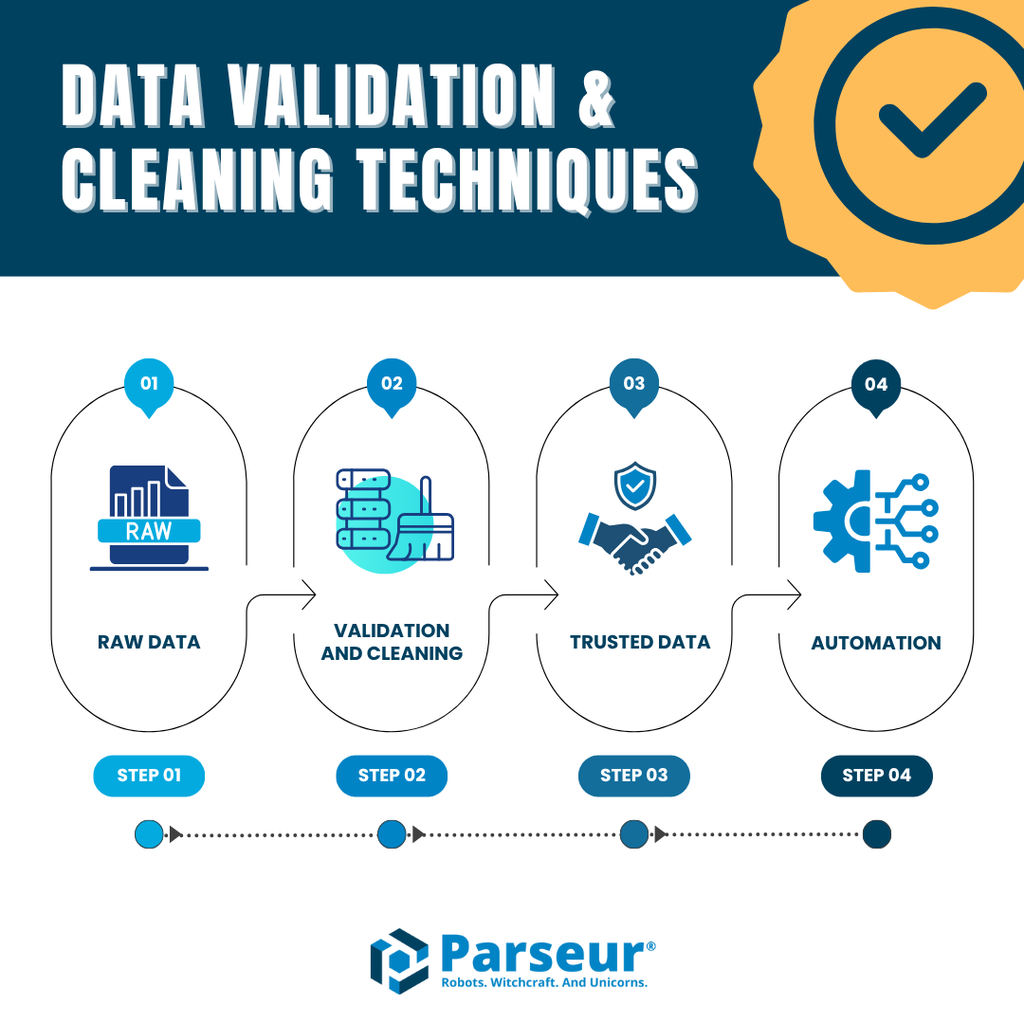

Data Validation & Cleaning Techniques

To maintain data quality in automation, it’s not enough to extract information; you must also validate and clean it. Without these steps, even the most advanced OCR or AI engine risks letting errors slip into your workflows.

Here are the most effective techniques:

- Automatic field checks → Validate that extracted fields match expected formats. For example, invoice totals must be numeric, dates must follow a standard format, and patient IDs must match a known schema.

- Duplicate detection → Prevent redundant records from entering your system, reducing confusion and eliminating unnecessary processing.

- Normalization → Standardize values like dates, currencies, phone numbers, and addresses so they are consistent across platforms (e.g., converting “12/09/25”, read as day/month/year, and “2025-09-12” into one uniform format).

- Error flagging & exceptions → Identify anomalies, such as mismatched totals or missing line items, before they affect downstream systems.

- Human-in-the-loop (HITL) → For edge cases or ambiguous documents, a quick human review ensures accuracy without slowing overall automation.
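Normalization, duplicate detection, and human-in-the-loop routing can be combined in a short Python sketch. One assumption matters here: “12/09/25” is ambiguous (12 September or 9 December), so the sketch requires the caller to state each source’s date convention rather than guessing. The record fields and the review list standing in for a review queue are hypothetical.

```python
from datetime import datetime
from typing import Optional

# Formats to try, keyed by the date convention a source is known to use (assumption).
DATE_FORMATS = {
    "day_first": ("%Y-%m-%d", "%d/%m/%y", "%d/%m/%Y"),
    "month_first": ("%Y-%m-%d", "%m/%d/%y", "%m/%d/%Y"),
}


def normalize_date(raw: str, convention: str) -> Optional[str]:
    """Return an ISO 8601 date (YYYY-MM-DD), or None if no known format matches."""
    for fmt in DATE_FORMATS[convention]:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def process(records: list[dict], convention: str) -> tuple[list[dict], list[dict]]:
    """Normalize dates, drop duplicates, and queue unparseable records for review."""
    clean, needs_review = [], []
    seen = set()
    for rec in records:
        iso = normalize_date(rec["date"], convention)
        if iso is None:
            needs_review.append(rec)  # human-in-the-loop: a person resolves this one
            continue
        # Normalize the key too, so "inv-7" and "INV-7" count as the same invoice.
        key = (rec["invoice_no"].strip().upper(), iso)
        if key in seen:
            continue  # duplicate once formats are normalized
        seen.add(key)
        clean.append({**rec, "invoice_no": key[0], "date": iso})
    return clean, needs_review


records = [
    {"invoice_no": "INV-7", "date": "12/09/25"},
    {"invoice_no": "inv-7", "date": "2025-09-12"},
    {"invoice_no": "INV-8", "date": "Sept 12"},
]
clean, needs_review = process(records, "day_first")
print(clean)         # [{'invoice_no': 'INV-7', 'date': '2025-09-12'}]
print(needs_review)  # [{'invoice_no': 'INV-8', 'date': 'Sept 12'}]
```

With `convention="month_first"`, the first record would instead normalize to 2025-12-09 and would no longer count as a duplicate, which is why the source’s date convention has to be known before normalizing.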

Tools like Parseur make these steps practical. With template-free extraction, built-in validation, and integrations into ERPs, CRMs, and accounting platforms, Parseur enables businesses to scale automation while keeping their data clean, accurate, and automation-ready.

For a deeper dive, check out our guides on data validation and data cleaning techniques to see how these practices strengthen automation pipelines.

How Parseur Ensures Data Quality

When it comes to maintaining data quality in automation, Parseur takes a practical, business-first approach. Instead of relying solely on OCR or rigid templates, Parseur combines AI-powered extraction with built-in validation and seamless integrations. It also enforces data quality by ensuring that the output from AI matches the exact fields requested. Users can further refine results by adding custom instructions, tailoring the data as closely as possible to their needs.
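Checking that a model’s output contains exactly the requested fields is a generic technique. Parseur’s internal implementation is not described here, so the following Python sketch is only an illustration with hypothetical field names. It parses the model’s JSON output and reports any requested fields that are missing and any extra fields that were not requested.

```python
import json


def check_fields(ai_output: str, requested: set[str]) -> dict[str, list[str]]:
    """Compare a model's JSON output against the set of requested field names."""
    try:
        data = json.loads(ai_output)
    except json.JSONDecodeError:
        return {"error": ["output is not valid JSON"]}
    if not isinstance(data, dict):
        return {"error": ["output is not a JSON object"]}
    returned = set(data)
    return {
        "missing": sorted(requested - returned),     # asked for but not returned
        "unexpected": sorted(returned - requested),  # returned but never asked for
    }


requested = {"invoice_no", "total", "due_date"}
output = '{"invoice_no": "INV-7", "total": "1249.99", "notes": "Thank you!"}'
print(check_fields(output, requested))
# {'missing': ['due_date'], 'unexpected': ['notes']}
```

A record with any missing or unexpected field could then be rejected or sent for review instead of being passed downstream.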

Key features that strengthen data quality:

- Template-free extraction → Adapts to varied document formats (invoices, receipts, contracts, shipping forms) without constant rule-building.

- Accuracy benchmarks → Parseur consistently delivers 90–99% field-level accuracy in document parsing, even across diverse layouts and unstructured data.

- Validation & cleaning → Automatically checks for duplicates, formatting errors, and anomalies, ensuring data is reliable before entering downstream systems.

- Integrations → Send cleaned, structured data directly into Google Sheets, SQL databases, ERPs, CRMs, or accounting platforms without extra middleware.

Real-world impact:

- In finance, Parseur helps teams extract invoice totals, tax IDs, and payment details with near-perfect accuracy, reducing manual data entry time by up to 80%.

- In logistics, companies use Parseur to parse bills of lading and delivery receipts, ensuring shipment IDs and addresses are captured correctly and flow directly into tracking systems.

By aligning with best practices like VACUUM and ECCMA data quality standards, Parseur ensures that businesses don’t just automate document processing; they automate it with accuracy, reliability, and built-in compliance.

Conclusion

Automation promises speed, scalability, and efficiency, but only if the data driving it is clean, consistent, and trustworthy. As we’ve seen, poor data quality can erode efficiency, inflate costs, and undermine customer trust, while strong practices, such as the VACUUM framework, ECCMA standards, AI-powered validation, and human-in-the-loop review, transform automation into a true business advantage.

Ultimately, automation is only as effective as its data. By investing in data quality, businesses ensure that every automated decision is accurate, compliant, and reliable.

With Parseur, you get automation that’s accurate, reliable, and aligned with global standards. Whether you’re handling invoices, patient forms, or shipping documents, Parseur ensures your automation runs on high-quality data.

Frequently Asked Questions

Ensuring data quality in automation is a complex but vital task. Businesses often ask how frameworks, standards, and tools fit together to keep automation accurate and trustworthy. Below are answers to some of the most common questions:

What is data quality in automation?

Data quality in automation refers to the accuracy, consistency, and reliability of data flowing through automated systems. High-quality data ensures workflows run smoothly, while poor data leads to errors, inefficiencies, and compliance risks.

Why is data quality important for automation?

Automation relies on input data to make decisions. If data is flawed, automation will amplify errors at scale. Strong data quality reduces costs, boosts efficiency, and builds trust in automated processes.

What is the VACUUM model in terms of data quality?

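The VACUUM model defines six dimensions of data quality: Valid, Accurate, Consistent, Uniform, Unified, and Model. Together, they check that data follows the expected format, reflects reality, agrees across sources, is free of duplicates, uses standardized units and formats, and is relevant to the task. The model provides a framework to evaluate whether extracted data is trustworthy and usable in automation.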

What are ECCMA data quality standards?

ECCMA develops global data quality standards, including ISO 8000. These standards ensure data consistency, interoperability, and industry compliance, making automation outputs more reliable and audit-ready.

How can businesses improve data quality in automation?

Companies can improve data quality through validation, cleaning, normalization, duplicate detection, and human-in-the-loop review. AI-driven tools like Parseur simplify these steps, ensuring automation runs on accurate and actionable data.

How does Parseur ensure data quality?

Parseur uses AI-powered, template-free extraction with built-in validation, cleaning, and integrations. It aligns with best practices like VACUUM and ECCMA to deliver accurate, reliable, and scalable automation across industries.
