Key Takeaways

- By 2030, HITL will be a core design feature for trusted and explainable AI.

- Regulations will require human oversight in sensitive AI decisions.

- Human-AI synergy will drive ethical, scalable automation.

A Hybrid Future For AI And Humans

As we approach 2030, one truth becomes increasingly clear: the most successful AI systems will not be the fastest or most autonomous. They will be the most trustworthy. That trust comes from balance, pairing automation speed with human expertise.

Early waves of AI adoption taught us that while autonomous systems can be robust, they often carry risks like bias, lack of transparency, and unpredictable behavior. These challenges have reignited interest in HITL systems and human-centered AI, not as a fallback but as a forward-looking design strategy.

According to Oodaloop, it is estimated that by 2026 over 90% of online content will be generated by AI rather than humans. This shift underscores the importance of trust and oversight in automated systems.

To understand the foundations of this concept, explore our guide on Human-in-the-Loop AI: Definition and Benefits, a practical introduction to HITL's current use and business value.

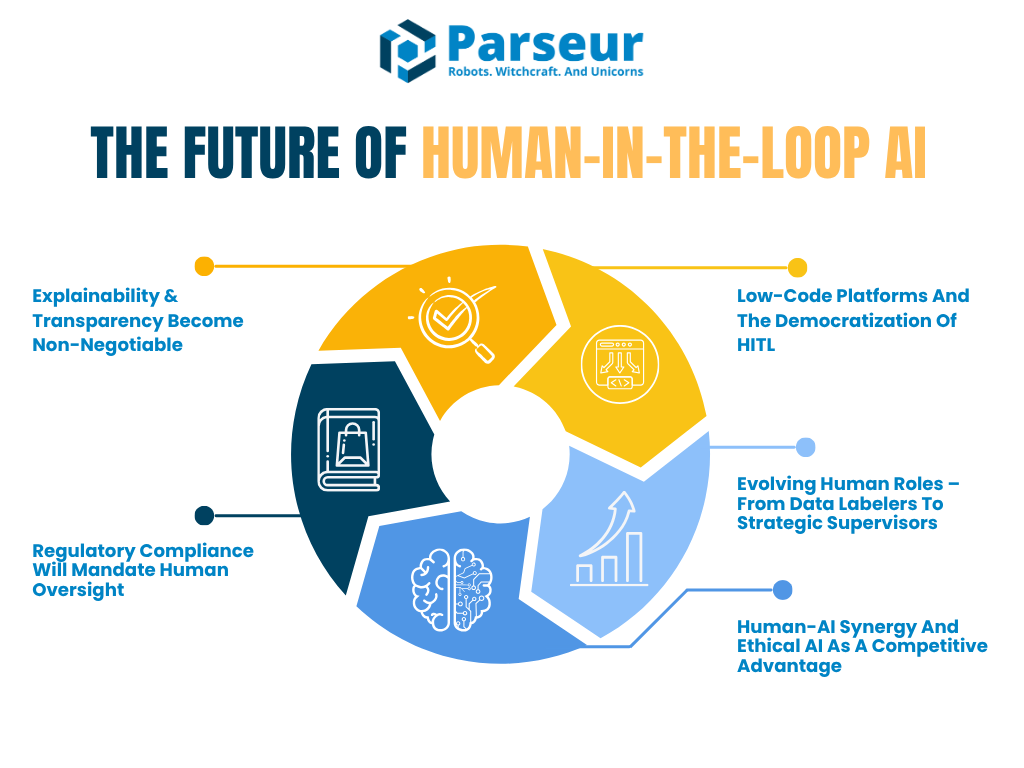

This article will explore how HITL will evolve from now to 2030. It will focus on five key trends shaping the future of human-AI collaboration:

- The demand for explainable and auditable AI

- The rise of regulatory compliance requiring human oversight

- The growth of low-code platforms that make HITL implementation more straightforward

- The evolution of human roles from data labelers to AI supervisors

- How human-AI synergy will become a competitive advantage

According to VentureBeat, even leading tech visionaries like Reid Hoffman predict a future shaped by AI superagency. As he put it, “Humans not using AI will be replaced by humans using AI,” positioning AI as a productivity multiplier rather than a threat.

Supporting this, PwC’s 2026 Global AI Jobs Barometer found that since the proliferation of generative AI in 2022, productivity growth in AI-exposed industries has nearly quadrupled, from 7% (2018-2022) to 27% (2018-2024). The finding shows how AI significantly amplifies human productivity across sectors like financial services and software publishing.

Organizations are no longer forced to choose between automation and assurance. The future lies in hybrid AI architectures that embed human judgment at key moments, whether through in-the-loop or on-the-loop models.

Trend 1: Explainability & Transparency Become Non-Negotiable

As AI systems continue to influence real-world decisions, the question is no longer whether AI can perform tasks accurately. The genuine concern is whether humans can understand and explain those decisions. This is where explainable AI (XAI) and transparency come into play.

In the coming years, explainability will shift from a best practice to a requirement, especially in high-stakes sectors like finance, healthcare, insurance, and law. Human-in-the-loop workflows will not just support oversight. They will enable organizations to meet regulatory and ethical demands by placing people in roles that interpret, validate, and explain AI outputs.

According to Gartner, by 2026, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications. This highlights the urgent need for explainability and human oversight in AI systems across industries such as healthcare, legal, and financial services.

A great example is the EU AI Act, which already requires that high-risk AI applications include human oversight and the ability to explain outcomes. Meanwhile, the NIST AI Risk Management Framework warns that a lack of clarity around HITL roles and opaque decision-making remain serious challenges. Soon, frameworks like NIST’s will formalize how human review is designed and documented.

Future outlook: human-in-the-loop with explanation interfaces

By 2030, AI tools will likely come with built-in “explanation interfaces”. These interfaces will help human reviewers make sense of model decisions. Imagine a credit approval system that scores an applicant and shows the top three reasons the AI made that call. This gives a reviewer the context needed to validate fairness and accuracy.
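The credit approval scenario above can be sketched in a few lines. This is a minimal illustration rather than a real scoring model: the feature names, weights, and threshold are all hypothetical, and the "reasons" are simply the largest per-feature contributions of a toy linear score.

```python
from dataclasses import dataclass

# Hypothetical weights for a toy linear credit model (illustrative only).
WEIGHTS = {
    "payment_history": 0.40,
    "debt_to_income": -0.35,
    "credit_age_years": 0.10,
    "recent_inquiries": -0.15,
}
APPROVAL_THRESHOLD = 0.0  # assumed cut-off for approval


@dataclass
class Explanation:
    score: float
    approved: bool
    top_reasons: list  # (feature_name, contribution) pairs, largest impact first


def explain_decision(features: dict, top_n: int = 3) -> Explanation:
    """Score an applicant and surface the features that moved the score most."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = sum(contributions.values())
    # Rank by absolute impact so strong negatives show up alongside positives.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return Explanation(score, score >= APPROVAL_THRESHOLD, ranked[:top_n])


# Assumed, pre-normalized feature values for one applicant.
applicant = {
    "payment_history": 1.0,
    "debt_to_income": 2.0,
    "credit_age_years": 0.5,
    "recent_inquiries": 1.0,
}
result = explain_decision(applicant)
for name, contribution in result.top_reasons:
    print(f"{name}: {contribution:+.2f}")
```

A reviewer seeing this output would know that the applicant's debt-to-income ratio drove the rejection, which is the context needed to check the decision for fairness.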

HITL will evolve from manual review at every step to strategic validation at critical points. It will no longer be about approving outputs line by line. It will be about confirming that the logic behind the AI’s outputs aligns with business values, compliance goals, and fairness standards.

Industries where this is already happening

The healthcare and financial sectors are early movers. In these industries, explainability is not just preferred. It is legally required. For example, a 2026 Deloitte Tech Trends report noted, “The more complexity is added, the more vital human workers become.” This highlights a key paradox in AI: as automation becomes more powerful, skilled human oversight becomes more critical, not less.

This concept is echoed in the rise of “human-on-the-loop” models. In these systems, humans do not need to intervene at every moment. Instead, they supervise AI operations continuously and retain the ability to take over when necessary, similar to how pilots monitor autopilot systems.

Why it matters

The trend toward explainability reinforces a bigger truth: businesses cannot scale AI without trust. And that trust comes from transparency. In the future, we may even see organizations required to maintain “explanation logs” or reports summarizing how decisions were made, with humans assigned to audit or sign off on those explanations.
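One way to picture such an "explanation log" is as a list of records that each pair an AI decision with its stated reasons and a human sign-off. The sketch below is an assumption about what a record might hold; the field names and the `pending_review` helper are hypothetical and not taken from any regulation or product.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ExplanationLogEntry:
    decision_id: str
    model_output: str
    reasons: list
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    reviewer: Optional[str] = None
    signed_off: bool = False

    def sign_off(self, reviewer: str) -> None:
        """Record which human audited and accepted the explanation."""
        self.reviewer = reviewer
        self.signed_off = True


def pending_review(log: list) -> list:
    """Return the entries that no human has signed off on yet."""
    return [entry for entry in log if not entry.signed_off]


log = [
    ExplanationLogEntry("loan-001", "rejected", ["high debt-to-income ratio"]),
    ExplanationLogEntry("loan-002", "approved", ["long payment history"]),
]
log[0].sign_off("auditor@example.com")

# Entries serialize to JSON, so they can be stored or exported for an audit.
print(json.dumps(asdict(log[0]), indent=2))
print(len(pending_review(log)), "entry still awaiting review")
```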

These human roles are not simply reactive. They will shape the evolution of AI systems by identifying flaws, offering context, and providing the ethical grounding AI cannot.

Trend 2: Regulatory Compliance Will Mandate Human Oversight

As AI capabilities advance, so do the legal and ethical responsibilities surrounding them. Global regulators are placing greater emphasis on accountability and human oversight in automated systems. Between 2026 and 2030, we can expect a wave of regulations that formally require Human-in-the-Loop processes for many high-impact AI applications.

Governments and standards bodies like the European Union, the United States, and NIST align on one key point: AI should never be a black box. People affected by algorithmic decisions must be able to understand them, challenge them, and, in many cases, request a human review.

In fact, according to Naaia, more than 700 AI-related bills were introduced in the United States in 2024 alone, with over 40 new proposals early in 2026, reflecting a rapidly evolving regulatory landscape focused on AI transparency and human oversight.

Under GDPR Article 22, individuals can request human intervention when subject to automated decision-making. The EU AI Act builds on this by requiring that humans play a meaningful role in overseeing and controlling specific high-risk systems. This is not just a trend. It is becoming a legal standard.

Future outlook: compliance-driven HITL systems

By 2030, many companies will implement what could be called "compliance HITL". These are governance workflows explicitly designed to meet regulatory demands. For example:

- Human auditors will log and review AI decisions on a scheduled basis.

- Some organizations will set up AI oversight teams to monitor live systems, similar to control rooms in aviation or cybersecurity.

- Human checkpoints will be added to decision pipelines where risk is high or fairness is critical.

Tools and platforms will adapt to make this easier. Companies may use dashboards that track model accuracy and how often human reviewers override AI decisions. This kind of traceability will become a compliance metric in itself.
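The override metric described above is simple to compute once each reviewed decision is stored next to the human's final call. The sketch below assumes a hypothetical record format of `(ai_decision, human_decision)` pairs; a real dashboard would read these from an audit store.

```python
def override_rate(reviews: list) -> float:
    """Share of reviewed decisions where the human changed the AI's outcome.

    `reviews` is a list of (ai_decision, human_decision) pairs (assumed format).
    """
    if not reviews:
        return 0.0
    overrides = sum(1 for ai, human in reviews if ai != human)
    return overrides / len(reviews)


# Illustrative review history, not real data.
reviews = [
    ("approve", "approve"),
    ("approve", "reject"),   # the reviewer overrode the AI here
    ("reject", "reject"),
    ("approve", "approve"),
]
print(f"Override rate: {override_rate(reviews):.0%}")
```

A rising override rate can signal model drift or a gap in training data, which is why tracking it over time is useful alongside accuracy.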

Expert insight: the rise of hybrid AI governance

Industry leaders are already embracing hybrid frameworks that blend automation with human oversight. According to Gartner, 67% of mature organizations have created dedicated AI teams and introduced new roles such as AI Ethicists, Model Managers, and Knowledge Engineers to ensure the responsible deployment of AI systems.

In sectors like data and analytics, these roles are becoming standard as enterprises recognize that technical oversight and ethical accountability are central to AI's success. We can anticipate titles such as “AI Auditor,” “AI Risk Manager,” or “Human in the Loop Supervisor” becoming widespread by 2030, signaling a strategic shift toward embedding human oversight in AI governance.

Explainability meets regulation

Regulators will not only demand that humans are involved. They will expect that those humans can explain why decisions were made. This connects back to the first trend. Documentation and audit trails will become part of the standard operating procedure. The NIST AI RMF already recommends that organizations empower humans to override AI and monitor outputs regularly. In the future, failing to do so may result in regulatory penalties or loss of certification.

Some organizations may even pursue "AI trust certifications" to show that their systems are governed responsibly, with human oversight built in from the start.

Trend 3: Low-Code Platforms And The Democratization Of HITL

Historically, building a HITL workflow required engineering resources, custom integrations, and deep AI expertise. This made it difficult for smaller teams or non-technical users to adopt the model. But that barrier is rapidly disappearing. From 2026 onward, low-code and no-code platforms are expected to make HITL accessible to a much wider audience.

These platforms allow users to integrate human checkpoints into AI workflows without writing a single line of code. Already, tools like UiPath, Microsoft Power Automate, and Amazon A2I offer drag-and-drop features that include human review as part of decision automation.

Future outlook: AI workflows built by business users

Expect HITL features to become standard components of AI platforms in the next few years. According to AmplifAI, 70% of customer experience (CX) leaders plan to integrate Generative AI across touchpoints by 2026, leveraging tools that often include HITL features to ensure quality and oversight.

This democratization is a game-changer. It allows departments like operations, legal, or finance to implement their own AI governance layers. With more people building and monitoring AI, organizations can scale faster and more safely.

In the future, even LLMs could recommend where to insert a human-in-the-loop step, based on model confidence or data risk. Platforms may also include monitoring dashboards where humans can view decision summaries, flag issues, and improve future AI behavior through feedback.

Crowd-powered human review

Another part of this trend is the rise of crowd-sourced or on-demand human validation. Services like Amazon Mechanical Turk or BPO partners can be integrated into AI pipelines to offer scalable HITL at a lower cost. This enables companies to balance automation and quality assurance without building large internal teams.

Imagine a scenario where an e-commerce company uses AI to process product reviews but routes all suspicious entries to a human validation queue handled by a freelance HITL team. It is fast, efficient, and accurate.
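The routing step in that scenario might look like the sketch below. It assumes an upstream model has already assigned each review a suspicion score between 0 and 1; the threshold value and the function name are hypothetical.

```python
from collections import deque

SUSPICION_THRESHOLD = 0.7  # assumed cut-off; would be tuned in practice


def route_reviews(scored_reviews, threshold: float = SUSPICION_THRESHOLD):
    """Split reviews into auto-published items and a human validation queue.

    `scored_reviews` is an iterable of (text, suspicion_score) pairs, where
    the score comes from an upstream model (not shown here).
    """
    auto_published = []
    human_queue = deque()  # first in, first out for the review team
    for text, suspicion in scored_reviews:
        if suspicion >= threshold:
            human_queue.append(text)
        else:
            auto_published.append(text)
    return auto_published, human_queue


# Illustrative inputs only.
scored = [
    ("Great blender, works as described.", 0.05),
    ("BEST PRODUCT EVER!!! Visit my site for a discount", 0.93),
    ("Stopped working after two weeks.", 0.20),
]
published, queue = route_reviews(scored)
print(f"{len(published)} published automatically, {len(queue)} sent to humans")
```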

Industry momentum toward accessible HITL

Platforms should enable automation through low-code interfaces while still providing code-level customization. This trend suggests that HITL will be as easy to implement as setting up an email campaign or building a website.

This also aligns with Parseur’s evolution. Parseur offers the flexibility to create your own templates if you don't want to rely on the AI engine. As HITL becomes a standard part of intelligent automation, platforms like Parseur will be key in scaling responsible, human-verified workflows across industries.

Trend 4: Evolving Human Roles – From Data Labelers To Strategic Supervisors

As AI systems improve, the nature of human participation is shifting. In the early stages of AI adoption, humans in the loop were often tasked with repetitive work like labeling data or validating basic outputs. In the future, these tasks will increasingly be handled by AI or outsourced to crowd workers. This does not mean humans are being pushed out. Instead, their roles are becoming more strategic, specialized, and value-driven.

According to Statistica’s 2026 report, humans handled 47% of work tasks, while machines accounted for 22%, with 30% requiring a combination of both. By 2030, businesses expect a more balanced division, with machines taking on a larger share.

Future outlook: HITL 2.0 as AI supervisors and risk managers

We are entering the age of “Human-in-the-Loop 2.0.” In this model, humans are not just reviewers. They are supervisors, coaches, and AI risk managers.

For example, a doctor overseeing a medical AI might only step in when the system shows uncertainty or flags an anomaly. That human input is then used not just to complete a task, but to retrain the AI model and improve accuracy for future predictions. This is augmented intelligence in action, where humans and AI learn together.
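A minimal sketch of that loop is shown below: confident predictions pass through, uncertain ones go to a human, and the human's answer is kept as a future training example. The class name, the 0.85 threshold, and the `ask_human` callback are all assumptions made for illustration; the retraining itself is out of scope here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HitlLoop:
    confidence_threshold: float = 0.85  # assumed value
    retraining_examples: list = field(default_factory=list)

    def decide(
        self,
        case_id: str,
        prediction: str,
        confidence: float,
        ask_human: Callable[[str, str], str],
    ) -> str:
        """Return the AI prediction if confident, else defer to a human."""
        if confidence >= self.confidence_threshold:
            return prediction
        human_label = ask_human(case_id, prediction)
        # Keep the expert's answer so it can feed a later retraining run.
        self.retraining_examples.append((case_id, human_label))
        return human_label


def doctor_review(case_id: str, suggested: str) -> str:
    """Stand-in for a real review interface; always returns a fixed label."""
    return "benign"


loop = HitlLoop()
print(loop.decide("scan-17", "benign", 0.97, doctor_review))
print(loop.decide("scan-18", "malignant", 0.55, doctor_review))
print(loop.retraining_examples)
```

Only the low-confidence case reaches the doctor, so expert time is spent where the model is least reliable.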

The World Economic Forum reports that by 2030, 60% of employers expect digital transformation to drive demand for analytical and leadership skills, while manual skills decline.

This is the “human-on-the-loop” model at work: humans monitor AI systems continuously and intervene only when necessary. Think of it like air traffic control for automated systems.

Humans augmented by AI

Just as AI is embedded in workflows, it will also assist the humans overseeing them. Imagine an AI tool that proactively alerts a compliance officer: "This decision is inconsistent with past rulings. Please review." In the future, humans will not only be in the loop. AI will also help them manage the loop more intelligently.

New titles and responsibilities

By 2030, we may see job titles like:

- AI Feedback Specialist

- Algorithm Ethics Officer

- Model Behavior Coach

- Human-in-the-Loop Supervisor

These roles will be responsible for quality assurance and guiding the evolution of AI systems. This is a shift from task execution to system stewardship.

According to Deloitte’s 2026 Global Human Capital Trends report, 57% of organizational leaders say they must teach employees how to think with machines, not just use them. This highlights a shift in human roles from task execution to strategic oversight.

Trend 5: Human-AI Synergy And Ethical AI As A Competitive Advantage

The future of artificial intelligence will not be about humans versus machines. It will be about humans working with machines to solve problems more efficiently, ethically, and intelligently. As companies look toward 2030, the most competitive organizations will be those that master the balance between automation and human judgment.

This shift is known as augmented intelligence, where the strengths of AI (speed, scale, pattern recognition) combine with the strengths of humans (ethics, empathy, domain expertise). The result is more intelligent decision-making across the board.

According to Gartner, 55% of organizations have established an AI board or governance body to oversee AI initiatives, reflecting the growing importance of human oversight and ethical governance in AI deployment.

Why synergy will win

By 2026, the idea of "human-in-the-loop" will no longer be an optional safety net. It will become a core feature of trustworthy AI systems. From loan approvals to hiring decisions to healthcare recommendations, human validation will drive accuracy and trust.

Organizations will increasingly market their use of HITL as a brand differentiator. Much like “sustainably sourced” or “certified organic” labels, we may see terms like “Human-Verified AI” or “AI with Human Oversight” appear in product messaging, particularly in sensitive industries like healthcare, finance, and education.

Companies that can say, "People review our AI," will likely earn more user trust, especially in an era where AI mistakes can go viral and damage reputation.

Ethical AI as a business strategy

High-profile AI failures have proven how quickly things can go wrong with unchecked automation. A misclassified resume, a biased legal risk score, or a flawed medical diagnosis can lead to lawsuits, media backlash, and consumer distrust.

By integrating human review into high-stakes AI decisions, businesses meet regulatory expectations and signal accountability to customers. Ethical AI is no longer just a moral issue. It is a business necessity.

Certifications and auditable systems

We may even see AI systems certified for HITL compliance. Just as ISO standards validate safety and quality for industrial systems, future AI platforms could carry labels proving that decisions can be reviewed, explained, and reversed by a human.

This trend creates a clear competitive edge. Companies with transparent, auditable, and human-guided AI will win the trust of customers, investors, and regulators.

According to the NIST AI Risk Management Framework, organizations must design AI systems with built-in oversight. That guidance highlights human-in-the-loop and human-on-the-loop roles as key to reducing systemic risks. In the coming years, these oversight functions may be required by law for high-impact AI use cases.

Human-in-the-loop by design

The concept of "HITL by design" is likely to gain momentum. Rather than adding human review as a patch later, AI systems will be built with HITL as a foundational element. This reflects a shift toward responsible AI, where fairness, transparency, and accountability are engineered into the product lifecycle.

Industry leaders are already embracing this mindset.

Ultimately, it is not going to be about man versus machine. It is going to be about man with machines. — Satya Nadella, CEO of Microsoft

That perspective shapes product development across multiple sectors, from enterprise software to consumer tech.

Toward 2030: Humans And AI Hand-In-Hand

As AI accelerates, one message becomes clear: the future belongs to organizations that balance automation with human expertise.

According to PwC, AI has the potential to contribute up to $15.7 trillion to the global economy by 2030.

HITL will not fade into the background as AI advances. Instead, it will evolve into a strategic necessity, built directly into the architecture of ethical, explainable, and trusted AI systems.

By 2030, human oversight will be a foundational design principle, not a temporary safeguard. HITL will be expected in everything from regulatory frameworks to platform capabilities. The companies that adopt it early will be positioned for long-term success.

Think of it this way: just as seatbelts became a standard feature in every vehicle, HITL mechanisms will become standard in every serious AI deployment. They will protect users, prevent errors, and ensure innovation happens responsibly and inclusively.

Now is the time to prepare for that future. According to Amazon, Europe could see near-universal AI adoption by 2030.

Business leaders and tech strategists should begin by:

- Investing in explainable AI tools and interfaces

- Creating human oversight roles across teams

- Choosing platforms that allow for easy human validation steps

- Designing processes where humans and AI collaborate by default

As regulations tighten and public trust becomes more critical, these practices will set the groundwork for resilience, agility, and ethical leadership.
