Key Takeaways:

- HITL AI combines human judgment with machine intelligence to ensure accuracy, fairness, and trust in high-stakes workflows.

- Industries such as healthcare, finance, and customer service utilize HITL to minimize errors, comply with regulatory standards, and enhance performance.

- As AI adoption increases, organizations must strategically integrate human oversight to manage risks, ensure compliance, and address ethical concerns.

- Organizations leveraging HITL workflows report significant gains in accuracy, customer satisfaction, and risk reduction across critical AI applications.

Why Human-in-the-Loop AI Matters In 2026

AI adoption has surged across various industries, automating tasks ranging from document processing to customer support. However, as organizations scale their use of AI, a common challenge arises: How can we ensure that these systems remain accurate, compliant, and trustworthy, particularly when decisions have real-world consequences?

That’s where Human-in-the-Loop (HITL) AI comes in. HITL is not just a technical model; it's a strategic approach that combines machine efficiency with human judgment to enhance outcomes, mitigate risk, and meet the growing demands for transparency and accountability.

According to Netsol Tech, 65% of organizations now routinely deploy generative AI, nearly double the share from the previous year. At that scale, HITL becomes essential for managing growing complexity, compliance needs, and trust issues.

In this guide, you’ll learn:

- What HITL AI means (and what it doesn’t)

- How it works in practice across document processing, healthcare, customer support, and more

- Why HITL is critical for accuracy, compliance, and trust in high-stakes workflows

- How to prepare your organization for HITL AI in 2026 and beyond

Whether you're overseeing automation in finance or evaluating AI systems for regulatory compliance, this guide will help you understand how HITL strategies enable smarter, safer AI.

What Is Human-in-the-Loop (HITL) AI?

Human-in-the-Loop (HITL) AI refers to any artificial intelligence system that includes human intervention at key stages of its development or operation. Unlike fully autonomous systems, HITL AI establishes a feedback loop that enables humans to guide, review, and refine AI outputs, ensuring greater accuracy, reliability, and ethical oversight.

According to VentureBeat, 96% of AI/ML practitioners believe that human labeling is important, with 86% considering it essential, emphasizing that expert oversight isn’t a luxury, but a necessity.

In simple terms, HITL refers to the collaboration between humans and AI. AI handles repetitive or large-scale tasks, while humans step in when judgment, context, or domain expertise is needed.

Formal definition:

HITL AI is a machine learning approach that integrates human feedback at critical points such as training, validation, or decision-making to refine model performance and reduce errors.

This approach is especially crucial in sensitive workflows, such as document processing, healthcare diagnostics, financial risk analysis, and legal compliance, where mistakes can incur significant costs.

Related terms:

- Human-on-the-loop: Humans monitor AI systems and intervene only when necessary

- Human-out-of-the-loop: The AI system operates independently with no human involvement once deployed

By combining the strengths of both humans and machines, HITL offers a more flexible and trustworthy approach to automation, making it a must-have strategy as AI becomes increasingly integrated into business operations.

How Does Human-in-the-Loop AI Work?

Human-in-the-loop (HITL) AI operates through a collaborative feedback loop, where humans participate at various stages of the AI system’s lifecycle. This hybrid process enhances the system’s performance over time, ensuring that outputs meet high standards for accuracy, fairness, and reliability.

Most HITL workflows follow these three key stages:

1. Data annotation

Humans label or annotate the raw data that will be used to train the AI model. For example, in document processing, a human might highlight specific fields in an invoice, such as invoice number, total amount, and due date. This creates structured, high-quality training data.

2. Model training

The AI model is trained on the annotated data. During this phase, data scientists and machine learning engineers monitor the model’s performance and tweak parameters as needed. Human guidance ensures the model learns patterns correctly and avoids unwanted biases.

3. Testing and feedback

After deployment, the AI continues to process new data, but any low-confidence predictions or ambiguous cases are flagged for human review. Humans correct or validate the AI's output, and those corrections are used to retrain and refine the model. This creates a continuous learning cycle.
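The testing-and-feedback stage can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular product: the `Prediction` and `FeedbackLoop` names and the 0.85 review threshold are hypothetical, and a real system would persist the queue and feed the stored corrections into an actual retraining job.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's own score in [0, 1]


@dataclass
class FeedbackLoop:
    review_threshold: float = 0.85  # assumed cut-off; tune per use case
    review_queue: list = field(default_factory=list)
    retraining_examples: list = field(default_factory=list)

    def handle(self, prediction: Prediction) -> str:
        """Accept confident predictions; queue the rest for a human."""
        if prediction.confidence >= self.review_threshold:
            return "accepted"
        self.review_queue.append(prediction)
        return "needs_review"

    def record_review(self, prediction: Prediction, human_label: str) -> None:
        """Store the human-verified label so it can be used for retraining."""
        self.review_queue.remove(prediction)
        self.retraining_examples.append((prediction.item_id, human_label))


loop = FeedbackLoop()
sure = Prediction("doc-1", "invoice", 0.97)
unsure = Prediction("doc-2", "invoice", 0.55)

print(loop.handle(sure))    # accepted
print(loop.handle(unsure))  # needs_review

# A reviewer corrects the flagged item; the correction becomes training data.
loop.record_review(unsure, "purchase_order")
print(loop.retraining_examples)  # [('doc-2', 'purchase_order')]
```

The point of the design is that every human correction is captured as a labeled example instead of being discarded once the item is fixed.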

Real-world example: document processing

In intelligent document processing (IDP), the HITL workflow looks like this:

- AI extracts data fields from a scanned shipping document

- Fields with high confidence are auto-approved

- Fields with low confidence (e.g., unclear handwriting or complex layouts) are reviewed and corrected by a human

- These corrections feed back into the system to improve future performance

This ongoing interaction ensures that even as AI handles more tasks, human oversight remains a built-in quality control mechanism.
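As a rough illustration of the field-level routing described above, the sketch below splits extracted fields by confidence and merges human corrections back into the final record. The function names, the sample shipping fields, and the 0.90 auto-approve threshold are assumptions made for the example, not values from any specific IDP tool.

```python
AUTO_APPROVE_THRESHOLD = 0.90  # assumed value; choose based on pilot data


def route_fields(extracted: dict[str, tuple[str, float]]) -> tuple[dict, dict]:
    """Split extracted fields into auto-approved and needs-review groups.

    `extracted` maps field name -> (value, confidence in [0, 1]).
    """
    approved, needs_review = {}, {}
    for name, (value, confidence) in extracted.items():
        target = approved if confidence >= AUTO_APPROVE_THRESHOLD else needs_review
        target[name] = value
    return approved, needs_review


def apply_corrections(approved: dict, needs_review: dict, corrections: dict) -> dict:
    """Merge reviewed fields into the final record; human corrections win."""
    final = dict(approved)
    for name, value in needs_review.items():
        final[name] = corrections.get(name, value)
    return final


shipping_doc = {
    "tracking_number": ("1Z999AA10123456784", 0.99),
    "consignee": ("Acme Gmbh", 0.62),  # e.g. unclear handwriting
    "weight_kg": ("12.5", 0.95),
}

approved, needs_review = route_fields(shipping_doc)
final = apply_corrections(approved, needs_review, {"consignee": "Acme GmbH"})

print(needs_review)  # {'consignee': 'Acme Gmbh'}
print(final["consignee"])  # Acme GmbH
```

Only one of the three fields reaches a person here, which is the pattern the workflow relies on: reviewers see the uncertain fields, not the whole document.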

Tely.ai reported that organizations leveraging HITL workflows often achieve accuracy rates up to 99.9% in document extraction, blending AI speed with human precision.

Benefits of Human-in-the-Loop AI

As organizations accelerate their adoption of AI, many realize that automation alone is insufficient. Human-in-the-Loop (HITL) AI combines the speed and scale of artificial intelligence with human judgment to ensure quality, compliance, and trust. This approach is particularly crucial in domains such as document processing, customer service, legal technology, and healthcare, where errors can have severe consequences. Instead of relying on black-box AI systems, businesses opt for hybrid AI workflows that enable humans to guide, correct, and approve AI outputs.

For instance, the History Tools report shows that 72% of customers prefer talking to a live agent over a chatbot for complex issues, while organizations using HITL in customer service often see 20–40% reductions in average handling time, a powerful one-two punch of satisfaction and efficiency.

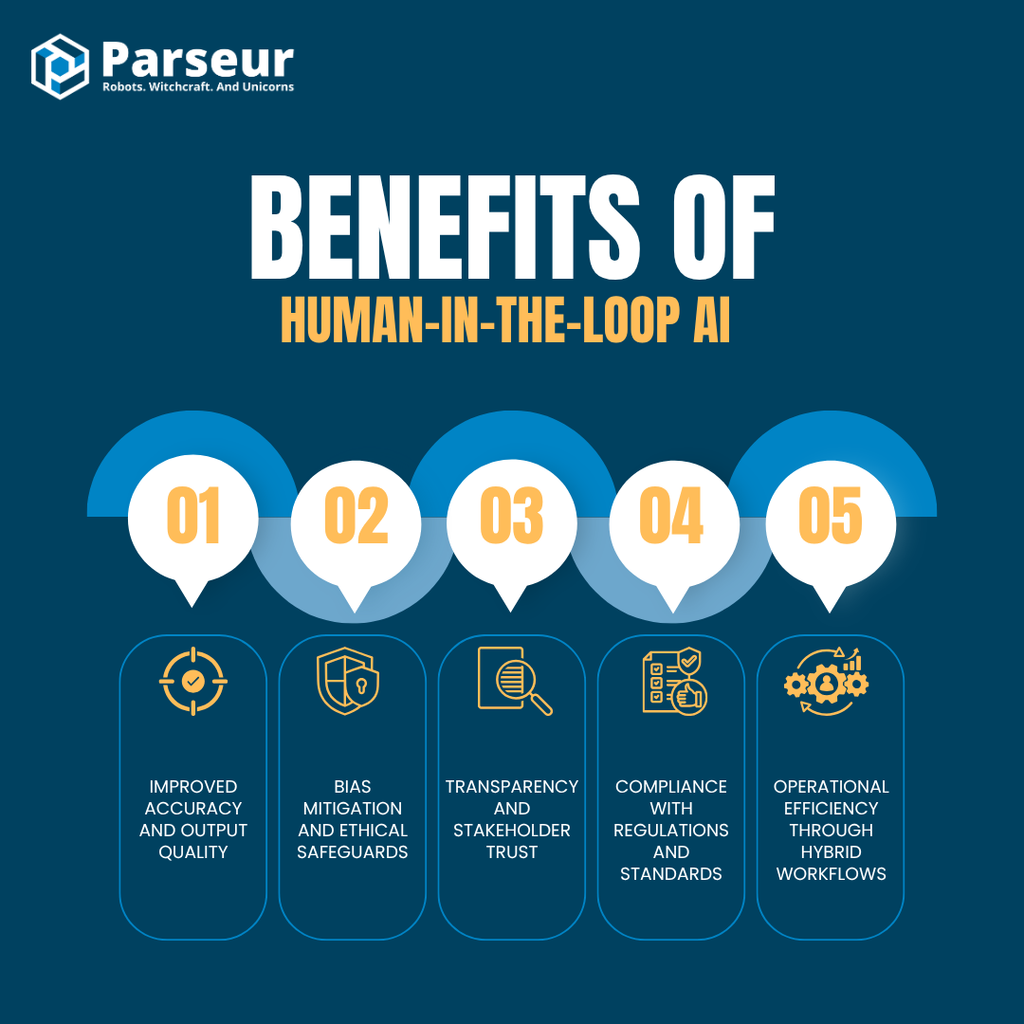

Here are the core benefits of implementing HITL AI:

Improved accuracy and output quality

AI can handle vast amounts of data quickly, but it often struggles with ambiguous inputs or predictions of low confidence. With HITL, human reviewers validate and correct these outputs, resulting in more accurate overall results. For example, in document processing, humans verify the extracted data from invoices or contracts, ensuring that important values, such as totals, names, and dates, are error-free before submission to downstream systems.

Bias mitigation and ethical safeguards

Algorithms can inherit or even amplify biases present in training data. HITL offers an opportunity for human reviewers to identify and correct biased decisions, particularly in applications such as hiring, lending, or insurance underwriting. Human input helps ensure AI systems uphold fairness, equity, and compliance with ethical standards.

Transparency and stakeholder trust

HITL makes AI more explainable. When people are involved in validating or approving AI outputs, the decision-making process becomes more transparent and accountable. This human touchpoint enhances confidence among users, regulators, and business stakeholders, alleviating concerns about “black-box” AI.

Compliance with regulations and standards

New regulations, such as the EU AI Act, require human oversight for high-risk AI applications. HITL workflows support compliance by ensuring that a qualified human reviews outputs before action is taken, which is essential for industries such as legal, healthcare, and finance, where mistakes could result in legal liability or safety risks.

Operational efficiency through hybrid workflows

A well-designed HITL system doesn’t slow down operations; it makes them smarter. AI handles high-volume, routine cases quickly, while humans focus only on low-confidence or exception cases. This hybrid approach combines speed with quality control, reducing total workload without sacrificing accuracy. For instance, in an invoice parsing workflow, high-confidence extractions can be sent directly to the ERP, while edge cases are flagged for human review.

According to Gartner, 30% of new legal tech automation solutions will include human-in-the-loop functionality by 2025. This indicates that businesses are increasingly recognizing the need for responsible AI with built-in human oversight.

In summary, HITL AI is not a limitation of AI technology. Instead, it is a powerful method for enhancing its reliability, usability, and impact. By combining human intelligence with machine speed, businesses can confidently scale automation while safeguarding against risks.

How Human-in-the-Loop AI (HITL) Works in Real-World Applications

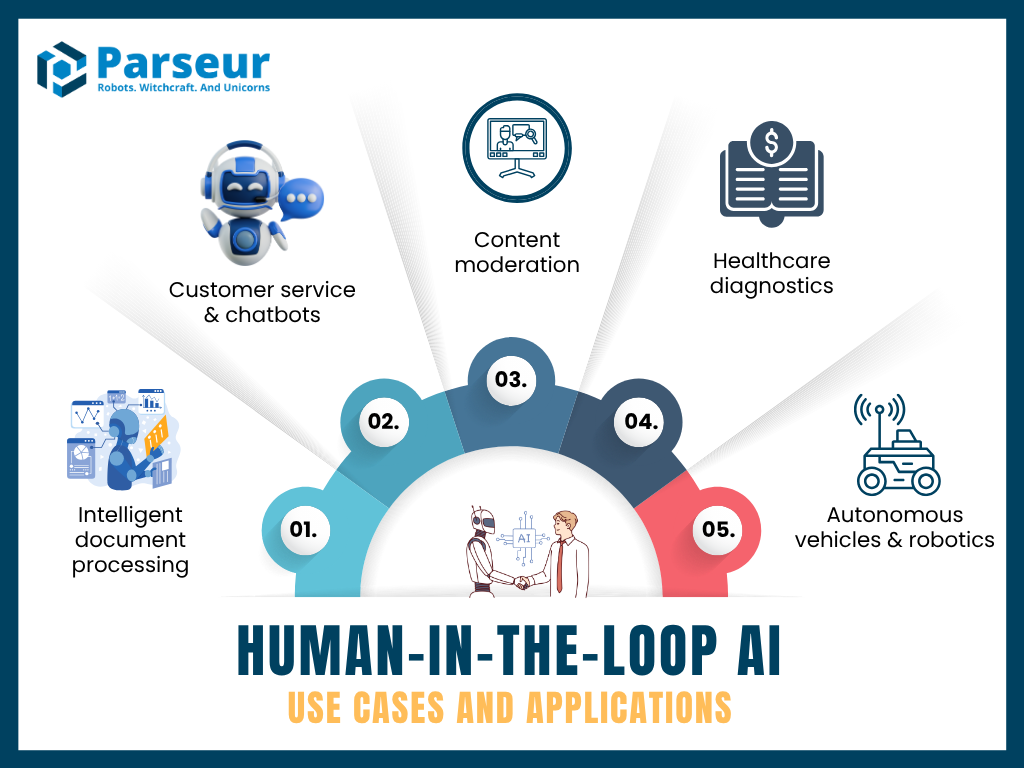

Human-in-the-Loop AI is not just a concept; it’s already transforming a wide range of industries. Below are key domains where HITL delivers real-world impact, combining the precision of automation with the critical thinking and context only humans can provide.

Intelligent document processing (IDP)

In document-heavy workflows, like parsing invoices, insurance claims, or onboarding forms, AI handles bulk extraction while humans verify low-confidence outputs. This hybrid model achieves nearly 100% accuracy for critical financial and legal data, ensuring compliance and minimizing costly errors. It’s where Parseur’s strengths in document validation shine.

Organizations that combine AI with human-in-the-loop verification in document processing can achieve accuracy rates of up to 99.9% in data extraction, ensuring near-perfect reliability for critical financial or legal documents, as mentioned by Tely.ai.

Customer service & chatbots

AI chatbots efficiently manage high-volume inquiries, but complex or nuanced conversations still require human expertise. HITL enables seamless escalation: AI handles standard queries, while humans take over the exceptions.

Human handoff improves resolution rates and reduces agent fatigue. According to Sekago's research, it can also increase customer satisfaction rates by up to 35% while reducing churn by around 20%.

Content moderation

AI can rapidly flag objectionable content, including hate speech, nudity, and misinformation; however, ambiguous cases require contextual judgment. Humans review these edge cases to make final decisions. This ensures platforms strike a better balance between the speed of automation and the nuance of human oversight.

SEO Sandwich stated that AI moderation systems correctly flag approximately 88% of harmful content, but humans still need to review 5–10% of AI-flagged cases, particularly ambiguous or edge-case content.

Healthcare diagnostics

AI systems analyze scans or lab results at scale. However, clinicians review questionable findings, such as low-confidence anomalies, before making treatment decisions. HITL here supports safety and compliance in patient care, ensuring that no critical decision is made solely by AI.

A study from Nexus Frontier found that combining pathologists' analysis with AI-automated diagnostic methods improved diagnostic accuracy to 99.5%. In comparison, the AI method alone achieved approximately 92% accuracy, while human pathologists alone achieved approximately 96%.

Autonomous vehicles & robotics

In self-driving and robotics, humans often monitor AI systems and intervene during unexpected road conditions or operational failures, a model known as human-on-the-loop. This oversight is critical for the real-world deployment and testing of autonomous systems.

In 2024, the number of self-driving car accidents nearly doubled to 544 reported crashes, compared to 288 in 2023, illustrating the ongoing challenges in autonomous vehicle safety and the critical need for human oversight during real-world deployment, according to Finance Buzz’s report.

Additional industries

- Cybersecurity: AI flags suspicious activities; human analysts investigate incidents.

- Finance: Algorithmic trading systems alert humans when market irregularities are detected.

- Legal Tech: Lawsuit or contract reviews use AI to pre-screen documents; attorneys confirm final decisions.

- Sales: AI handles initial qualification tasks, filters leads by basic requirements, and allows human teams to engage deeply only with high-value prospects.

Magee Clegg, CEO of Cleartail Marketing, shares a real-world example demonstrating the benefits of HITL in sales lead qualification:

One of our manufacturing clients was drowning in website inquiries, getting 200+ contact form submissions monthly, but their sales team could only follow up on about 60% within 24 hours. We implemented a HITL chatbot system where the AI handles initial qualification questions, but humans jump in when prospects mention specific technical requirements or custom solutions.

Results after 6 months: they're now capturing and properly qualifying 85% of inquiries, and their sales team went from 40+ unqualified calls per month to just 12 highly-qualified prospects. The human reps love it because they're not wasting time on tire-kickers anymore.

The key insight here is that we didn't try to make AI do everything. Instead, we used it to filter and prep leads so humans could focus on what they do best—building relationships and solving complex problems. Their close rate on qualified leads jumped from 23% to 34% because sales reps now have better intel before every conversation.

Anupa Rongala, CEO of Invensis Technologies, provides a compelling example illustrating the tangible ROI of HITL in invoice data extraction:

A real-world example of successful HITL implementation came from a project involving invoice data extraction for a large logistics client. While AI models could process and classify structured data with high accuracy, edge cases—like handwritten annotations or non-standard invoice formats—required human intervention. By integrating a HITL layer, where human reviewers validated low-confidence outputs flagged by the AI, we significantly improved accuracy rates—from 82% to 98%—while reducing processing time by over 40%.

The key benefit wasn't just better accuracy—it was trust. The client gained confidence in automation because there was always a human safety net. Over time, the AI model also learned from human corrections, gradually reducing the volume of required interventions. That feedback loop is where the real ROI emerges: better models, fewer errors, and more scalable automation. HITL isn't a bottleneck—it's a catalyst for sustainable AI adoption.

Gunnar Blakeway-Walen TRA, Marketing Manager at The Rosie Apartments by Flats, shares a successful implementation of HITL in marketing budget optimization:

HITL transformed our $2.9M annual marketing budget allocation. Automated systems track performance across Chicago, San Diego, Minneapolis, and Vancouver properties, while human analysis determines budget reallocation between digital marketing and ILS packages. Result: 4% budget savings while maintaining occupancy targets and 50% reduction in unit exposure time.

The multifamily industry thrives on HITL because resident behavior patterns need human context that AI misses. Our Digible campaigns use AI for geofencing and targeting, but humans adjust messaging based on neighborhood nuances - you can't automate understanding why Pilsen residents respond differently than South Loop prospects.

Amy Bos, Co-Founder & COO at Mediumchat Group, shares a practical HITL application enhancing customer interactions and emotional intelligence:

On our platform, we handle thousands of written and voice readings daily in multiple languages and tones. A few years ago, we developed an AI tool to assist in tagging and routing incoming requests to the most suitable advisors. Whilst accelerating the process, it lacked accuracy as it didn't understand the nuances and emotions.

So, we added a Human-in-the-Loop step. A small team of senior advisors reviews edge cases where the AI isn't confident, like emotionally complex readings or dual-topic queries. Initially, they had to review nearly 30% of the cases. However, with ongoing corrections and feedback, that dropped to under 10% within four months, and customer satisfaction scores rose by 18%.

What initially appeared to be a bottleneck turned out to be a smart feedback loop. The AI improved, advisors felt more in control of the tech, and clients noticed the difference.

Lori Appleman, Co-Founder at Redline Minds, shares insights on how effective HITL implementations significantly boosted conversion rates and optimized resource allocation:

My most successful HITL implementation involved customer behavior analysis tools like Lucky Orange and Hotjar. AI tracked where visitors clicked and scrolled, but our team found that customers were confused by shipping costs appearing too late in checkout. We moved shipping calculators earlier in the funnel and saw 18% conversion improvement within two weeks.

The biggest pitfall I see businesses make is treating HITL like a replacement decision instead of an improvement strategy. Companies either go full-AI or full-human when the sweet spot is AI handling data collection while humans make strategic decisions. AI flagged our client's inventory issues, but human judgment determined which products to discontinue versus which ones needed better positioning.

From an ROI perspective, HITL works best when you assign clear dollar values to human time versus AI processing costs. One client saved $2,400 monthly by letting AI handle routine customer service inquiries while humans focused on high-value sales conversations that averaged $340 per interaction.

Gregg Kell, President at Kell Solutions, highlights compelling outcomes from HITL integrations in professional services:

Professional services will see the biggest HITL impact over the next five years. In our law firm implementations, AI handles initial client intake and document review, but attorneys make the judgment calls on case viability and settlement strategies. This hybrid approach lets firms handle 40% more consultations without sacrificing the relationship-building that wins cases.

ROI-wise, our most successful HITL deployments show 2.3x efficiency gains within six months. The key metric isn't just cost savings—it's revenue protection. Human oversight prevents the costly mistakes that pure automation makes, like our plumbing client who avoided a $15K reputation hit when humans caught the AI scheduling non-emergency calls during actual emergencies.

Martin Weidemann, Owner of Mexico-City-Private-Driver.com, shares how integrating HITL significantly enhanced reliability and trustworthiness in transportation logistics:

When I first launched Mexico-City-Private-Driver.com, I built a system that automatically matched incoming bookings to our roster of drivers based on availability, location, and type of vehicle. It worked — until it didn't.

One Monday morning, a high-stakes transfer for an embassy official was incorrectly assigned to a driver unfamiliar with security protocol. That day, I realized that 100% automation in a human-facing business was a risk I couldn't afford. So I implemented a HITL checkpoint: every assignment flagged as "sensitive" or "VIP" is now reviewed manually before confirmation.

This small loop — human validation within the AI workflow — reduced critical assignment errors from around 1 in every 35 bookings to less than 1 in 500. It also allowed us to build trust with high-profile clients, like diplomatic delegations and international CEOs staying at hotels like the St. Regis or Ritz-Carlton.

In hindsight, HITL wasn't just about fixing mistakes. It gave me a way to train the system better, since each human correction became a learning point for our AI logic. Today, over 80% of our bookings are still handled automatically — but the 20% we manually verify? That's where reputational damage is avoided and client relationships are preserved.

Andrew Leger, Founder & CEO at Service Builder, shares practical examples of HITL transforming job scheduling and quoting processes:

We implemented HITL for job scheduling where AI handles the initial optimization - matching technician skills, travel time, and availability. But humans make the final call on complex scenarios like emergency calls or customer relationship nuances. A recent HVAC client was struggling with 30% schedule conflicts using pure automation, but with our HITL approach, their dispatcher now reviews AI suggestions and catches things like "Mrs. Johnson always needs morning appointments" that AI missed.

The human oversight piece is crucial for quoting too. Our AI generates initial quotes based on job type, location, and historical data, but service managers review and adjust for unique factors like difficult access or repeat customer discounts. One pest control company saw quote accuracy improve 40% and customer satisfaction jump because techs weren't showing up with completely wrong expectations.

What surprised me most was how much faster decisions became. Instead of dispatchers starting from scratch, they're now power-users making quick judgment calls on AI recommendations. The AI does the heavy computational work while humans focus on relationship management and edge cases that require real business context.

Why HITL matters across industries

Research indicates that HITL is crucial for developing accountable and transparent AI systems in high-stakes sectors, including finance and healthcare. In these domains, even minor errors can lead to significant financial losses, legal exposure, or patient harm. For example, in healthcare, HITL AI is used to validate AI-assisted diagnostics, thereby reducing the risk of misdiagnosis. In finance, it ensures compliance by having humans verify flagged transactions or anomalies.

Jorie notes that nearly 86% of healthcare mistakes are administrative errors, often caused by manual processes or outdated systems. HITL AI systems help reduce these errors by combining automated data processing with human oversight to ensure accuracy and compliance.

Together, these cases demonstrate that hybrid AI workflows, which combine automation with human oversight, are not a fallback; they’re the modern standard for reliability, trust, and scalability in 2026.

Challenges And Best Practices

HITL AI brings powerful benefits, but to implement it successfully, organizations must also address key challenges that can hinder its effectiveness. Below is a balanced view of the most common issues and how to overcome them with best practices.

As stated by Big Data Wire, 55% of organizations cited a lack of skilled personnel as a major barrier to scaling generative AI. In comparison, 48% flagged high implementation costs as a key obstacle.

Scalability and cost

Involving humans in AI workflows can increase operational overhead and slow down processing, especially if every task requires human review.

Best Practice: Use humans strategically, focusing only on edge cases, low-confidence predictions, or periodic audits. Leverage techniques like active learning to prioritize human involvement where it adds the most value.
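One common active-learning strategy is uncertainty sampling: when the review budget is limited, send humans the items the model is least sure about. The sketch below uses margin sampling (the gap between the top two class probabilities) as the uncertainty measure. The function name, the sample ticket ids, and the probabilities are illustrative assumptions.

```python
def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the `budget` item ids whose top-two class probabilities are closest.

    A small margin means the model is torn between two answers, so a human
    label there is likely to be more informative than on a confident item.
    Each probability list must contain at least two classes.
    """
    def margin(probs: list[float]) -> float:
        top, runner_up = sorted(probs, reverse=True)[:2]
        return top - runner_up

    ranked = sorted(predictions, key=lambda item_id: margin(predictions[item_id]))
    return ranked[:budget]


class_probabilities = {
    "ticket-1": [0.98, 0.01, 0.01],  # very confident
    "ticket-2": [0.40, 0.35, 0.25],  # torn between two classes
    "ticket-3": [0.55, 0.44, 0.01],  # close call
    "ticket-4": [0.70, 0.20, 0.10],  # fairly confident
}

print(select_for_review(class_probabilities, budget=2))  # ['ticket-2', 'ticket-3']
```

With a budget of two reviews, the confident tickets are skipped and reviewer time goes to the two closest calls.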

Human error and bias

The human element can also introduce mistakes or subjective bias. If reviewers are undertrained or overworked, they might approve flawed AI outputs without realizing it.

Best Practice: Clearly define roles, provide consistent training, and consider using multiple reviewers for critical tasks to ensure accuracy and consistency. Continuously assess both AI and human accuracy to improve outcomes over time.
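For critical tasks reviewed by more than one person, a simple way to combine their answers is a majority vote with an agreement floor, escalating anything below it. The sketch below is one possible approach; the `resolve_reviews` name and the two-thirds agreement threshold are assumptions chosen for illustration.

```python
from collections import Counter
from typing import Optional


def resolve_reviews(
    labels: list[str], min_agreement: float = 2 / 3
) -> tuple[Optional[str], float]:
    """Return (consensus_label, agreement).

    The label is None when too few reviewers agree, which signals that the
    item should be escalated (for example, to a senior reviewer).
    """
    if not labels:
        return None, 0.0
    top_label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    if agreement >= min_agreement:
        return top_label, agreement
    return None, agreement


print(resolve_reviews(["approve", "approve", "reject"]))  # consensus: approve
print(resolve_reviews(["approve", "reject"]))             # no consensus, escalate
```

Tracking the agreement score over time also gives a rough measure of reviewer consistency, which supports the continuous assessment of human accuracy mentioned above.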

Defining the right loop

Not every AI decision needs human intervention. Poorly scoped HITL implementation can create confusion or inefficiencies in workflows.

Best Practice: Identify high-risk or high-impact decision points where AI errors would have serious consequences. These are the areas where human involvement is most effective. Automate low-risk or repetitive tasks to maximize efficiency and productivity.

Integration and workflow design

Blending human oversight into automated systems is not always seamless. Disconnected processes can reduce effectiveness or cause delays.

Best Practice: Use AI platforms (like Parseur) that support human validation steps within the workflow. Build processes with user-friendly interfaces so humans can quickly verify outputs and submit feedback that helps the AI learn.

Rob Gundermann, Owner at Premier Marketing Group, highlights critical mistakes companies frequently make with HITL and AI automation:

The biggest mistake I see is businesses trying to automate everything at once. Last year, I had an HVAC client who wanted AI to handle their entire lead qualification process without any human review. They ended up with automated responses going to commercial prospects who needed $50K systems, treating them like residential $3K repair calls. We had to rebuild their entire funnel because they skipped the human checkpoint for high-value leads.

Another major pitfall is not training your team on what to actually review. I worked with a dental practice that implemented AI for appointment scheduling, but nobody knew which edge cases needed human intervention. When the AI started booking root canals during lunch breaks and double-booking hygienists, it took weeks to fix the mess because staff didn't know what warning signs to watch for.

The technical side trips people up too - I've seen companies blow their budgets on AI tools that don't integrate with their existing CRM systems. One auto repair shop client spent $800/month on an AI chatbot that couldn't pass leads to their existing customer management system, so they were manually copying information anyway. The AI was supposed to save time but created more work instead.

Privacy and compliance

Human reviewers may be exposed to sensitive data, which creates risks related to privacy, confidentiality, and compliance.

Best Practice: Enforce strict access controls, NDAs, and secure environments, especially when using external annotators or contractors. Ensure workflows comply with regulations like GDPR or HIPAA, where applicable.

To maximize the benefits of HITL systems, organizations should clearly define where human input is essential, select qualified reviewers, and provide them with the necessary tools and training to ensure accurate and efficient reviews. Regularly measure performance, such as accuracy gains or reduced error rates, and refine the process over time to optimize its effectiveness.

Standards such as the **NIST AI Risk Management Framework** recommend human oversight for high-risk AI use cases. Aligning your HITL strategy with these guidelines helps ensure responsible and scalable AI adoption, especially as regulations and expectations evolve in 2026 and beyond.

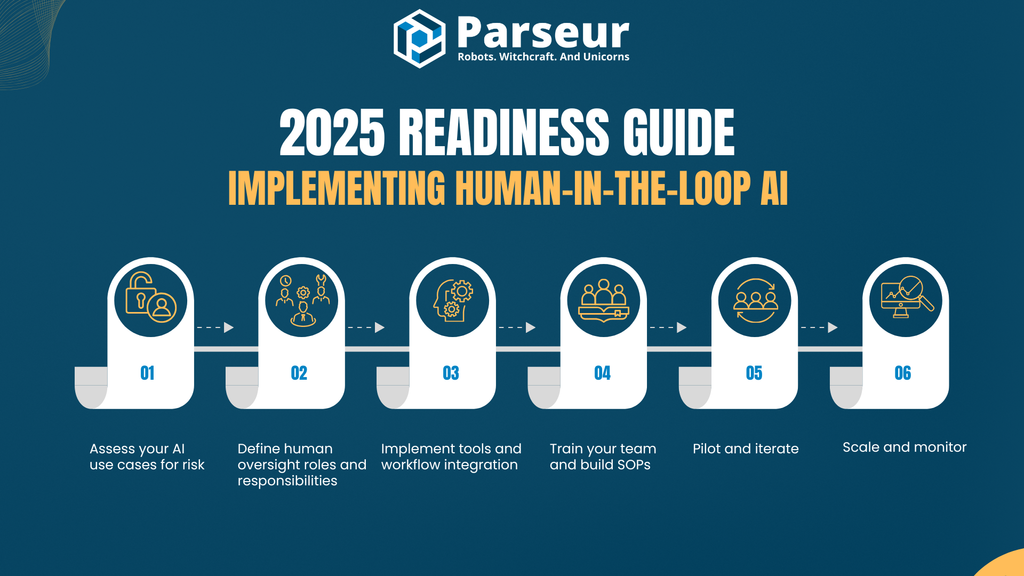

2026 Readiness Guide: Implementing Human-in-the-Loop AI

As AI adoption accelerates across industries, preparing your organization for Human-in-the-Loop (HITL) AI is no longer optional. In 2026, compliance, trust, and accuracy are top priorities. HITL ensures your systems meet all three. Below is a step-by-step readiness guide to help you plan and implement HITL AI successfully in your workflows.

Step 1: Assess your AI use cases for risk

Identify where AI is currently used in your business and evaluate which processes involve high-stakes decisions, such as those related to legal, financial, or customer-facing operations. These areas are most in need of human oversight to ensure safe and ethical outcomes.

Step 2: Define human oversight roles and responsibilities

Decide who will act as the human in the loop. It could be a data analyst, compliance officer, or end user. Clearly define their authority. Can they override AI decisions, or are they only validating low-confidence outputs? Clarifying responsibilities prevents bottlenecks or confusion later.

Step 3: Implement tools and workflow integration

Use platforms that support built-in review steps or human validation features. For example, Parseur allows human reviewers to approve or correct data before it is finalized. Ensure your workflow includes notifications or triggers to prompt human input at the right time.

Step 4: Train your team and build SOPs

Train your human reviewers on how to interpret AI results and when to intervene. Develop standard operating procedures (SOPs) for consistent review and feedback, including what to check, how to correct, and how to flag edge cases.

Step 5: Pilot and iterate

Start with a focused pilot project to test your HITL workflow. Measure key outcomes such as accuracy improvements, turnaround time, and human effort. Adjust thresholds, confidence levels, and review logic based on your findings.
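One way to adjust thresholds from pilot data is to replay the pilot at several confidence cut-offs and compare how much human effort each one costs against the accuracy it buys. The sketch below does this on made-up pilot records. It assumes, as a simplification, that a human reviewer always fixes any item sent to review; the function name and sample data are hypothetical.

```python
def evaluate_threshold(samples: list[tuple[float, bool]], threshold: float) -> dict[str, float]:
    """Estimate review rate and final accuracy for one confidence threshold.

    `samples` holds (confidence, ai_was_correct) pairs from the pilot.
    Assumption: items below the threshold go to a human who always fixes them.
    """
    reviewed = [s for s in samples if s[0] < threshold]
    auto = [s for s in samples if s[0] >= threshold]
    auto_correct = sum(1 for _, correct in auto if correct)
    return {
        "review_rate": len(reviewed) / len(samples),
        "accuracy": (auto_correct + len(reviewed)) / len(samples),
    }


# Made-up pilot results: (model confidence, was the AI output correct?)
pilot = [
    (0.99, True), (0.95, True), (0.91, False), (0.80, True),
    (0.72, False), (0.60, False), (0.97, True), (0.88, True),
]

for threshold in (0.70, 0.90, 0.95):
    print(threshold, evaluate_threshold(pilot, threshold))
```

On this toy data, raising the threshold from 0.70 to 0.90 lifts estimated accuracy from 0.75 to 0.875 while the review rate rises from 0.125 to 0.5, which makes the trade-off between human effort and accuracy explicit.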

Step 6: Scale and monitor

After a successful pilot, scale the HITL approach to other departments or use cases. Continue to monitor system performance, human feedback loops, and regulatory compliance. Update your process regularly as AI models evolve or new risks emerge.

Why HITL matters in 2026

With rising scrutiny around AI governance and regulatory mandates, such as the EU AI Act, which requires human oversight in high-risk AI applications, organizations must treat HITL as a core component of their responsible AI strategy.

It is not just about compliance. It is about building more resilient, accurate, and trusted AI systems that can scale with your business.

Over the past two years, large language models have transformed AI-powered workflows, turning what felt impossible just months ago into everyday reality. At Parseur, our customers are already automating all their data-extraction tasks with AI, unlocking unprecedented speed and scale. But AI, like any tool, isn’t flawless: edge cases still demand the judgment and oversight only a human can provide. That’s where Human-in-the-Loop (HITL) shines: it blends AI efficiency with human accuracy by automating the routine 95 percent and routing the critical 5 percent to expert review. The result is the best of both worlds: true end-to-end automation with iron-clad reliability.

Conclusion

Human-in-the-Loop AI represents a powerful middle ground between fully automated systems and manual processes. By integrating human judgment into critical stages of the AI lifecycle, businesses can enhance accuracy, ensure compliance, and build trust in their automated workflows. In 2026 and beyond, HITL is no longer optional for organizations operating in high-stakes industries. It is essential for achieving responsible, reliable AI outcomes.

Whether you're managing complex document workflows, training AI models, or ensuring customer satisfaction, HITL enables smarter, safer, and more ethical automation. With the right strategy and tools like Parseur’s validation workflows, you can implement HITL processes that scale with confidence.

Ready to bring human-AI collaboration into your business operations? Discover how Parseur seamlessly integrates intelligent document processing with built-in human oversight, enabling you to achieve the best of both worlds.

Frequently Asked Questions

To wrap up, here are answers to some of the most common questions about Human-in-the-Loop AI. These insights will help clarify how HITL integrates into real-world AI workflows, particularly in areas such as automation, compliance, and document processing.

What is the difference between human in the loop and human on the loop?

Human-in-the-loop (HITL) involves active human participation at critical points in the AI process, whether during training, validation, or decision-making. In contrast, human-on-the-loop refers to a supervisory role in which a human monitors the AI system and intervenes only if something goes wrong or the system flags uncertainty. While both approaches maintain human oversight, HITL is more hands-on and suited for high-stakes or ambiguous use cases where accuracy is essential.

Does human-in-the-loop AI mean AI is not fully automated?

Yes, HITL AI is not fully autonomous. It is a hybrid approach that combines the speed and efficiency of AI with the reasoning and context-awareness of human input. The goal is not to slow down automation, but to ensure quality, safety, and trust, especially in areas where a mistake could lead to compliance issues, financial loss, or poor customer experiences. HITL still allows for automation at scale while minimizing risks.

When should I use human in the loop versus fully automated AI?

Human-in-the-loop is best used when decisions carry significant consequences or require contextual judgment, such as processing legal documents, handling financial data, or responding to nuanced customer queries. Fully automated AI is appropriate for predictable, low-risk, repetitive tasks where outcomes are clear and occasional errors are tolerable. A well-balanced strategy combines both: AI handles the routine, and humans step in when needed for complex or critical edge cases.

How is human in the loop used in document processing?

In document processing, AI tools are utilized to extract data from structured or semi-structured files, including invoices, contracts, and onboarding forms. However, when the AI encounters unclear layouts, low-confidence fields, or unusual formats, a human reviewer steps in to validate or correct the output. This not only improves the accuracy of the extracted data but also trains the AI model to perform better over time, creating a feedback loop that leads to near-perfect results in business-critical operations.
