What is data extraction?

Data extraction refers to retrieving information from data sources, many of which are unstructured or only loosely structured. With data extraction, data can be refined, stored, and further analyzed. It is used throughout healthcare, financial services, and the tech industry. Businesses can improve their efficiency by using data extraction to automate manual processes.

Are you looking to streamline how your business handles data? In this article, you'll discover everything you need to know about automated data extraction, from what it is and how it works to the transformative benefits it brings to organizations.

Key Takeaways

- Automated data extraction streamlines processes, transforming vast amounts of unstructured data into structured formats for practical use.

- Modern techniques leverage AI, OCR, and machine learning for high-speed, accurate data capture from various documents.

- Industries like finance, healthcare, and logistics rely heavily on automated data extraction to save costs and enhance productivity.

Businesses generate and manage vast quantities of data daily, and processing this information is critical for decision-making and operational efficiency. Automated data extraction transforms how organizations process data, providing a streamlined, efficient, and accurate alternative to manual methods.

What is automated data extraction?

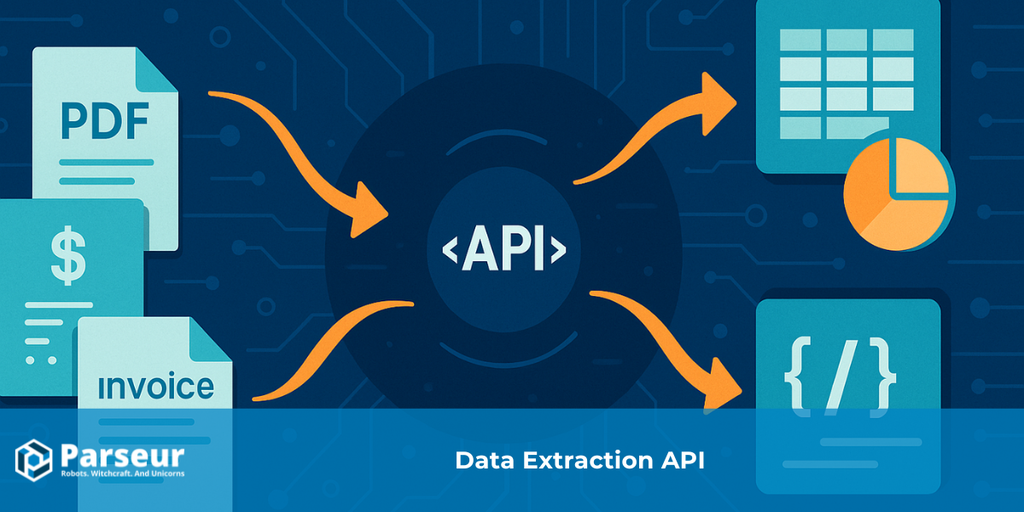

Automated data extraction uses advanced software and AI-powered technologies to automatically identify, capture, and convert data from various sources, such as PDFs, scanned documents, and emails, into structured formats. By eliminating the manual process, businesses save time, reduce errors, and increase their data processing speed, empowering them to make quicker and more informed decisions.

"In 2025, the global data sphere is projected to reach over 180 zettabytes, underscoring the need for efficient data extraction methods to process, analyze, and store this information." (Source: Statista)

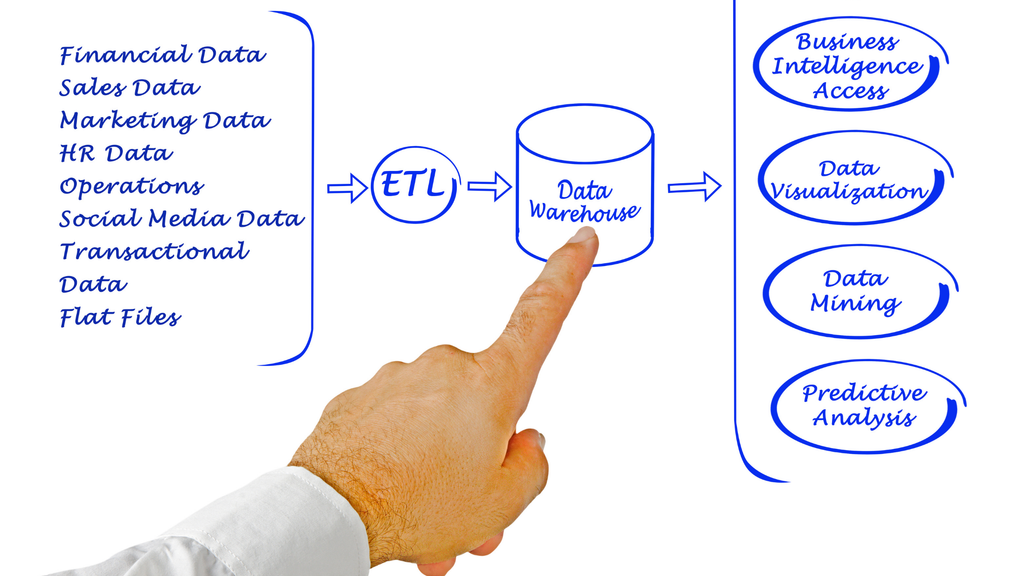

Data extraction and ETL

Data extraction is the first step in the ETL process. ETL stands for Extract, Transform, and Load, the three steps it involves: data is extracted from its sources, transformed into a consistent format, and loaded into a destination. The primary objective of ETL is to prepare data so that it can be loaded into a data warehouse, database, or directly into a business application. ETL is adaptable to any industry, including healthcare, SaaS, and retail.

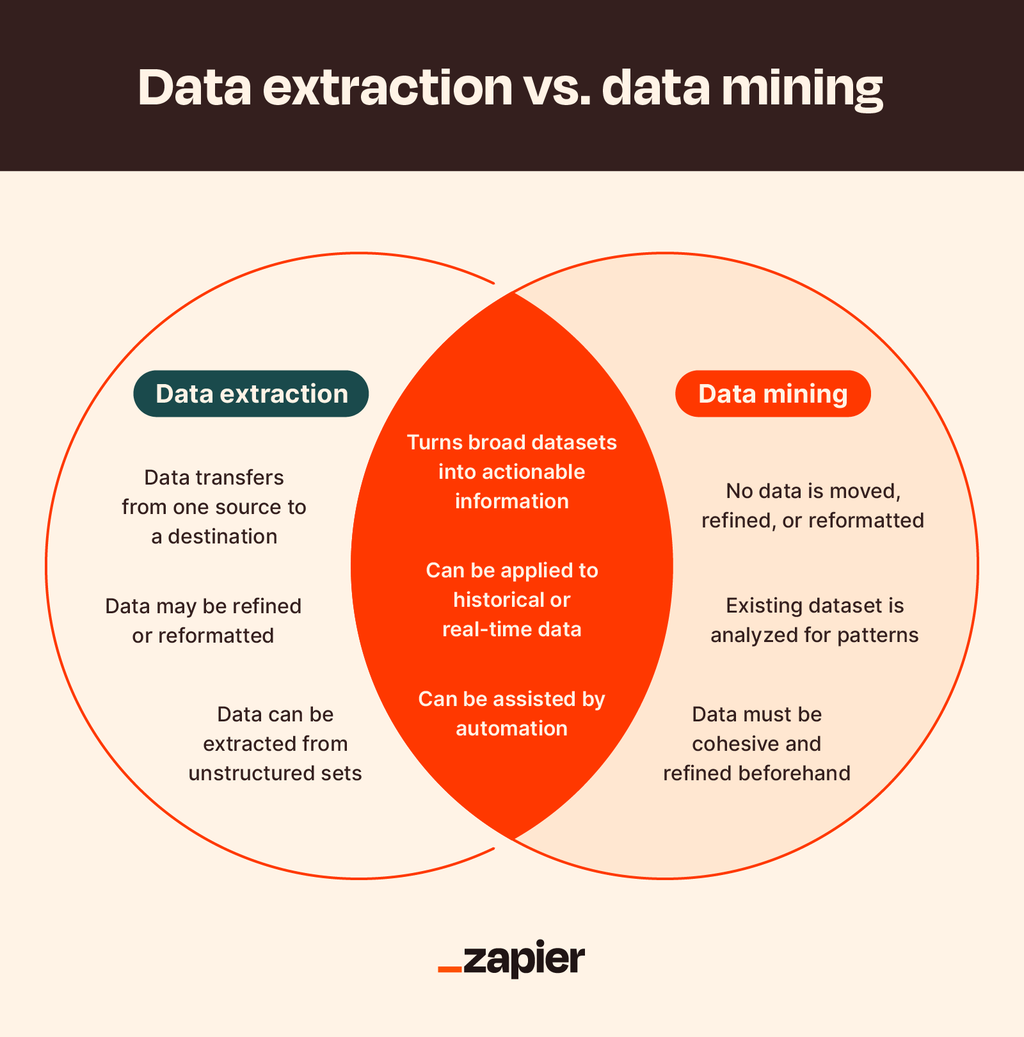
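
The three ETL steps can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production pipeline: the sample CSV, the `orders` table, and the function names are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer,amount
1001, alice ,19.99
1002,BOB,5.00
1003,carol,not-a-number
"""


def extract(raw_text):
    """Extract: read rows from the raw CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: normalize names, convert amounts, skip unparseable rows."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would log or quarantine this row
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into a database table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(raw_text, conn):
    """Run all three steps and return the number of rows now in the table."""
    load(transform(extract(raw_text)), conn)
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


if __name__ == "__main__":
    print(run_etl(RAW_CSV, sqlite3.connect(":memory:")))
```

Keeping each step in its own function makes it easy to swap the source (extract) or the destination (load) without touching the cleaning logic.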

Data extraction vs. data mining

Data extraction and data mining are both vital processes in analyzing a high volume of data, but they are not the same.

Data extraction involves obtaining and collecting data, whereas data mining is the process of analyzing that data to uncover insights and patterns. Data extraction is a necessary step for data mining, but data mining involves more complex analysis and modeling techniques to derive value from the data.

What are the different types of data?

Understanding the various data types involved is essential for optimizing the extraction methods and ensuring accuracy.

1. Structured data

Definition: Structured data is highly organized and formatted, making it easy to search, retrieve, and analyze. It is typically stored in relational databases, where each row represents a unique record and each column represents a specific attribute.

Characteristics:

- Fixed schema (e.g., tables in a database)

- Easily manipulable using SQL and other database query tools

- Predictable and consistent structure

Common sources of structured data include:

- Databases: Relational databases store data in tables with rows and columns, such as customer information and sales records.

- Spreadsheets: Data stored in Excel files or Google Sheets often follows a consistent format, making it easy to extract specific data points.

Example: Businesses rely on structured data to generate reports, track sales performance, and manage customer relationships efficiently.

2. Semi-structured data

Definition: Semi-structured data does not conform to a rigid schema but still contains tags or markers to separate different elements.

Characteristics:

- Flexible and adaptable structure

- Hierarchical organization

This data type is common in formats such as:

- JSON (JavaScript Object Notation): JSON files are used extensively in web applications. They are structured as key-value pairs, making them relatively easy to parse.

- XML (eXtensible Markup Language): Like JSON, XML allows the creation of custom tags to represent data, providing a flexible structure for data exchange.

- Log Files: Consistently formatted entries allow for meaningful information extraction despite their semi-structured nature.

Example: An XML document containing product information where each product is tagged with relevant attributes like name, price, and description.
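
As a rough sketch of the XML example above, the tags themselves can drive the extraction. The catalog document and its tag names (`product`, `name`, `price`, `description`) are made up for illustration; the parsing uses Python's standard `xml.etree.ElementTree` module.

```python
import xml.etree.ElementTree as ET

# Hypothetical product catalog; the second product has no description.
XML_DOC = """<catalog>
  <product>
    <name>Desk Lamp</name>
    <price>24.50</price>
    <description>LED lamp with adjustable arm</description>
  </product>
  <product>
    <name>Notebook</name>
    <price>3.20</price>
  </product>
</catalog>"""


def extract_products(xml_text):
    """Turn each <product> element into a flat dictionary."""
    root = ET.fromstring(xml_text)
    products = []
    for node in root.findall("product"):
        products.append({
            "name": node.findtext("name"),
            "price": float(node.findtext("price")),
            # Semi-structured data may omit fields, so fall back to a default.
            "description": node.findtext("description", default=""),
        })
    return products


if __name__ == "__main__":
    for product in extract_products(XML_DOC):
        print(product)
```

The missing `description` on the second product shows why semi-structured data is more flexible than a fixed schema, and why extraction code has to tolerate absent fields.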

3. Unstructured data

Definition: Unstructured data lacks a predefined format or structure, which makes analyzing it and extracting meaningful information challenging.

Characteristics:

- Diverse formats and content types

- Requires advanced technologies (e.g., NLP, machine learning) for meaningful extraction

Common examples include:

- Text documents: Word files, PDFs, and emails can contain vast amounts of unstructured data, often requiring natural language processing (NLP) techniques for extraction.

- Images and videos: Media files that require image recognition or video analysis tools to extract relevant information, such as metadata or embedded text.

Example: Organizations analyze unstructured data to glean insights from customer feedback, enhance brand sentiment analysis, and extract critical information from contracts.
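
To give a feel for the problem, here is a deliberately simple, rule-based sketch that pulls a few fields out of free-form email text with regular expressions. It is a stand-in for the NLP and machine learning techniques mentioned above, not an example of them: the email body, the field names, and the patterns are all assumptions, and real documents vary far more than fixed patterns can handle.

```python
import re

# Hypothetical email body used only for illustration.
EMAIL_BODY = """Hi team,

Please find invoice INV-2024-0042 attached. The total due is $1,250.00,
payable by 2024-07-15. Contact billing@example.com with questions.
"""

# One pattern per field; a capture group marks the part to keep.
PATTERNS = {
    "invoice_number": re.compile(r"\bINV-\d{4}-\d{4}\b"),
    "total": re.compile(r"\$([\d,]+\.\d{2})"),
    "due_date": re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"),
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
}


def extract_fields(text):
    """Return the first match for each known field, if any."""
    fields = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match:
            # Use the capture group when the pattern defines one.
            fields[name] = match.group(1) if pattern.groups else match.group(0)
    if "total" in fields:
        fields["total"] = float(fields["total"].replace(",", ""))
    return fields


if __name__ == "__main__":
    print(extract_fields(EMAIL_BODY))
```

Patterns like these break as soon as the wording or layout changes, which is the main reason unstructured sources tend to call for more adaptive techniques.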

Read more about structured data vs. unstructured data

4. Time-series data

Definition: Time-series data is a sequence of data points collected or recorded at specific intervals. This data type is crucial in finance and IoT (Internet of Things), where historical data trends inform decision-making processes. Automated data extraction tools can analyze time-series data for insights or anomalies.

Characteristics:

- Sequential and time-ordered

- Captures temporal dynamics and trends

- Often requires specialized analysis techniques, such as forecasting and anomaly detection

Examples:

- Stock market data: Prices recorded hourly can be analyzed to predict future trends.

- Weather data: Temperature, humidity, and precipitation levels recorded hourly or daily can be analyzed to identify climate trends and improve forecasting accuracy.
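
Anomaly detection on time-series data can be illustrated with a simple rolling-window check. The sketch below flags a reading when it is more than a few standard deviations away from the mean of the readings just before it. The hourly temperatures, the window size, and the threshold are all illustrative assumptions, and this is only one of many possible approaches.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Flag readings far from the mean of the preceding `window` values.

    `readings` is a time-ordered list of (timestamp, value) pairs.
    """
    anomalies = []
    for i in range(window, len(readings)):
        history = [value for _, value in readings[i - window:i]]
        mu, sigma = mean(history), stdev(history)
        timestamp, value = readings[i]
        # Skip flat windows (sigma == 0) to avoid flagging every change.
        if sigma > 0 and abs(value - mu) > threshold * sigma:
            anomalies.append((timestamp, value))
    return anomalies


# Hypothetical hourly temperatures in degrees Celsius with one faulty reading.
HOURLY_TEMPS = [
    ("2024-06-01T00:00", 18.1),
    ("2024-06-01T01:00", 17.9),
    ("2024-06-01T02:00", 17.8),
    ("2024-06-01T03:00", 17.6),
    ("2024-06-01T04:00", 17.7),
    ("2024-06-01T05:00", 17.5),
    ("2024-06-01T06:00", 25.4),
    ("2024-06-01T07:00", 17.6),
    ("2024-06-01T08:00", 17.8),
]

if __name__ == "__main__":
    print(find_anomalies(HOURLY_TEMPS))
```

Because the check only looks backward, it works on data as it arrives, but a single outlier also inflates the window's spread for the next few readings.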

5. Spatial data

Definition: Spatial data relates to the physical location and attributes of objects. This data type is essential in geographic information systems (GIS) and can include coordinates, maps, and satellite imagery. Automated extraction tools can help convert raw spatial data into actionable insights for various industries, such as urban planning and logistics.

Characteristics:

- Essential for mapping and navigation

- Visualized using Geographic Information Systems (GIS)

Example: Geographic coordinates extracted from GPS data for route optimization.
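
As a small illustration of working with extracted GPS coordinates, the sketch below computes great-circle distances with the haversine formula and sums them along a route. It assumes a spherical Earth with a mean radius of 6,371 km, and the function names and stop coordinates are hypothetical; real route optimization would also account for roads and traffic.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def route_length_km(points):
    """Total length of a route that visits the points in order."""
    return sum(haversine_km(p, q) for p, q in zip(points, points[1:]))


# Approximate city-center coordinates, used here as hypothetical delivery stops.
PARIS = (48.8566, 2.3522)
LONDON = (51.5074, -0.1278)

if __name__ == "__main__":
    print(round(route_length_km([PARIS, LONDON, PARIS]), 1))
```

Comparing `route_length_km` across different orderings of the same stops is the simplest form of route comparison.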

Extraction methods

Two primary methods for extracting data from various sources are manual and automated.

Challenges in manual data extraction

Manual data extraction, a time-consuming and error-prone process, presents several challenges, including:

- Human Error and Data Inaccuracy: Manual extraction often leads to errors, especially with large datasets or complex documents.

- Resource Allocation: Significant manpower is needed for data processing, making it costly and less efficient.

- Compliance Risks: Manual processing can increase the risk of non-compliance, as errors in data entry may result in regulatory issues.

Automated extraction methods: Logical vs. Physical

Data extraction can also be categorized into two main types: logical and physical.

1. Logical extraction

Description: Logical extraction focuses on the logical structure of data. This method involves retrieving data based on its meaning and organization within a database or a data model rather than how it is physically stored. It often employs queries or APIs to access data.

Advantages:

- Efficiency: Allows for targeted data retrieval, as only relevant information is extracted based on specific queries or criteria.

- Data Integrity: Maintains the relationships and constraints within the data, ensuring that the extracted data remains consistent and accurate.

- User-Friendly: Often utilizes high-level languages (like SQL) that make it easier for users to define what data they need without understanding the underlying storage mechanisms.
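
Logical extraction can be sketched with a SQL query against an in-memory SQLite database. The `customers` and `sales` tables and their contents are hypothetical; the point is that the query describes what data is needed (sales since a given date, per customer) without any reference to how the database stores it on disk.

```python
import sqlite3


def build_demo_db():
    """Create a small in-memory database with hypothetical data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE sales (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            amount REAL,
            sold_on TEXT
        );
        INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO sales VALUES
            (1, 1, 120.0, '2024-01-15'),
            (2, 1, 80.0, '2024-02-03'),
            (3, 2, 200.0, '2024-02-20');
    """)
    return conn


def sales_since(conn, start_date):
    """Extract total sales per customer on or after `start_date` (ISO format)."""
    query = """
        SELECT c.name, SUM(s.amount)
        FROM sales AS s
        JOIN customers AS c ON c.id = s.customer_id
        WHERE s.sold_on >= ?
        GROUP BY c.name
        ORDER BY c.name
    """
    return conn.execute(query, (start_date,)).fetchall()


if __name__ == "__main__":
    print(sales_since(build_demo_db(), "2024-02-01"))
```

The join preserves the relationship between sales and customers, and the `WHERE` clause keeps the extraction targeted to the rows that matter.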

2. Physical extraction

Description: Physical extraction refers to retrieving data from the actual physical storage format where it is kept, such as files, disk drives, or backup tapes. This method focuses on how data is stored on a physical medium and often involves lower-level data access techniques.

Advantages:

- Comprehensive: Can retrieve all data stored in a physical medium, including archived or historical data that may not be accessible through logical methods.

- Versatility: Useful in forensic analysis, data recovery, and backup scenarios where complete data extraction is necessary.
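
By contrast, physical extraction works on the stored bytes directly. The sketch below reads a binary file of fixed-width records and decodes them with Python's `struct` module. The record layout (a 4-byte ID, a 10-byte name, and an 8-byte amount) is invented for illustration; real storage formats are far more involved and usually need format-specific tooling.

```python
import os
import struct
import tempfile

# Hypothetical on-disk layout: little-endian uint32 id, 10-byte name, float64 amount.
RECORD = struct.Struct("<I10sd")


def write_demo_file(path, rows):
    """Write rows as fixed-width binary records (names are null-padded)."""
    with open(path, "wb") as fh:
        for record_id, name, amount in rows:
            fh.write(RECORD.pack(record_id, name.encode("ascii"), amount))


def read_records(path):
    """Read the raw bytes and decode every complete record."""
    with open(path, "rb") as fh:
        raw = fh.read()
    usable = len(raw) - len(raw) % RECORD.size  # ignore a trailing partial record
    return [
        (record_id, name.rstrip(b"\x00").decode("ascii"), amount)
        for record_id, name, amount in RECORD.iter_unpack(raw[:usable])
    ]


def demo():
    """Round-trip two hypothetical records through a temporary file."""
    rows = [(1, "Acme", 120.0), (2, "Globex", 200.5)]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sales.bin")
        write_demo_file(path, rows)
        return read_records(path)


if __name__ == "__main__":
    print(demo())
```

Unlike the query-based approach, this code has to know the exact byte layout, which is what makes physical extraction comprehensive but harder to maintain.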

Benefits of automated data extraction

Automated data extraction offers numerous advantages for businesses, especially those that rely on large volumes of data for operations and decision-making. It gives organizations easier access to a wealth of data, enabling better insights and more data-driven decisions.

- Increased efficiency and speed: Automated data extraction enables rapid processing of vast data, minimizing the time required to complete tasks.

- Improved accuracy and reduced errors: Automating data capture reduces human error, leading to higher data extraction and processing accuracy.

- Cost savings and return on investment (ROI): By replacing manual data entry, companies can allocate resources more efficiently, resulting in substantial cost savings.

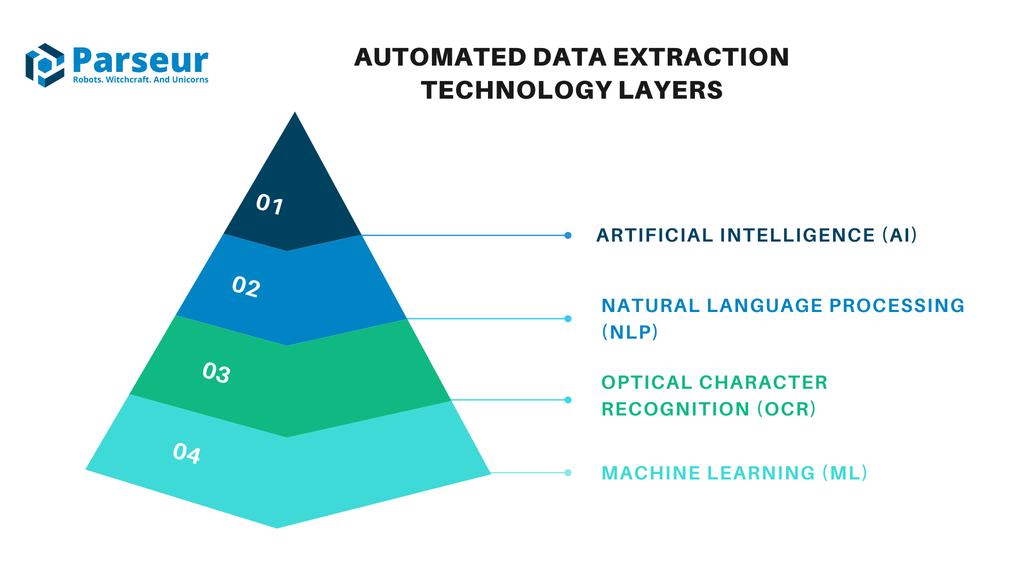

Technologies in Automated Data Extraction

Automated data extraction leverages a blend of advanced technologies to transform data from raw, often unstructured formats into organized, accessible information.

- Machine Learning (ML) Models: ML algorithms can adapt to different document structures, identifying patterns and extracting information based on previous interactions.

- Optical Character Recognition (OCR): OCR algorithms identify and analyze character patterns within images to recognize letters, words, and numbers, making it possible to digitize data from sources that would otherwise require manual entry.

- Natural Language Processing (NLP): Through NLP, automated data extraction systems can analyze context, sentiment, and the relationships between words, making it possible to extract insights from complex documents, such as emails, legal texts, or customer feedback.

- Artificial Intelligence (AI): Unlike traditional methods, AI can handle complex and dynamic data sources and adapt to various document types, layouts, and languages.

AI-based extraction techniques can save businesses 30–40% of their hours. - PwC Report

Automated data extraction for specific industries

Almost every industry must extract data to better understand its market, customers, or products. Here are the most common ones.

Finance

Financial institutions must process invoices, bank statements, and credit reports, ensuring accurate financial reporting and compliance.

Healthcare

AI enables fast and reliable processing of patient records, insurance claims, and medical reports. This helps healthcare providers enhance patient care and streamline administrative tasks.

Logistics and Supply Chain

Automated data extraction simplifies order processing, inventory management, and shipment tracking, ensuring supply chain operations run smoothly and customers receive timely updates.

Parseur as a data extraction tool

Parseur’s advanced AI-powered data extraction solution enables seamless, efficient, and reliable automation across various industries. Designed to cater to businesses with specific data processing needs, Parseur automates the capture and structuring of data from emails, PDFs, and other documents to minimize errors and maximize efficiency.

Bernard Rooney, the Managing Director of Bond Healthcare, says: "Parseur is a highly customisable product and has a solution for straightforward data extraction through to complex spreadsheets."

Key features of Parseur

- State-of-the-art AI engine: Parseur’s AI engine can now process documents up to 100 pages, making it suitable for businesses with high data volumes.

- Improved scanned document and image processing: Parseur’s upgraded OCR capabilities ensure high accuracy in parsing scanned documents, even those containing tables.

How does data extraction work?

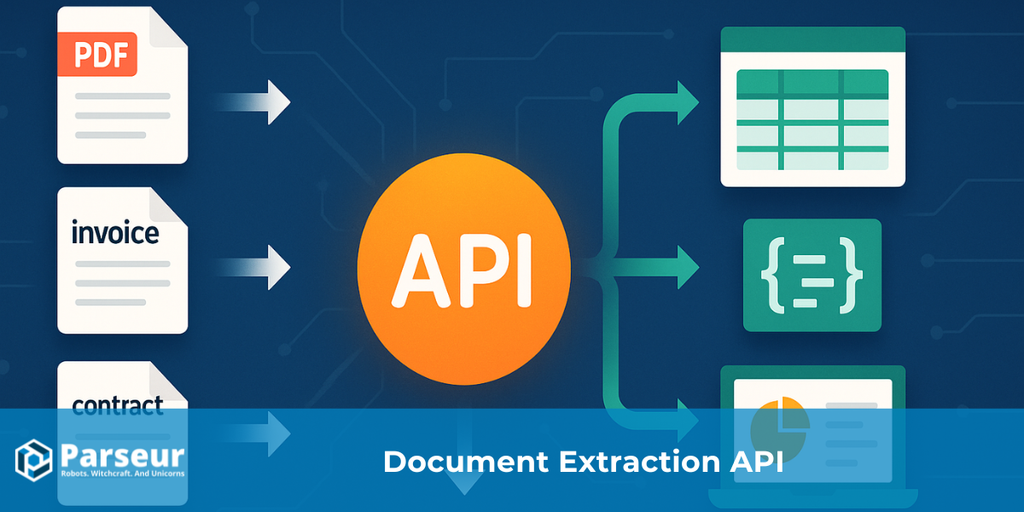

- Start by uploading your documents to Parseur via email, API, or the Parseur platform. Parseur accepts many file types, including PDFs, scanned images, and image files (BMP, PNG, JPEG, TIFF).

- Parseur’s AI engine detects document types, identifies key fields, and extracts data accordingly. You can create custom templates to ensure accurate results if specific extraction needs require further refinement.

- After extraction, Parseur organizes the data into your preferred format and seamlessly integrates it with applications, including CRM, ERP, and database systems. You can export data via CSV, Excel, or JSON formats or use Parseur’s integrations with tools like Zapier or Make to automate further workflows.
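
The export step can be pictured generically as serializing extracted records to JSON or CSV. The sketch below uses only Python's standard library and does not call Parseur's API; the records and their field names are hypothetical and do not reflect Parseur's actual output schema.

```python
import csv
import io
import json

# Hypothetical extracted records; field names are illustrative only.
EXTRACTED = [
    {"invoice_number": "INV-001", "vendor": "Acme", "total": 120.0},
    {"invoice_number": "INV-002", "vendor": "Globex", "total": 200.5},
]


def to_json(records):
    """Serialize records as a JSON array."""
    return json.dumps(records, indent=2)


def to_csv(records):
    """Serialize records as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_json(EXTRACTED))
    print(to_csv(EXTRACTED))
```

JSON suits application-to-application integrations, while CSV opens directly in spreadsheet tools.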

Future trends in automated data extraction

With advancements in AI and machine learning, the future of data extraction will likely see:

- Enhanced NLP Capabilities: AI-driven NLP is expected to improve context interpretation, enabling even more accurate extraction from complex text.

- Increased Integration with IoT: As IoT devices generate more data, automated extraction will be crucial in processing real-time information.

- Improved Customization and Scalability: Future solutions will offer more customization options to meet industry-specific needs.
