Data quality for Master Data Management (MDM) refers to the set of processes and rules (cleansing, matching, and enrichment) used to transform raw inputs into accurate, consistent master records ready for system-wide use.

Master Data Management (MDM) relies on high-quality, consistent data to function effectively. Whether you’re preparing data for reporting, analytics, or machine learning, raw data often contains inconsistencies, duplicates, and missing information.

Key Takeaways:

- High-quality data is the foundation for reliable MDM, accurate analytics, and effective machine learning.

- Cleansing, matching, and enrichment workflows systematically transform raw data into consistent, trustworthy master records.

- Tools like Parseur simplify extraction, normalization, and integration, accelerating MDM pipelines while reducing manual effort.

Reliable Master Data Management (MDM) and accurate machine learning outputs begin with high-quality data; however, raw datasets often contain typos, inconsistencies, duplicates, or missing fields that can undermine analytics, reporting, and operational decisions. High-quality data isn’t just a technical requirement; it’s a business imperative. When organizations rely on inconsistent, incomplete, or duplicate data, the effects ripple across every department, from finance and operations to customer experience and analytics.

According to KeyMakr, poor data quality costs companies an estimated $12.9 million a year on average through inefficiencies and errors. At a larger scale, 180 OPS reports that U.S. businesses lose around $3.1 trillion to low data quality, with losses equivalent to about 20% of their total business value. These figures show why proactive data quality management and Master Data Management (MDM) strategies are no longer optional. Investing in cleansing, matching, and enrichment not only reduces financial losses but also builds a trusted foundation for analytics, reporting, and machine learning initiatives.

Furthermore, Graphite Note reports that only about 10–20% of datasets used in AI projects meet the quality standards needed for reliable ML performance, and that up to 80% of project time is spent cleaning and preparing data before it can be used effectively.

The sections below include simple "raw → rule → cleaned" workflows you can apply directly to your datasets, a practical checklist to help your team improve data quality systematically, and notes on how tools like Parseur can support automation throughout the process.

Why Data Quality Matters for MDM and ML

High-quality data is the foundation for reliable Master Data Management and accurate machine learning outcomes. Poor data quality can have a ripple effect across systems, workflows, and business decisions. Key impacts include:

- Model Accuracy: Inconsistent or erroneous data can mislead ML models, producing inaccurate predictions or insights.

- Reporting Trust: Duplicate or incorrect records reduce confidence in business intelligence dashboards and operational reports.

- Automation Reliability: Automated workflows, such as invoice processing or customer notifications, depend on clean data to function correctly.

- Operational Costs: Errors caused by low-quality data, such as duplicate customer records, can lead to billing mistakes that are costly and time-consuming to fix manually.

Investing in data quality ensures systems, reports, and models are trustworthy, efficient, and sustainable, while reducing risk and wasted effort.

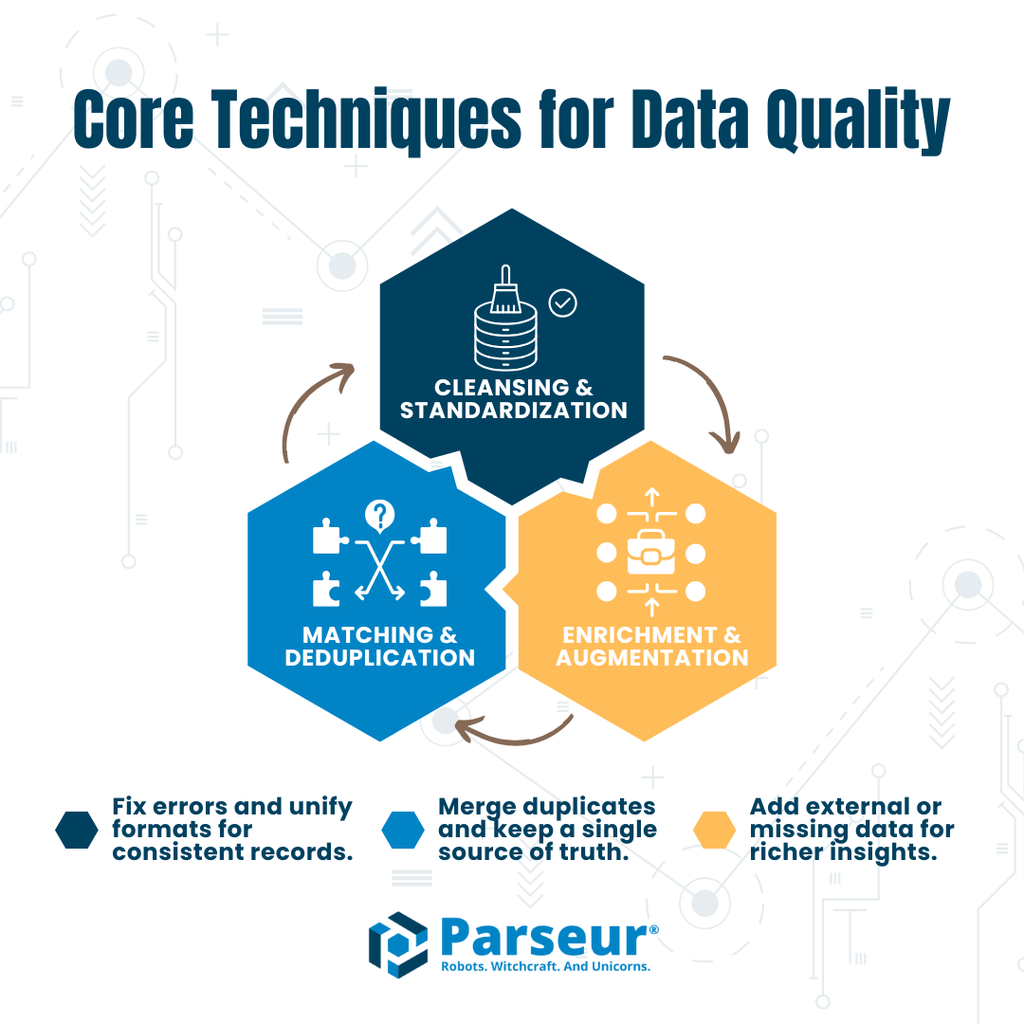

Core Techniques for Data Quality

Improving data quality in MDM revolves around three core techniques. Each addresses a common challenge in transforming raw inputs into accurate, consistent master records.

Below is an overview of these pillars; each is covered with examples and actionable rules in the sections that follow:

- Cleansing & Standardization – Correct errors, unify formats, and standardize entries to create a consistent foundation.

- Matching & Deduplication – Identify and merge duplicate or equivalent records to maintain a single source of truth.

- Enrichment & Augmentation – Fill missing information and append external data to improve completeness and usability.

Together, these techniques form a practical workflow for producing the high-quality data that MDM, analytics, and ML initiatives depend on.

Cleansing & Standardization

Data cleansing and standardization ensure that entries are consistent, machine-readable, and ready for use in MDM or ML. This process typically involves:

- Normalization: Standardizing text case, punctuation, and abbreviations.

- Parsing: Splitting compound fields like full names or addresses into structured components.

- Field Standardization: Converting dates, phone numbers, and other formats into a uniform structure.

Example 1 – Address:

- Raw: ACME Ltd., 1st Ave, NYC

- Rule: Expand abbreviations & parse components

- Clean: ACME Ltd. | First Avenue | New York, NY
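The address rule can be sketched as a small lookup-driven expander. This is a minimal sketch, assuming input laid out as "company, street, city"; the abbreviation tables (`ORDINALS`, `SUFFIXES`, `CITIES`) and the function names are hypothetical. It only expands abbreviations and splits components; it does not add a street number or postal code, which would require a separate address-verification lookup.

```python
# Hypothetical abbreviation tables; real pipelines load these from a reference
# source such as a postal authority's street-suffix list.
ORDINALS = {"1st": "First", "2nd": "Second", "3rd": "Third", "4th": "Fourth"}
SUFFIXES = {"ave": "Avenue", "st": "Street", "rd": "Road", "blvd": "Boulevard"}
CITIES = {"nyc": "New York, NY", "ldn": "London, UK"}


def expand_street(street: str) -> str:
    """Expand ordinal and street-suffix abbreviations token by token."""
    words = []
    for token in street.split():
        key = token.rstrip(".").lower()  # "Ave" / "St." -> "ave" / "st"
        if key in ORDINALS:
            words.append(ORDINALS[key])
        elif key in SUFFIXES:
            words.append(SUFFIXES[key])
        else:
            words.append(token)  # house numbers, street names stay as-is
    return " ".join(words)


def parse_address(raw: str) -> dict:
    """Split 'company, street, city' into components and expand each part."""
    parts = [p.strip() for p in raw.split(",")]
    if len(parts) != 3:
        raise ValueError(f"expected 'company, street, city', got {raw!r}")
    company, street, city = parts
    return {
        "company": company,
        "street": expand_street(street),
        "city": CITIES.get(city.lower(), city),  # unknown cities pass through
    }
```

For example, `" | ".join(parse_address("ACME Ltd., 1st Ave, NYC").values())` returns `ACME Ltd. | First Avenue | New York, NY`. Keeping the tables as data rather than code lets stewards extend them without touching the parser.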

Example 2 – Phone Number:

- Raw: +44 20 7946 0958

- Rule: Normalize to E.164 format

- Clean: +442079460958
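A minimal E.164 normalizer, sketched under the assumption that inputs either carry an international prefix (`+` or `00`) or arrive with a known default country code. `to_e164` and its `default_country_code` parameter are hypothetical names. Production systems usually rely on Google's libphonenumber (or its Python port, `phonenumbers`), which knows each country's numbering rules; this sketch only strips formatting and enforces the E.164 shape of "+" followed by at most 15 digits.

```python
import re

# E.164: country code never starts with 0; at most 15 digits in total.
E164_DIGITS = re.compile(r"[1-9]\d{1,14}")


def to_e164(raw: str, default_country_code: str = "") -> str:
    """Normalize a phone string to E.164, e.g. '+44 20 7946 0958' -> '+442079460958'."""
    s = raw.strip()
    digits = re.sub(r"\D", "", s)  # drop spaces, dashes, brackets, dots
    if s.startswith("+"):
        pass  # already in international form
    elif s.startswith("00"):
        digits = digits[2:]  # '00' international call prefix (common in Europe)
    elif default_country_code:
        # National format: drop one leading trunk '0' (correct for UK numbers,
        # wrong for some countries such as Italy) and prepend the country code.
        if digits.startswith("0"):
            digits = digits[1:]
        digits = default_country_code + digits
    else:
        raise ValueError(f"cannot infer a country code for {raw!r}")
    if not E164_DIGITS.fullmatch(digits):
        raise ValueError(f"not a valid E.164 number: {raw!r}")
    return "+" + digits
```

`to_e164("+44 20 7946 0958")`, `to_e164("0044 20 7946 0958")`, and `to_e164("020 7946 0958", default_country_code="44")` all return `+442079460958`.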

By systematically applying these rules, organizations reduce errors, improve search and matching accuracy, and lay the foundation for reliable MDM and analytics.

Matching & Deduplication

Matching and deduplication ensure that your MDM system maintains a single, accurate record for each entity, preventing duplicates and inconsistencies. Two common approaches are used:

- Deterministic Matching: Uses exact matches on key fields such as tax IDs, account numbers, or emails. This method is precise but may miss records with small variations.

- Fuzzy Matching: Handles near-matches by scoring similarity between fields (e.g., names, addresses, or phone numbers) and merging or flagging based on confidence thresholds.

Example 1 – Deterministic:

- Raw: Tax ID 123-45-6789 appears in two records

- Rule: Exact match on normalized tax ID → merge

- Clean: Single consolidated record
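Deterministic matching reduces to "normalize the key, then group by it." The sketch below assumes records are Python dicts with a `tax_id` field; the function names and the survivorship rule (first non-empty value wins) are hypothetical choices, and real MDM hubs usually make survivorship configurable per field.

```python
import re
from collections import defaultdict


def normalize_tax_id(raw) -> str:
    """Keep digits only, so '123-45-6789' and '123456789' compare equal."""
    return re.sub(r"\D", "", raw or "")


def merge_on_tax_id(records: list) -> list:
    """Merge records that share a normalized tax ID into one golden record."""
    groups = defaultdict(list)
    unmatched = []
    for rec in records:
        key = normalize_tax_id(rec.get("tax_id"))
        if key:
            groups[key].append(rec)
        else:
            unmatched.append(rec)  # no key: leave for fuzzy matching
    merged = []
    for key, group in groups.items():
        golden = {"tax_id": key}
        for rec in group:
            for fld, value in rec.items():
                # Hypothetical survivorship rule: first non-empty value wins.
                if fld not in golden and value:
                    golden[fld] = value
        merged.append(golden)
    return merged + unmatched
```

Two records with tax IDs `123-45-6789` and `123456789` collapse into one record that keeps the first non-empty name and fills a missing phone from the second record.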

Example 2 – Fuzzy:

- Raw: Jon Smith vs John S., same email, similar address

- Rule: Calculate a fuzzy score (0–1) → merge if score > 0.95, queue for review if 0.80–0.95

- Clean: Single record after review
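A fuzzy score can be built as a weighted sum of per-field similarities. This sketch uses the standard library's `difflib.SequenceMatcher.ratio()` as a stand-in similarity measure; dedicated matching tools more often use Jaro-Winkler or Levenshtein-based scores. The field weights and function names are hypothetical and should be tuned on labelled record pairs.

```python
from difflib import SequenceMatcher

# Hypothetical field weights; they sum to 1.0 so the score stays in [0, 1].
WEIGHTS = {"name": 0.3, "email": 0.4, "address": 0.3}


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(value.lower().split())


def similarity(a, b) -> float:
    """Similarity ratio in [0, 1]; 0.0 if either value is missing."""
    a, b = normalize(a or ""), normalize(b or "")
    if not a or not b:
        return 0.0  # two blanks should not count as a match
    return SequenceMatcher(None, a, b).ratio()


def match_score(r1: dict, r2: dict) -> float:
    """Weighted sum of per-field similarities between two records."""
    return sum(w * similarity(r1.get(f), r2.get(f)) for f, w in WEIGHTS.items())


a = {"name": "Jon Smith", "email": "jon.smith@acme.com", "address": "12 Baker St"}
b = {"name": "John S.", "email": "jon.smith@acme.com", "address": "12 Baker Street"}
score = match_score(a, b)
```

For the Jon Smith / John S. pair, the names alone score only 0.625, but the shared email and near-identical address lift the combined score to roughly 0.84, inside the manual-review band of the decision table below.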

Decision Table for Fuzzy Matching:

| Fuzzy Score | Action |
|---|---|
| > 0.95 | Auto-merge |
| 0.80–0.95 | Manual review |
| < 0.80 | No match |
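The decision table translates directly into a routing function. In this sketch, scores of exactly 0.95 and 0.80 go to manual review, which is one reading of the table's boundaries; the constant and function names are hypothetical.

```python
from collections import defaultdict

# Thresholds from the decision table above.
AUTO_MERGE_ABOVE = 0.95
REVIEW_FROM = 0.80


def route(score: float) -> str:
    """Map a fuzzy match score to an action."""
    if score > AUTO_MERGE_ABOVE:
        return "auto-merge"
    if score >= REVIEW_FROM:
        return "manual review"
    return "no match"


def triage(scored_pairs):
    """Group (pair_id, score) tuples into the three action queues."""
    queues = defaultdict(list)
    for pair_id, score in scored_pairs:
        queues[route(score)].append(pair_id)
    return dict(queues)
```

Keeping the thresholds as named constants makes it easy to tighten or relax them as steward feedback accumulates.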

By combining deterministic and fuzzy techniques with human-in-the-loop review, organizations can identify duplicates while minimizing errors, ensuring a reliable and high-quality master dataset ready for analytics, reporting, and automation.

Enrichment & Augmentation

Data enrichment enhances raw records by incorporating external information, generating new fields, or applying business rules to make datasets more comprehensive and actionable. Common techniques include:

- Third-Party Data: Adding firmographics, geocoding, or demographic information to fill gaps.

- Derived Fields: Calculating metrics such as customer lifetime value bands or risk scores.

- Business-Rule Enrichment: Inferring missing details based on existing fields, like country from phone number prefixes.
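Derived fields and business-rule enrichment can run as one pure function over a record. This sketch assumes phones are already in E.164 form; the calling-code table is a deliberately small, hypothetical subset, and the CLV band thresholds are illustrative placeholders rather than recommended values.

```python
from typing import Optional

# Hypothetical subset: E.164 calling code -> ISO 3166-1 alpha-2 country.
# "+1" is omitted on purpose: it is shared by the US, Canada and other
# North American Numbering Plan countries, so it cannot decide the country alone.
CALLING_CODES = {"+44": "GB", "+33": "FR", "+49": "DE", "+61": "AU", "+353": "IE"}

# Hypothetical CLV band upper bounds (exclusive), in account currency.
CLV_BANDS = [(1_000, "low"), (10_000, "medium")]


def infer_country(phone: str) -> Optional[str]:
    """Business rule: infer country from the calling-code prefix."""
    for length in (4, 3, 2):  # try the longest prefix first ("+353" before "+35")
        country = CALLING_CODES.get(phone[:length])
        if country:
            return country
    return None


def clv_band(lifetime_value: float) -> str:
    """Derived field: bucket customer lifetime value into a band."""
    for upper, band in CLV_BANDS:
        if lifetime_value < upper:
            return band
    return "high"


def enrich(record: dict) -> dict:
    """Return a copy of the record with inferred and derived fields added."""
    out = dict(record)
    if not out.get("country") and out.get("phone"):
        out["country"] = infer_country(out["phone"])
    if "lifetime_value" in out:
        out["clv_band"] = clv_band(out["lifetime_value"])
    return out
```

Only missing countries are filled, so a value a customer supplied is never overwritten by the inference rule.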

Example – Address Enrichment:

- Raw: 123 Main Street, Springfield

- Rule: Append geocode coordinates plus standardized state and postal codes

- Enriched: 123 Main Street | Springfield | IL | 62701 | Latitude: 39.7817 | Longitude: -89.6501
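Address enrichment is normally a call to a geocoding or address-verification service. This sketch replaces that service with a hypothetical in-memory gazetteer holding only the Springfield, IL values from the example above, and it assumes the state is available from another field on the record (for instance the account's billing region). Without a state, a bare "Springfield" is ambiguous, because several US states have one, so the record is flagged for review instead of guessed.

```python
from typing import Optional

# Hypothetical gazetteer standing in for a geocoding service.
# Keyed by (city, state) because a bare city name can be ambiguous.
GAZETTEER = {
    ("springfield", "IL"): {"postal_code": "62701", "lat": 39.7817, "lon": -89.6501},
}


def enrich_address(street: str, city: str, state: Optional[str]) -> dict:
    """Append postal code and coordinates, or flag the record for a steward."""
    record = {"street": street, "city": city, "state": state}
    match = GAZETTEER.get((city.strip().lower(), state)) if state else None
    if match is None:
        record["needs_review"] = True  # unknown or ambiguous location
    else:
        record.update(match)
    return record
```

`enrich_address("123 Main Street", "Springfield", "IL")` returns the street, city, and state plus `postal_code` 62701 and the coordinates; the same call with `state=None` comes back with `needs_review` set.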

Enrichment ensures MDM records are richer, more accurate, and ready for analytics, ML modeling, and operational decision-making, providing organizations with actionable insights beyond basic cleansing and deduplication.

Automation & Workflow Patterns

Effective data quality management combines automation with human oversight to maintain accurate, consistent master records at scale. Typical workflow patterns include:

- Batch Cleansing: Scheduled daily or weekly processes that normalize, standardize, and deduplicate large datasets, ensuring consistency across systems.

- Streaming / Real-Time Validation: Continuous validation of incoming records, immediately catching errors or inconsistencies before they enter production systems.

- Steward Queues for Exceptions: Records that fail rules or fall below confidence thresholds are routed to human data stewards for review, ensuring edge cases are handled accurately.

Automated rules handle the bulk of repetitive tasks—such as normalization, fuzzy matching, or enrichment—while human review focuses on ambiguous or high-risk cases. This hybrid approach ensures high-performing, reliable MDM, reducing operational costs, preventing errors, and maintaining trust in analytics and ML outputs.
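The streaming-validation and steward-queue patterns can be sketched as a router that checks each incoming record against a rule set and either accepts it or parks it, together with the names of the failed rules, for a data steward. The rules, regexes, and `ValidationRouter` class are hypothetical, and the email pattern is a loose sanity check rather than a full RFC 5322 validator. Calling `process` in a loop over a file gives the batch variant of the same pattern.

```python
import re
from dataclasses import dataclass, field

E164_RE = re.compile(r"^\+[1-9]\d{1,14}$")
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")

# Hypothetical rule set: field name -> predicate that must hold.
RULES = {
    "phone": lambda v: bool(v) and bool(E164_RE.match(v)),
    "email": lambda v: bool(v) and bool(EMAIL_RE.match(v)),
}


@dataclass
class ValidationRouter:
    accepted: list = field(default_factory=list)
    steward_queue: list = field(default_factory=list)

    def process(self, record: dict) -> bool:
        """Accept a valid record, or queue it for review with its failed rules."""
        failed = [name for name, rule in RULES.items() if not rule(record.get(name))]
        if failed:
            self.steward_queue.append({"record": record, "failed_rules": failed})
            return False
        self.accepted.append(record)
        return True


router = ValidationRouter()
router.process({"id": 1, "phone": "+442079460958", "email": "jon.smith@acme.com"})
router.process({"id": 2, "phone": "020 7946 0958", "email": "jon.smith@acme"})
```

The first record passes; the second lands in `steward_queue` with `failed_rules == ["phone", "email"]`, so the steward sees exactly which checks to fix.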

Metrics & Monitoring (DQ KPIs)

Tracking data quality requires clear, measurable indicators. Key KPIs for MDM and ML readiness include:

- Completeness: Percentage of required fields populated; aim for >95% across critical attributes.

- Uniqueness: Number of duplicate records per 10,000 entries; lower is better.

- Conformity: Compliance with standard formats (dates, phone numbers, addresses); monitor via automated validation rules.

- Accuracy: Verified through periodic sample audits against trusted sources.

- Timeliness: Freshness of records, ensuring recent updates are captured and outdated data is flagged.
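Three of these KPIs can be computed directly from the records. This sketch assumes a list of dict records; the required fields, the choice of email as the uniqueness key (exact match after lowercasing, a simple proxy for duplicates), and all function names are hypothetical. Accuracy and timeliness are omitted because they need a trusted reference source and update timestamps.

```python
import re

REQUIRED_FIELDS = ("customer_id", "name", "email")  # hypothetical critical attributes
E164_RE = re.compile(r"^\+[1-9]\d{1,14}$")


def completeness(records, fields=REQUIRED_FIELDS) -> float:
    """Percentage of required field slots that are populated."""
    total = len(records) * len(fields)
    filled = sum(1 for r in records for f in fields if r.get(f))
    return 100.0 * filled / total if total else 0.0


def duplicates_per_10k(records, key="email") -> float:
    """Records whose normalized key was already seen, per 10,000 records."""
    seen, dupes = set(), 0
    for r in records:
        value = (r.get(key) or "").strip().lower()
        if not value:
            continue
        if value in seen:
            dupes += 1
        else:
            seen.add(value)
    return 10_000 * dupes / len(records) if records else 0.0


def conformity(records, field="phone", pattern=E164_RE) -> float:
    """Percentage of populated values that match the expected format."""
    values = [r[field] for r in records if r.get(field)]
    ok = sum(1 for v in values if pattern.match(v))
    return 100.0 * ok / len(values) if values else 0.0


sample = [
    {"customer_id": "1", "name": "John Smith", "email": "john@acme.com", "phone": "+442079460958"},
    {"customer_id": "2", "name": "Jane Doe", "email": "JOHN@acme.com", "phone": "020 7946 0958"},
    {"customer_id": "3", "name": "", "email": "jane@acme.com", "phone": "+15551234567"},
    {"customer_id": "4", "name": "Bob", "email": "bob@acme.com"},
]
```

On `sample`, completeness is about 91.7% (one empty name), which misses the >95% target; the repeated email yields 2,500 duplicates per 10,000 records; and two of three populated phones conform to E.164.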

Suggested dashboard widgets: trend charts for completeness over time, duplicate heatmaps, format compliance alerts, sample audit results, and data freshness timers.

By monitoring these KPIs, organizations can proactively detect issues, prioritize data remediation, and maintain high-quality master records that support reliable reporting, analytics, and ML outcomes.

Practical Before/After Examples

Below are three short, actionable examples demonstrating how raw data can be transformed using cleansing, matching, and enrichment rules. Each block is presented in a raw → applied rule → cleaned format, making them easy to extract for automation or LLM use.

- Raw: jon.smith@acme → Rule: lowercase & validate domain → Clean: a lowercase address with a verified domain (e.g., jon.smith@acme.com)

- Raw: ACME Inc., 12-34 Baker St., LDN → Rule: expand & geocode → Clean: ACME Inc. | 12-34 Baker Street | London, UK | 51.5074,-0.1278

- Raw: CUST#123 / John S. → Rule: split ID and name, then resolve the full name from the master record → Clean: {customer_id: 123, name: "John Smith"}
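The email and customer-ID transformations can be sketched as two small functions. The email check only lowercases and validates: it cannot invent a missing domain suffix, so `jon.smith@acme` is returned as `None` for a steward to complete. Likewise, "John S." cannot become "John Smith" by normalization alone; the sketch resolves it against `MASTER_NAMES`, a hypothetical stand-in for a master-record lookup. The regexes and function names are also hypothetical.

```python
import re
from typing import Optional

EMAIL_RE = re.compile(r"^[a-z0-9._%+-]+@[a-z0-9-]+(\.[a-z0-9-]+)*\.[a-z]{2,}$")
CUST_RE = re.compile(r"^CUST#(\d+)\s*/\s*(.+)$")

# Hypothetical master lookup used to resolve abbreviated names.
MASTER_NAMES = {123: "John Smith"}


def clean_email(raw: str) -> Optional[str]:
    """Lowercase and validate; return None so the record can be flagged."""
    email = raw.strip().lower()
    return email if EMAIL_RE.match(email) else None


def split_customer(raw: str) -> dict:
    """Split 'CUST#<id> / <name>' into a customer ID and a name."""
    m = CUST_RE.match(raw.strip())
    if not m:
        raise ValueError(f"unrecognized customer field: {raw!r}")
    customer_id, name = int(m.group(1)), m.group(2).strip()
    # The master record's name wins when one exists; otherwise keep the parsed name.
    return {"customer_id": customer_id, "name": MASTER_NAMES.get(customer_id, name)}
```

`split_customer("CUST#123 / John S.")` returns `{"customer_id": 123, "name": "John Smith"}`, while `clean_email(" Jon.Smith@ACME.com ")` returns `jon.smith@acme.com`.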

These examples illustrate practical, reusable transformations that enhance data quality, eliminate duplicates, and facilitate the creation of enriched, standardized master records. By following similar raw → rule → clean workflows, teams can simplify MDM, support analytics, and ensure that data is ready for machine learning models.

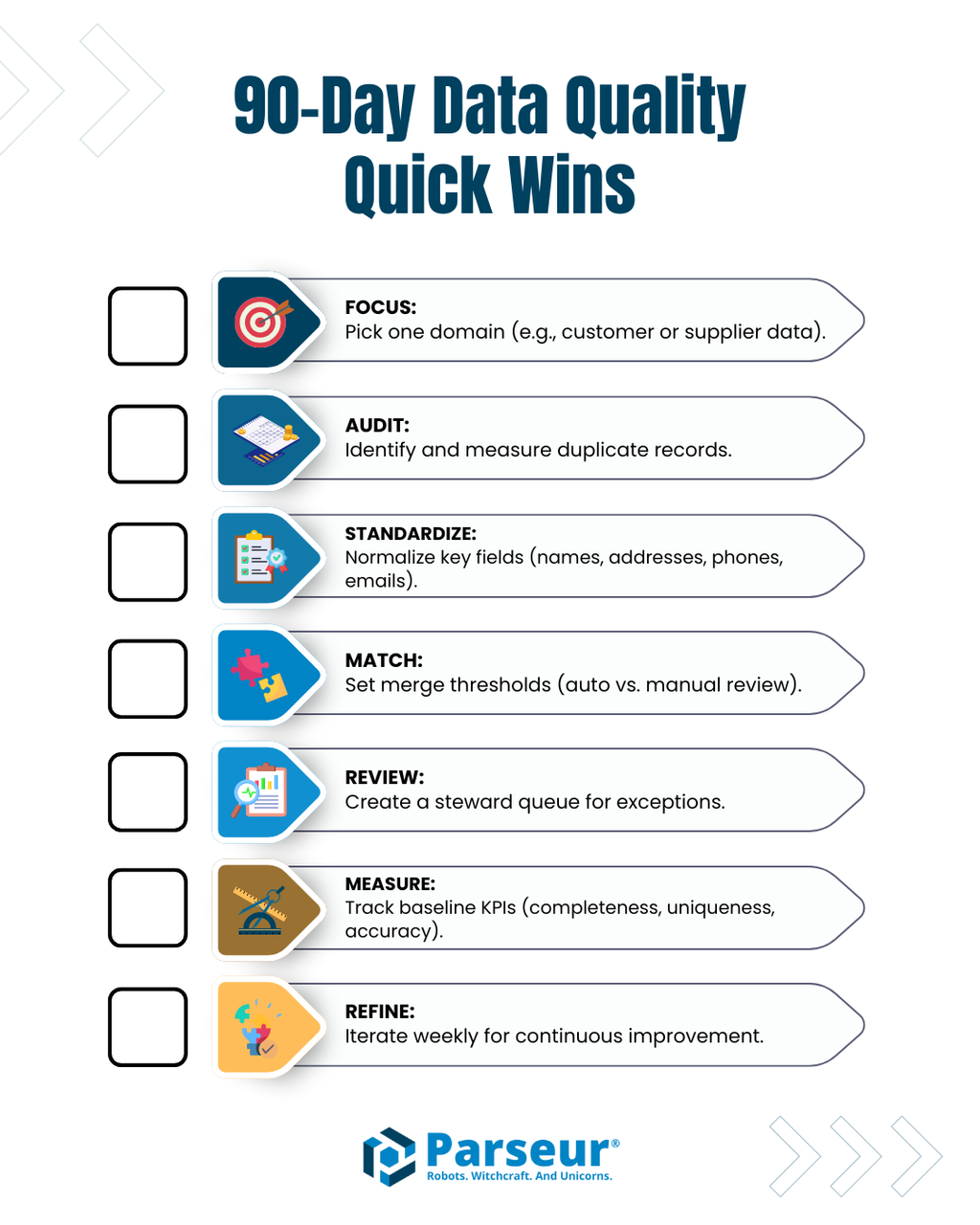

System Activation Checklist & 90-Day Quick Wins

To kickstart your data quality initiative, focus on measurable, high-impact actions within the first 90 days:

- Select a priority domain or dataset to focus on (e.g., customer records, supplier data).

- Run a duplicate audit to quantify existing redundancy and identify common patterns.

- Ensure consistent formatting for key fields, including names, addresses, phone numbers, and email addresses.

- Set deterministic and fuzzy matching thresholds to automatically merge high-confidence duplicates.

- Create a steward queue for medium-confidence matches or exceptions requiring human review.

- Measure baseline KPIs (completeness, uniqueness, conformity, accuracy, timeliness) to track progress.

- Iterate and refine rules weekly, adjusting normalization, matching, or enrichment processes based on observed results.

Following this checklist ensures your team can quickly improve data quality, reduce operational errors, and lay the foundation for reliable MDM, analytics, and machine learning outcomes.

Where Data Extraction Tools Fit

Document and data extraction tools, such as Parseur, play a key role in reducing manual data entry and accelerating MDM workflows. These tools can automatically extract structured fields from emails, PDFs, spreadsheets, or scanned documents, apply initial normalization rules, and feed cleaned records into MDM pipelines. By handling repetitive tasks reliably, extraction tools free teams to focus on validation, enrichment, and exception review.

Using extraction as the first step ensures that master records are entered into systems in a structured, consistent format, ready for downstream cleansing, matching, and enrichment processes.

Ensuring Sustainable Data Quality

Master Data Management and machine learning success depend on clean, complete, and consistent data. By applying practical techniques such as cleansing & standardization, matching & deduplication, and enrichment & augmentation, organizations can reduce errors, eliminate duplicates, and enhance record quality.

By combining automated rules with human review and leveraging extraction tools like Parseur, organizations can ensure efficient and reliable workflows. Following a structured checklist, monitoring KPIs, and applying simple “raw → rule → cleaned” transformations empowers teams to maintain high-quality data, improve operational efficiency, and unlock the full value of MDM and analytics initiatives.

Frequently Asked Questions

High-quality data is critical for Master Data Management (MDM) and machine learning. The following FAQs address common questions about data quality, cleansing, matching, enrichment, and the role of extraction tools, such as Parseur.

What is data cleansing in MDM?

Data cleansing corrects and standardizes raw records: it normalizes formats, parses compound fields, and removes obvious errors to create consistent master records.

How do matching and deduplication work?

Matching identifies duplicate or equivalent records using deterministic (exact) or fuzzy (similarity-based) methods. Deduplication then merges confirmed duplicates and routes ambiguous matches to human review.

What is data enrichment?

Enrichment adds external information, derived metrics, or inferred values to fill gaps in records, making data more complete, actionable, and analytics-ready.

How do automation tools like Parseur fit into MDM?

Extraction tools like Parseur reduce manual entry by automatically capturing structured fields from documents, applying initial normalization, and feeding records into MDM pipelines.

Which KPIs should I track for data quality?

Track completeness, uniqueness, conformity, accuracy, and timeliness to monitor and maintain high-quality master data.

Can these techniques improve machine learning outcomes?

Yes. Clean, standardized, and enriched data generally leads to more accurate models, better predictions, and more reliable analytics outputs.
